To make your video chat app truly interactive, it’s important to integrate screen share into your Flutter video chat. Whether it’s teachers showing lessons, coworkers reviewing documents, or friends sharing photos, screen sharing turns a simple video call into a powerful collaboration tool.

Flutter lets you build for both Android and iOS, but adding screen sharing requires platform-specific handling. Since Android and iOS use different methods to capture the screen, you’ll need to bridge Flutter with native code on each platform to capture, encode, and stream the screen content in real time.

In this guide, we’ll walk you through how to implement screen sharing in your Flutter video chat app, step by step with ZEGOCLOUD.

How to Integrate Screen Share into Flutter Video Chat App

Flutter handles video rendering differently than native mobile apps. Most video calling packages struggle with switching between camera feeds and screen content smoothly. You need to manage platform views, texture renderers, and video source switching without breaking the existing video call flow.

ZEGOCLOUD’s Flutter SDK solves these rendering challenges with built-in screen capture support. We’ll add screen sharing to a working Flutter video chat app using our unified APIs. The capture and streaming code is shared between iOS and Android; the only native pieces in this guide are a small iOS permission check and an optional iOS Broadcast Upload Extension.

You’ll capture device screens, stream that content to other users, and handle the complex video source switching automatically. The complete solution includes permission management, UI controls, and error handling that works reliably across different devices.

Prerequisites

Before building the screen sharing functionality, ensure you have these essential components:

- ZEGOCLOUD developer account – sign up

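- Flutter 3.0 or later on the stable channel (the sample code uses the super.key constructor shorthand, which requires Dart 2.17, shipped with Flutter 3.0).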

- Valid AppID and AppSign credentials from the ZEGOCLOUD admin console (the engine setup in Step 4 authenticates with an AppSign).

- iOS 12.0+ devices for iOS testing (iPhone X or newer recommended for performance).

- Android 5.0+ devices with screen recording capabilities enabled.

- Active internet connection for real-time streaming.

- Basic Flutter development experience with state management.

For optimal testing results, use physical devices rather than simulators since screen capture requires actual hardware capabilities that emulators cannot provide reliably.

Step 1. Flutter Project Setup and Dependencies

Start by creating a new Flutter project or opening your existing video chat application. Configure the project structure to support cross-platform screen sharing capabilities. Follow the steps below to do so:

- Create a new Flutter project if you don’t have one already:

flutter create flutter_screen_share

cd flutter_screen_share

- Add the ZEGOCLOUD Express Engine dependency to your pubspec.yaml file:

dependencies:
  flutter:
    sdk: flutter
  zego_express_engine: ^3.14.5
  permission_handler: ^10.4.3

dev_dependencies:
  flutter_test:
    sdk: flutter
  flutter_lints: ^2.0.0

- Install the dependencies by running the package installation command:

flutter pub get

The permission_handler package helps manage runtime permissions across both platforms, while the ZEGO Express Engine provides all video calling and screen sharing functionality.

Step 2. Platform Permission Configuration

Configure permissions for both Android and iOS to enable camera access, microphone usage, and screen recording capabilities.

Android

Update your Android manifest file at android/app/src/main/AndroidManifest.xml:

<manifest xmlns:android="http://schemas.android.com/apk/res/android"

package="com.example.flutter_screen_share">

<!-- Basic video call permissions -->

<uses-permission android:name="android.permission.CAMERA" />

<uses-permission android:name="android.permission.RECORD_AUDIO" />

<uses-permission android:name="android.permission.INTERNET" />

<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />

<uses-permission android:name="android.permission.ACCESS_WIFI_STATE" />

<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS" />

<uses-permission android:name="android.permission.BLUETOOTH" />

<!-- Screen sharing specific permissions -->

<uses-permission android:name="android.permission.FOREGROUND_SERVICE" />

<uses-permission android:name="android.permission.FOREGROUND_SERVICE_MEDIA_PROJECTION" />

<uses-permission android:name="android.permission.SYSTEM_ALERT_WINDOW" />

<!-- Hardware requirements -->

<uses-feature android:name="android.hardware.camera" android:required="true" />

<uses-feature android:name="android.hardware.camera.autofocus" />

<uses-feature android:glEsVersion="0x00020000" android:required="true" />

<application

android:label="Flutter Screen Share"

android:icon="@mipmap/ic_launcher">

<activity

android:name=".MainActivity"

android:exported="true"

android:launchMode="singleTop"

android:theme="@style/LaunchTheme"

android:configChanges="orientation|keyboardHidden|keyboard|screenSize|smallestScreenSize|locale|layoutDirection|fontScale|screenLayout|density|uiMode"

android:hardwareAccelerated="true"

android:windowSoftInputMode="adjustResize">

<intent-filter android:autoVerify="true">

<action android:name="android.intent.action.MAIN"/>

<category android:name="android.intent.category.LAUNCHER"/>

</intent-filter>

</activity>

</application>

</manifest>

iOS

Configure iOS permissions in ios/Runner/Info.plist:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

<plist version="1.0">

<dict>

<!-- Existing Flutter configuration -->

<!-- Camera and microphone permissions -->

<key>NSCameraUsageDescription</key>

<string>This app needs camera access for video calls</string>

<key>NSMicrophoneUsageDescription</key>

<string>This app needs microphone access for voice and video calls</string>

<!-- Screen recording permission (iOS 12+) -->

<key>NSScreenRecordingUsageDescription</key>

<string>This app needs screen recording permission to share your screen</string>

<!-- Background modes for screen sharing -->

<key>UIBackgroundModes</key>

<array>

<string>audio</string>

<string>voip</string>

</array>

<!-- Enable embedded platform views (used to render video) -->

<key>io.flutter.embedded_views_preview</key>

<true/>

</dict>

</plist>

These permissions ensure your app can access all necessary hardware features for comprehensive screen sharing functionality across both platforms.

Step 3. User Interface Design and Layout

Create an intuitive interface that supports both video calling and screen sharing controls. Design the layout to accommodate switching between camera and screen content seamlessly.

Create the main video call screen in lib/screens/video_call_screen.dart:

import 'package:flutter/material.dart';

import 'package:zego_express_engine/zego_express_engine.dart';

class VideoCallScreen extends StatefulWidget {

final String roomId;

final String userId;

const VideoCallScreen({

super.key,

required this.roomId,

required this.userId,

});

@override

State<VideoCallScreen> createState() => _VideoCallScreenState();

}

class _VideoCallScreenState extends State<VideoCallScreen> {

Widget? localVideoWidget;

Widget? remoteVideoWidget;

bool isCallActive = false;

bool isScreenSharing = false;

bool isMicMuted = false;

bool isCameraEnabled = true;

int? localViewID;

int? remoteViewID;

@override

Widget build(BuildContext context) {

return Scaffold(

backgroundColor: Colors.black,

body: SafeArea(

child: Stack(

children: [

// Main video display area

Positioned.fill(

child: localVideoWidget ?? Container(

color: Colors.grey[900],

child: const Center(

child: Icon(

Icons.videocam_off,

size: 64,

color: Colors.white54,

),

),

),

),

// Remote user video overlay

if (remoteVideoWidget != null)

Positioned(

top: 40,

right: 20,

child: Container(

width: 120,

height: 160,

decoration: BoxDecoration(

border: Border.all(color: Colors.white, width: 2),

borderRadius: BorderRadius.circular(12),

),

child: ClipRRect(

borderRadius: BorderRadius.circular(10),

child: remoteVideoWidget,

),

),

),

// Screen sharing indicator

if (isScreenSharing)

Positioned(

top: 100, // Placed below the room ID label (top: 60) so the two badges do not overlap

left: 20,

child: Container(

padding: const EdgeInsets.symmetric(horizontal: 12, vertical: 6),

decoration: BoxDecoration(

color: Colors.red,

borderRadius: BorderRadius.circular(16),

),

child: const Row(

mainAxisSize: MainAxisSize.min,

children: [

Icon(Icons.screen_share, color: Colors.white, size: 16),

SizedBox(width: 4),

Text(

'Sharing Screen',

style: TextStyle(color: Colors.white, fontSize: 12),

),

],

),

),

),

// Room ID display

Positioned(

top: 60,

left: 20,

child: Container(

padding: const EdgeInsets.symmetric(horizontal: 12, vertical: 6),

decoration: BoxDecoration(

color: Colors.black54,

borderRadius: BorderRadius.circular(16),

),

child: Text(

'Room: ${widget.roomId}',

style: const TextStyle(color: Colors.white, fontSize: 12),

),

),

),

// Control buttons

Positioned(

bottom: 40,

left: 20,

right: 20,

child: Row(

mainAxisAlignment: MainAxisAlignment.spaceEvenly,

children: [

// Microphone toggle

CircleAvatar(

radius: 28,

backgroundColor: isMicMuted ? Colors.red : Colors.white24,

child: IconButton(

onPressed: toggleMicrophone,

icon: Icon(

isMicMuted ? Icons.mic_off : Icons.mic,

color: Colors.white,

),

),

),

// Screen share toggle

CircleAvatar(

radius: 28,

backgroundColor: isScreenSharing ? Colors.blue : Colors.white24,

child: IconButton(

onPressed: isCallActive ? toggleScreenShare : null,

icon: Icon(

isScreenSharing ? Icons.stop_screen_share : Icons.screen_share,

color: Colors.white,

),

),

),

// Camera toggle

CircleAvatar(

radius: 28,

backgroundColor: !isCameraEnabled ? Colors.red : Colors.white24,

child: IconButton(

onPressed: toggleCamera,

icon: Icon(

isCameraEnabled ? Icons.videocam : Icons.videocam_off,

color: Colors.white,

),

),

),

// End call

CircleAvatar(

radius: 28,

backgroundColor: Colors.red,

child: IconButton(

onPressed: endCall,

icon: const Icon(

Icons.call_end,

color: Colors.white,

),

),

),

],

),

),

],

),

),

);

}

// Placeholder methods - will be implemented in following steps

void toggleMicrophone() {}

void toggleScreenShare() {}

void toggleCamera() {}

void endCall() {}

}

Step 4. ZEGO Engine Initialization and Configuration

Initialize the ZEGO Express Engine with proper configuration for screen sharing capabilities. Set up the foundational video calling infrastructure.
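
Because initialization is asynchronous, two parts of the app can ask for the engine at the same time. A plain boolean guard only flips after the first call finishes, so overlapping calls could both start the engine. The sketch below shows one way to avoid that by caching the in-flight Future. It is plain Dart with no ZEGO calls; EngineInitializer and the starter callback are hypothetical names used only for illustration.

```dart
import 'dart:async';

/// Hypothetical stand-in for the real engine start-up call.
typedef EngineStarter = Future<void> Function();

class EngineInitializer {
  EngineInitializer(this._start);

  final EngineStarter _start;

  // Shared by every caller once initialization has begun.
  Future<void>? _pending;

  /// Runs the starter at most once, even when called concurrently.
  Future<void> ensureInitialized() => _pending ??= _run();

  Future<void> _run() async {
    try {
      // Future.sync turns a synchronous throw into a failed Future.
      await Future<void>.sync(_start);
    } catch (_) {
      _pending = null; // Allow a retry after a failed start.
      rethrow;
    }
  }
}

Future<void> main() async {
  var startCount = 0;
  final initializer = EngineInitializer(() async {
    startCount++;
    await Future<void>.delayed(const Duration(milliseconds: 10));
  });

  // Two overlapping calls share one underlying start.
  await Future.wait([
    initializer.ensureInitialized(),
    initializer.ensureInitialized(),
  ]);
  print('Engine started $startCount time(s)'); // Engine started 1 time(s)
}
```

In a service class, the starter callback would wrap the actual engine creation call, and the cached Future would take the place of the boolean flag.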

Create a service class for managing ZEGO engine operations in lib/services/zego_service.dart:

import 'package:flutter/foundation.dart';

import 'package:zego_express_engine/zego_express_engine.dart';

class ZegoService {

static const int appID = 0; // Replace 0 with your actual App ID (an integer)

static const String appSign = 'your_app_sign_here'; // Replace with your App Sign

static ZegoService? _instance;

static ZegoService get instance => _instance ??= ZegoService._internal();

ZegoService._internal();

bool _isEngineCreated = false;

ZegoScreenCaptureSource? _screenCaptureSource;

Future<void> initializeEngine() async {

if (_isEngineCreated) return;

try {

// Create engine profile optimized for video calling and screen sharing

final profile = ZegoEngineProfile(

appID,

ZegoScenario.Default,

appSign: kIsWeb ? null : appSign,

enablePlatformView: true, // Required for video rendering

);

// Initialize the ZEGO Express Engine

await ZegoExpressEngine.createEngineWithProfile(profile);

_isEngineCreated = true;

// Set up basic engine configuration

await _configureEngine();

debugPrint('ZEGO Engine initialized successfully');

} catch (error) {

debugPrint('Failed to initialize ZEGO Engine: $error');

rethrow;

}

}

Future<void> _configureEngine() async {

// Enable hardware acceleration for better performance

await ZegoExpressEngine.instance.enableHardwareEncoder(true);

// Configure audio settings using the SDK's standard-quality preset

await ZegoExpressEngine.instance.setAudioConfig(

ZegoAudioConfig.preset(ZegoAudioConfigPreset.StandardQuality),

);

// Set video configuration for screen sharing: start from the 720p preset,

// then lower the frame rate and bitrate

final videoConfig = ZegoVideoConfig.preset(ZegoVideoConfigPreset.Preset720P)

..fps = 15 // Frame rate

..bitrate = 1200; // Bitrate

await ZegoExpressEngine.instance.setVideoConfig(videoConfig);

}

Future<ZegoScreenCaptureSource?> createScreenCaptureSource() async {

if (!_isEngineCreated) {

throw Exception('ZEGO Engine not initialized');

}

try {

_screenCaptureSource = await ZegoExpressEngine.instance.createScreenCaptureSource();

debugPrint('Screen capture source created successfully');

return _screenCaptureSource;

} catch (error) {

debugPrint('Failed to create screen capture source: $error');

return null;

}

}

Future<void> destroyEngine() async {

if (!_isEngineCreated) return;

try {

// Clean up screen capture source

if (_screenCaptureSource != null) {

await _screenCaptureSource!.stopCapture();

_screenCaptureSource = null;

}

// Destroy the engine and release resources

await ZegoExpressEngine.destroyEngine();

_isEngineCreated = false;

debugPrint('ZEGO Engine destroyed successfully');

} catch (error) {

debugPrint('Error destroying ZEGO Engine: $error');

}

}

bool get isEngineCreated => _isEngineCreated;

ZegoScreenCaptureSource? get screenCaptureSource => _screenCaptureSource;

}

Step 5. Permission Management and Runtime Requests

Implement comprehensive permission handling that gracefully requests and manages the various permissions required for video calling and screen sharing.
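
Handling permissions gracefully usually means deciding what to do after the results come back, not just checking whether everything was granted. The sketch below is a plain Dart illustration of that decision. GrantState, NextStep, and decideNextStep are hypothetical names, and the three states are a simplified assumption modeled on the granted, denied, and permanently denied results that permission plugins typically report.

```dart
/// Hypothetical, simplified mirror of a runtime permission result.
enum GrantState { granted, denied, permanentlyDenied }

/// What the app could do next once all results are known.
enum NextStep { proceed, askAgain, openSettings }

/// Picks the next step from a map of permission name to result.
NextStep decideNextStep(Map<String, GrantState> results) {
  // A permanent denial can only be fixed from the system settings page.
  if (results.values.contains(GrantState.permanentlyDenied)) {
    return NextStep.openSettings;
  }
  // A plain denial can still be retried with another in-app request.
  if (results.values.contains(GrantState.denied)) {
    return NextStep.askAgain;
  }
  return NextStep.proceed;
}

void main() {
  final results = {
    'camera': GrantState.granted,
    'microphone': GrantState.denied,
  };
  print(decideNextStep(results)); // NextStep.askAgain
}
```

Keeping this decision in a pure function makes it easy to unit test separately from the dialogs that act on it.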

Create a permission manager in lib/services/permission_service.dart:

import 'dart:io' show Platform;

import 'package:flutter/foundation.dart';
import 'package:flutter/services.dart';
import 'package:permission_handler/permission_handler.dart';
// Prefixed import so the wrapper at the bottom of this class can reach the
// package's top-level openAppSettings() function
import 'package:permission_handler/permission_handler.dart' as ph;

class PermissionService {
  static const _methodChannel = MethodChannel('screen_share_permissions');

  static Future<bool> requestBasicPermissions() async {
    final permissions = [
      Permission.camera,
      Permission.microphone,
    ];

    final statuses = await permissions.request();

    // Every requested permission must be granted
    return permissions.every(
      (permission) => statuses[permission] == PermissionStatus.granted,
    );
  }

  static Future<bool> requestScreenRecordingPermission() async {
    try {
      // For Android, screen recording permission is handled by MediaProjection
      // For iOS, this checks for screen recording capability
      if (Platform.isAndroid) {
        return await _requestAndroidScreenPermission();
      } else if (Platform.isIOS) {
        return await _requestIOSScreenPermission();
      }
      return false;
    } catch (error) {
      debugPrint('Error requesting screen recording permission: $error');
      return false;
    }
  }

  static Future<bool> _requestAndroidScreenPermission() async {
    // Android MediaProjection permission is requested through the ZEGO SDK
    // when starting screen capture, so there is nothing to request here
    return true;
  }

  static Future<bool> _requestIOSScreenPermission() async {
    try {
      // iOS screen recording permission is handled through system dialogs
      // Check if screen recording is available
      final result = await _methodChannel
          .invokeMethod<bool>('checkScreenRecordingPermission');
      return result ?? false;
    } catch (error) {
      debugPrint('iOS screen permission error: $error');
      return false;
    }
  }

  static Future<PermissionStatus> getPermissionStatus(Permission permission) async {
    return await permission.status;
  }

  static Future<bool> openAppSettings() async {
    // Call the package function through the ph prefix; an unprefixed call
    // would resolve to this method and recurse forever
    return await ph.openAppSettings();
  }

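}

Add platform-specific permission checking for iOS by updating ios/Runner/AppDelegate.swift. The code below replaces the AppDelegate class that the Flutter template already defines, so it should not be added as a second file: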

import Flutter

import UIKit

import ReplayKit

@UIApplicationMain

@objc class AppDelegate: FlutterAppDelegate {

override func application(

_ application: UIApplication,

didFinishLaunchingWithOptions launchOptions: [UIApplication.LaunchOptionsKey: Any]?

) -> Bool {

let controller : FlutterViewController = window?.rootViewController as! FlutterViewController

let permissionChannel = FlutterMethodChannel(name: "screen_share_permissions",

binaryMessenger: controller.binaryMessenger)

permissionChannel.setMethodCallHandler({

(call: FlutterMethodCall, result: @escaping FlutterResult) -> Void in

switch call.method {

case "checkScreenRecordingPermission":

self.checkScreenRecordingPermission(result: result)

default:

result(FlutterMethodNotImplemented)

}

})

GeneratedPluginRegistrant.register(with: self)

return super.application(application, didFinishLaunchingWithOptions: launchOptions)

}

private func checkScreenRecordingPermission(result: @escaping FlutterResult) {

if #available(iOS 12.0, *) {

// Check if screen recording is available

result(RPScreenRecorder.shared().isAvailable)

} else {

result(false)

}

}

}

Step 6. Core Video Calling Implementation

Build the foundational video calling functionality that will serve as the base for screen sharing features. Implement room management, stream publishing, and stream playing.
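
The publishing code in this step names its stream by joining the room ID, the user ID, and a "camera" suffix. As a small illustration of that naming convention, the sketch below builds such IDs and reads the suffix back. The StreamKind enum, the "screen" suffix, and both helper functions are hypothetical; this guide itself only ever publishes a stream with the camera suffix.

```dart
/// Kind of media carried by a published stream (hypothetical naming).
enum StreamKind { camera, screen }

/// Builds an ID of the form roomId_userId_kind.
String buildStreamId(String roomId, String userId, StreamKind kind) =>
    '${roomId}_${userId}_${kind.name}';

/// Reads the kind from the ID suffix, or returns null when the ID does not
/// end with a known suffix. Only the suffix is inspected, so room and user
/// IDs that contain underscores are still handled.
StreamKind? kindOfStream(String streamId) {
  for (final kind in StreamKind.values) {
    if (streamId.endsWith('_${kind.name}')) return kind;
  }
  return null;
}

void main() {
  final id = buildStreamId('room42', 'alice', StreamKind.camera);
  print(id); // room42_alice_camera
  print(kindOfStream(id)); // StreamKind.camera
  print(kindOfStream('legacy-stream')); // null
}
```

A receiver could use a helper like this to decide how to lay out an incoming stream, for example fitting screen content instead of cropping it.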

Extend the video call screen with core calling functionality:

// Add these imports to the existing video_call_screen.dart

import '../services/zego_service.dart';

import '../services/permission_service.dart';

// Add these methods to the _VideoCallScreenState class

class _VideoCallScreenState extends State<VideoCallScreen> {

// ... existing widget code ...

@override

void initState() {

super.initState();

initializeVideoCall();

}

Future<void> initializeVideoCall() async {

try {

// Request basic permissions first

final permissionsGranted = await PermissionService.requestBasicPermissions();

if (!permissionsGranted) {

_showPermissionDialog();

return;

}

// Initialize ZEGO engine

await ZegoService.instance.initializeEngine();

// Set up event handlers

setupZegoEventHandlers();

// Start the video call

await startVideoCall();

} catch (error) {

debugPrint('Error initializing video call: $error');

_showErrorDialog('Failed to initialize video call: $error');

}

}

void setupZegoEventHandlers() {

// Handle room state changes

ZegoExpressEngine.onRoomStateUpdate = (roomID, state, errorCode, extendedData) {

debugPrint('Room state update: $state, error: $errorCode');

if (mounted) {

setState(() {

isCallActive = (state == ZegoRoomState.Connected);

});

if (errorCode != 0) {

_showErrorDialog('Room connection error: $errorCode');

}

}

};

// Handle stream updates from other participants

ZegoExpressEngine.onRoomStreamUpdate = (roomID, updateType, streamList, extendedData) {

debugPrint('Stream update: $updateType, streams: ${streamList.length}');

if (mounted) {

for (final stream in streamList) {

if (updateType == ZegoUpdateType.Add) {

startPlayingRemoteStream(stream.streamID);

} else {

stopPlayingRemoteStream(stream.streamID);

}

}

}

};

// Handle user presence updates

ZegoExpressEngine.onRoomUserUpdate = (roomID, updateType, userList) {

debugPrint('User update: $updateType, users: ${userList.length}');

for (final user in userList) {

final action = updateType == ZegoUpdateType.Add ? 'joined' : 'left';

_showSnackBar('${user.userName} $action the call');

}

};

// Handle publishing state changes

ZegoExpressEngine.onPublisherStateUpdate = (streamID, state, errorCode, extendedData) {

debugPrint('Publisher state: $state, error: $errorCode');

if (errorCode != 0) {

_showErrorDialog('Publishing error: $errorCode');

}

};

}

Future<void> startVideoCall() async {

try {

// Create user object

final user = ZegoUser(widget.userId, widget.userId);

// Configure room settings

final roomConfig = ZegoRoomConfig.defaultConfig()

..isUserStatusNotify = true;

// Join the room

final loginResult = await ZegoExpressEngine.instance.loginRoom(

widget.roomId,

user,

config: roomConfig,

);

if (loginResult.errorCode == 0) {

// Start local preview

await startLocalPreview();

// Start publishing local stream

await startPublishingStream();

setState(() {

isCallActive = true;

});

_showSnackBar('Video call started successfully');

} else {

throw Exception('Failed to join room: ${loginResult.errorCode}');

}

} catch (error) {

debugPrint('Error starting video call: $error');

_showErrorDialog('Failed to start video call: $error');

}

}

Future<void> startLocalPreview() async {

try {

final widget = await ZegoExpressEngine.instance.createCanvasView((viewID) {

localViewID = viewID;

final canvas = ZegoCanvas(viewID, viewMode: ZegoViewMode.AspectFill);

ZegoExpressEngine.instance.startPreview(canvas: canvas);

});

if (mounted) {

setState(() {

localVideoWidget = widget;

});

}

} catch (error) {

debugPrint('Error starting local preview: $error');

}

}

Future<void> startPublishingStream() async {

try {

final streamID = '${widget.roomId}_${widget.userId}_camera';

await ZegoExpressEngine.instance.startPublishingStream(streamID);

debugPrint('Started publishing stream: $streamID');

} catch (error) {

debugPrint('Error publishing stream: $error');

}

}

Future<void> startPlayingRemoteStream(String streamID) async {

try {

final widget = await ZegoExpressEngine.instance.createCanvasView((viewID) {

remoteViewID = viewID;

final canvas = ZegoCanvas(viewID, viewMode: ZegoViewMode.AspectFill);

ZegoExpressEngine.instance.startPlayingStream(streamID, canvas: canvas);

});

if (mounted) {

setState(() {

remoteVideoWidget = widget;

});

}

} catch (error) {

debugPrint('Error playing remote stream: $error');

}

}

Future<void> stopPlayingRemoteStream(String streamID) async {

try {

await ZegoExpressEngine.instance.stopPlayingStream(streamID);

if (remoteViewID != null) {

await ZegoExpressEngine.instance.destroyCanvasView(remoteViewID!);

}

if (mounted) {

setState(() {

remoteVideoWidget = null;

remoteViewID = null;

});

}

} catch (error) {

debugPrint('Error stopping remote stream: $error');

}

}

// Helper methods for UI feedback

void _showSnackBar(String message) {

ScaffoldMessenger.of(context).showSnackBar(

SnackBar(content: Text(message), duration: const Duration(seconds: 2)),

);

}

void _showErrorDialog(String message) {

showDialog(

context: context,

builder: (context) => AlertDialog(

title: const Text('Error'),

content: Text(message),

actions: [

TextButton(

onPressed: () => Navigator.of(context).pop(),

child: const Text('OK'),

),

],

),

);

}

void _showPermissionDialog() {

showDialog(

context: context,

builder: (context) => AlertDialog(

title: const Text('Permissions Required'),

content: const Text('Camera and microphone permissions are required for video calls.'),

actions: [

TextButton(

onPressed: () => Navigator.of(context).pop(),

child: const Text('Cancel'),

),

TextButton(

onPressed: () {

Navigator.of(context).pop();

PermissionService.openAppSettings();

},

child: const Text('Settings'),

),

],

),

);

}

}

Step 7. Screen Sharing Implementation

Implement the screen sharing functionality that allows users to switch between camera and screen content during video calls.
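
Starting and stopping capture both take time, so a user can tap the share button again while a switch is still in progress. The sketch below shows one possible guard: a four-state controller that ignores taps during a transition. It is plain Dart; ShareState, ScreenShareController, and the start and stop hooks are hypothetical names standing in for the real capture calls.

```dart
enum ShareState { idle, starting, sharing, stopping }

class ScreenShareController {
  ScreenShareController({required this.start, required this.stop});

  // Hypothetical hooks that would wrap the real start/stop capture logic.
  final Future<void> Function() start;
  final Future<void> Function() stop;

  ShareState state = ShareState.idle;

  /// Returns false when a transition is already running and the tap is ignored.
  Future<bool> toggle() async {
    if (state == ShareState.starting || state == ShareState.stopping) {
      return false;
    }
    if (state == ShareState.idle) {
      state = ShareState.starting;
      try {
        await start();
        state = ShareState.sharing;
      } catch (_) {
        state = ShareState.idle; // Roll back so the user can retry.
        rethrow;
      }
      return true;
    }
    // Currently sharing: stop, and return to idle even if stop() fails.
    state = ShareState.stopping;
    try {
      await stop();
    } finally {
      state = ShareState.idle;
    }
    return true;
  }
}

Future<void> main() async {
  final controller = ScreenShareController(
    start: () => Future<void>.delayed(const Duration(milliseconds: 10)),
    stop: () async {},
  );
  final first = controller.toggle(); // Begins the start transition.
  final second = await controller.toggle(); // Ignored while starting.
  await first;
  print('$second ${controller.state}'); // false ShareState.sharing
}
```

The same idea can be applied with a single in-progress boolean; the explicit states mainly make it easier to drive UI such as a loading indicator.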

Add screen sharing methods to the video call screen:

// Add these methods to _VideoCallScreenState class

Future<void> toggleScreenShare() async {

if (!isCallActive) {

_showSnackBar('Start a video call first');

return;

}

try {

if (isScreenSharing) {

await stopScreenSharing();

} else {

await startScreenSharing();

}

} catch (error) {

debugPrint('Error toggling screen share: $error');

_showErrorDialog('Screen sharing error: $error');

}

}

Future<void> startScreenSharing() async {

try {

// Request screen recording permission first

final hasPermission = await PermissionService.requestScreenRecordingPermission();

if (!hasPermission) {

_showErrorDialog('Screen recording permission is required');

return;

}

// Create screen capture source if not exists

var screenSource = ZegoService.instance.screenCaptureSource;

if (screenSource == null) {

screenSource = await ZegoService.instance.createScreenCaptureSource();

if (screenSource == null) {

throw Exception('Failed to create screen capture source');

}

}

// Switch video source to screen capture

await ZegoExpressEngine.instance.setVideoSource(

ZegoVideoSourceType.ScreenCapture,

instanceID: screenSource.getIndex(),

channel: ZegoPublishChannel.Main,

);

// Configure screen capture settings

final config = ZegoScreenCaptureConfig(

true, // Capture video

true, // Capture audio

1920, // Width

1080, // Height

);

// Start screen capture

await screenSource.startCapture(config: config);

// Update local preview to show screen content

await updateLocalPreviewForScreenShare();

setState(() {

isScreenSharing = true;

});

_showSnackBar('Screen sharing started');

} catch (error) {

debugPrint('Error starting screen sharing: $error');

_showErrorDialog('Failed to start screen sharing: $error');

}

}

Future<void> stopScreenSharing() async {

try {

final screenSource = ZegoService.instance.screenCaptureSource;

if (screenSource != null) {

// Stop screen capture

await screenSource.stopCapture();

}

// Switch back to camera source

await ZegoExpressEngine.instance.setVideoSource(

ZegoVideoSourceType.Camera,

channel: ZegoPublishChannel.Main,

);

// Restore camera preview

await restoreCameraPreview();

setState(() {

isScreenSharing = false;

});

_showSnackBar('Screen sharing stopped');

} catch (error) {

debugPrint('Error stopping screen sharing: $error');

_showErrorDialog('Failed to stop screen sharing: $error');

}

}

Future<void> updateLocalPreviewForScreenShare() async {

try {

// Stop current preview

await ZegoExpressEngine.instance.stopPreview();

if (localViewID != null) {

await ZegoExpressEngine.instance.destroyCanvasView(localViewID!);

}

// Create new preview for screen content

final widget = await ZegoExpressEngine.instance.createCanvasView((viewID) {

localViewID = viewID;

final canvas = ZegoCanvas(viewID, viewMode: ZegoViewMode.AspectFit);

ZegoExpressEngine.instance.startPreview(canvas: canvas);

});

if (mounted) {

setState(() {

localVideoWidget = widget;

});

}

} catch (error) {

debugPrint('Error updating preview for screen share: $error');

}

}

Future<void> restoreCameraPreview() async {

try {

// Stop current preview

await ZegoExpressEngine.instance.stopPreview();

if (localViewID != null) {

await ZegoExpressEngine.instance.destroyCanvasView(localViewID!);

}

// Restart camera preview

await startLocalPreview();

} catch (error) {

debugPrint('Error restoring camera preview: $error');

}

}

// Implement other control methods

void toggleMicrophone() async {

try {

await ZegoExpressEngine.instance.muteMicrophone(!isMicMuted);

setState(() {

isMicMuted = !isMicMuted;

});

_showSnackBar(isMicMuted ? 'Microphone muted' : 'Microphone unmuted');

} catch (error) {

debugPrint('Error toggling microphone: $error');

}

}

void toggleCamera() async {

try {

await ZegoExpressEngine.instance.enableCamera(!isCameraEnabled); // Pass the new state, mirroring the microphone toggle

setState(() {

isCameraEnabled = !isCameraEnabled;

});

_showSnackBar(isCameraEnabled ? 'Camera enabled' : 'Camera disabled');

} catch (error) {

debugPrint('Error toggling camera: $error');

}

}

void endCall() async {

try {

// Stop screen sharing if active

if (isScreenSharing) {

await stopScreenSharing();

}

// Stop local preview

await ZegoExpressEngine.instance.stopPreview();

// Stop publishing

await ZegoExpressEngine.instance.stopPublishingStream();

// Leave room

await ZegoExpressEngine.instance.logoutRoom(widget.roomId);

// Clean up views

if (localViewID != null) {

await ZegoExpressEngine.instance.destroyCanvasView(localViewID!);

}

if (remoteViewID != null) {

await ZegoExpressEngine.instance.destroyCanvasView(remoteViewID!);

}

// Navigate back

if (mounted) {

Navigator.of(context).pop();

}

} catch (error) {

debugPrint('Error ending call: $error');

if (mounted) Navigator.of(context).pop(); // Skip navigation when the widget is already disposed

}

}

@override

void dispose() {

// Clean up resources

endCall();

super.dispose();

}

Step 8. iOS Broadcast Extension Setup (Optional)

For iOS cross-app screen sharing, configure a Broadcast Upload Extension that enables system-wide screen recording beyond just your app.

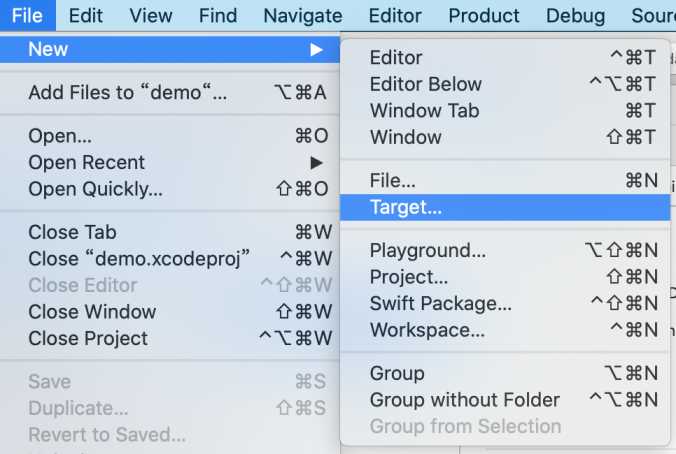

- Open your Flutter project’s iOS workspace in Xcode:

open ios/Runner.xcworkspace

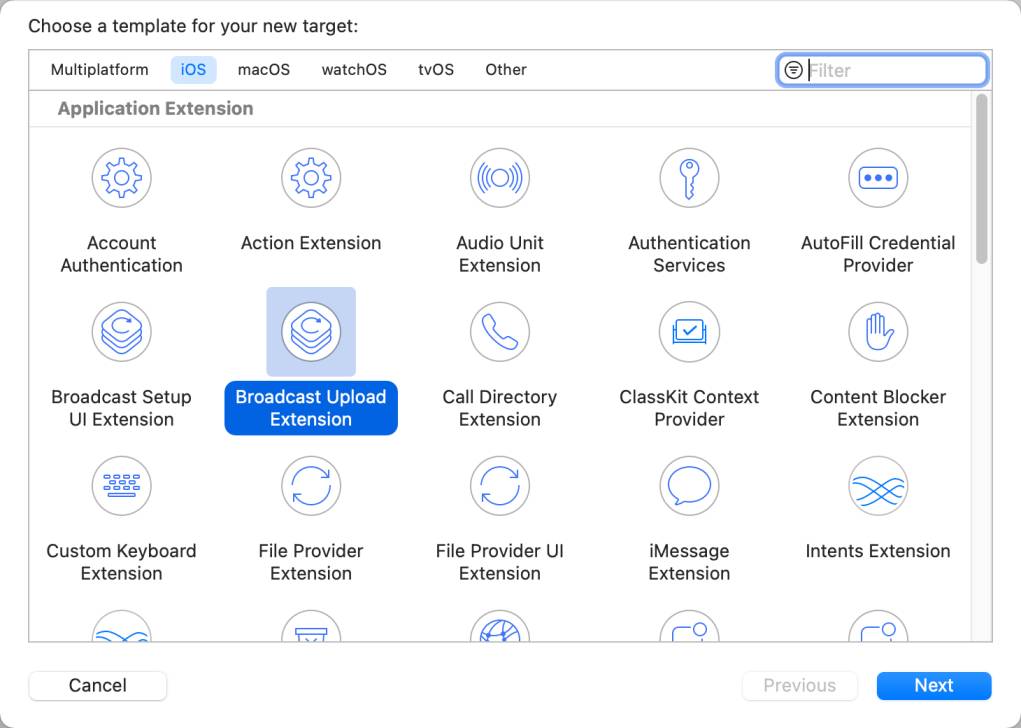

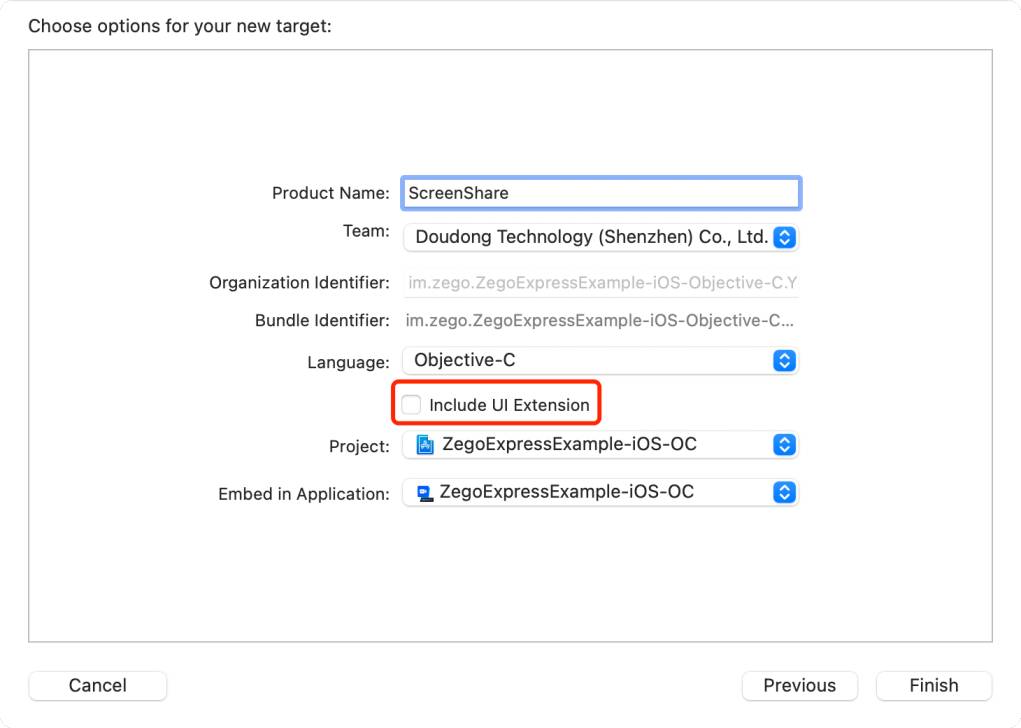

- Create a new Broadcast Upload Extension target:

In Xcode, select File → New → Target

Choose “Broadcast Upload Extension” from iOS templates

Set Product Name to “ScreenShareExtension”

Ensure “Include UI Extension” is unchecked

Click Finish

Configure the extension in ios/ScreenShareExtension/SampleHandler.swift:

import ReplayKit

import ZegoExpressEngine

class SampleHandler: RPBroadcastSampleHandler {

override func broadcastStarted(withSetupInfo setupInfo: [String : NSObject]?) {

// User has requested to start the broadcast

// Setup the broadcast session and initialize connection to main app

setupBroadcastSession()

}

override func broadcastPaused() {

// User has requested to pause the broadcast

pauseBroadcastSession()

}

override func broadcastResumed() {

// User has requested to resume the broadcast

resumeBroadcastSession()

}

override func broadcastFinished() {

// User has requested to finish the broadcast

finishBroadcastSession()

}

override func processSampleBuffer(_ sampleBuffer: CMSampleBuffer, with sampleBufferType: RPSampleBufferType) {

switch sampleBufferType {

case RPSampleBufferType.video:

// Handle video sample buffer

processVideoSampleBuffer(sampleBuffer)

case RPSampleBufferType.audioApp:

// Handle app audio sample buffer

processAudioSampleBuffer(sampleBuffer, type: .app)

case RPSampleBufferType.audioMic:

// Handle microphone audio sample buffer

processAudioSampleBuffer(sampleBuffer, type: .mic)

@unknown default:

break

}

}

private func setupBroadcastSession() {

// Initialize ZEGO ReplayKit extension

// This connects the extension to your main app

print("Broadcast session started")

}

private func pauseBroadcastSession() {

print("Broadcast session paused")

}

private func resumeBroadcastSession() {

print("Broadcast session resumed")

}

private func finishBroadcastSession() {

print("Broadcast session finished")

}

private func processVideoSampleBuffer(_ sampleBuffer: CMSampleBuffer) {

// Send video data to ZEGO engine through ReplayKit extension

// This enables system-wide screen sharing

}

private func processAudioSampleBuffer(_ sampleBuffer: CMSampleBuffer, type: AudioType) {

// Send audio data to ZEGO engine

// Supports both app audio and microphone audio

}

enum AudioType {

case app

case mic

}

}

Update the extension’s Info.plist to configure broadcast settings:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

<plist version="1.0">

<dict>

<key>CFBundleDisplayName</key>

<string>Screen Share</string>

<key>NSExtension</key>

<dict>

<key>NSExtensionPointIdentifier</key>

<string>com.apple.broadcast-services-upload</string>

<key>NSExtensionPrincipalClass</key>

<string>SampleHandler</string>

<key>RPBroadcastProcessMode</key>

<string>RPBroadcastProcessModeSampleBuffer</string>

</dict>

</dict>

</plist>

This extension enables iOS users to share their entire device screen, not just your app’s content.

Step 9. Event Handling and State Management

Implement comprehensive event handling for screen sharing events and manage application state transitions effectively.
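
The service code in this step leaves the UI notification as a stub and suggests streams or callbacks. As one possible shape for the stream option, the sketch below wraps a broadcast StreamController from dart:async. ScreenShareNotifier and its members are hypothetical names and are not part of the ZEGO SDK.

```dart
import 'dart:async';

class ScreenShareNotifier {
  // Broadcast so that several widgets can listen at the same time.
  final StreamController<bool> _controller =
      StreamController<bool>.broadcast();
  bool _isSharing = false;

  Stream<bool> get changes => _controller.stream;
  bool get isSharing => _isSharing;

  /// Publishes a new state; repeated identical values are dropped.
  void update(bool isSharing) {
    if (_isSharing == isSharing) return;
    _isSharing = isSharing;
    _controller.add(isSharing);
  }

  /// Closes the stream; update() must not be called afterwards.
  Future<void> dispose() => _controller.close();
}

Future<void> main() async {
  final notifier = ScreenShareNotifier();
  final seen = <bool>[];
  final subscription = notifier.changes.listen(seen.add);

  notifier.update(true);
  notifier.update(true); // Duplicate, not emitted.
  notifier.update(false);

  // Events are delivered asynchronously, so yield once before reading.
  await Future<void>.delayed(Duration.zero);
  print(seen); // [true, false]

  await subscription.cancel();
  await notifier.dispose();
}
```

A widget would subscribe in initState, call setState from the listener, and cancel the subscription in dispose.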

Add screen sharing event handlers to your ZEGO service:

// Add to zego_service.dart

void setupScreenSharingEventHandlers() {

// Handle screen capture start events (Android only)

ZegoExpressEngine.onMobileScreenCaptureStart = () {

debugPrint('Screen capture started successfully');

_notifyScreenSharingStateChanged(true);

};

// Handle screen capture exceptions (Android only)

ZegoExpressEngine.onMobileScreenCaptureExceptionOccurred = (exceptionType) {

debugPrint('Screen capture exception: $exceptionType');

_handleScreenCaptureException(exceptionType);

};

// Log video object segmentation state changes (this callback does not report video source switches)

ZegoExpressEngine.onVideoObjectSegmentationStateChanged = (state, channel, errorCode) {

debugPrint('Video object segmentation state changed: $state, error: $errorCode');

};

}

void _notifyScreenSharingStateChanged(bool isSharing) {

// Notify UI about screen sharing state changes

// This can be implemented using streams or callbacks

}

void _handleScreenCaptureException(ZegoScreenCaptureSourceExceptionType exceptionType) {

String errorMessage = 'Screen capture error occurred';

switch (exceptionType) {

case ZegoScreenCaptureSourceExceptionType.AuthDenied:

errorMessage = 'Screen recording permission denied';

break;

case ZegoScreenCaptureSourceExceptionType.SystemNotSupport:

errorMessage = 'Device does not support screen recording';

break;

case ZegoScreenCaptureSourceExceptionType.SourceNotSupport:

errorMessage = 'Screen capture source not supported';

break;

case ZegoScreenCaptureSourceExceptionType.Unknown:

default:

errorMessage = 'Unknown screen capture error';

break;

}

debugPrint('Screen capture exception: $errorMessage');

// Handle the error appropriately in your app

}

void clearEventHandlers() {

ZegoExpressEngine.onMobileScreenCaptureStart = null;

ZegoExpressEngine.onMobileScreenCaptureExceptionOccurred = null;

ZegoExpressEngine.onVideoObjectSegmentationStateChanged = null;

}

Create a state management solution for screen sharing in lib/models/call_state.dart:

import 'package:flutter/foundation.dart';

class CallState extends ChangeNotifier {

bool _isCallActive = false;

bool _isScreenSharing = false;

bool _isMicMuted = false;

bool _isCameraEnabled = true;

final List<String> _participants = [];

String? _currentStreamId;

// Getters

bool get isCallActive => _isCallActive;

bool get isScreenSharing => _isScreenSharing;

bool get isMicMuted => _isMicMuted;

bool get isCameraEnabled => _isCameraEnabled;

List<String> get participants => List.unmodifiable(_participants);

String? get currentStreamId => _currentStreamId;

// State updates

void updateCallState(bool isActive) {

if (_isCallActive != isActive) {

_isCallActive = isActive;

notifyListeners();

}

}

void updateScreenSharingState(bool isSharing) {

if (_isScreenSharing != isSharing) {

_isScreenSharing = isSharing;

notifyListeners();

}

}

void updateMicState(bool isMuted) {

if (_isMicMuted != isMuted) {

_isMicMuted = isMuted;

notifyListeners();

}

}

void updateCameraState(bool isEnabled) {

if (_isCameraEnabled != isEnabled) {

_isCameraEnabled = isEnabled;

notifyListeners();

}

}

void addParticipant(String userId) {

if (!_participants.contains(userId)) {

_participants.add(userId);

notifyListeners();

}

}

void removeParticipant(String userId) {

if (_participants.remove(userId)) {

notifyListeners();

}

}

void updateCurrentStream(String? streamId) {

if (_currentStreamId != streamId) {

_currentStreamId = streamId;

notifyListeners();

}

}

void reset() {

_isCallActive = false;

_isScreenSharing = false;

_isMicMuted = false;

_isCameraEnabled = true;

_participants.clear();

_currentStreamId = null;

notifyListeners();

}

}

Step 10. Testing

Test the implementation by following these validation steps:

- Basic Video Call Testing: Install the app on two devices, enter the same Room ID with different User IDs, and verify video and audio communication works properly.

- Screen Sharing Validation: Join a call, tap the screen share button, grant permissions, and confirm that screen content appears on the remote device.

- Control Testing: Test microphone muting, camera toggling, and screen sharing start/stop during active calls.

- Permission Handling: Deny permissions initially, then verify the app handles permission requests gracefully and provides helpful feedback.

- Multi-platform Testing: Test on both iOS and Android devices to ensure cross-platform compatibility.
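Alongside these manual checks, the state logic can be exercised without a device. The sketch below is a minimal example that assumes a trimmed copy of CallState; SimpleNotifier is a hypothetical stand-in for Flutter's ChangeNotifier so the file runs with plain Dart. It checks that listeners are notified only when the screen sharing flag actually changes.

```dart
/// Hypothetical stand-in for Flutter's ChangeNotifier so this sketch runs
/// with plain `dart run`; in the app, CallState extends ChangeNotifier.
class SimpleNotifier {
  final List<void Function()> _listeners = [];

  void addListener(void Function() listener) => _listeners.add(listener);

  void notifyListeners() {
    // Iterate over a copy so listeners may register others while running.
    for (final listener in List.of(_listeners)) {
      listener();
    }
  }
}

/// Trimmed copy of the screen sharing part of CallState.
class CallStateSketch extends SimpleNotifier {
  bool _isScreenSharing = false;

  bool get isScreenSharing => _isScreenSharing;

  void updateScreenSharingState(bool isSharing) {
    if (_isScreenSharing != isSharing) {
      _isScreenSharing = isSharing;
      notifyListeners();
    }
  }
}

void main() {
  final state = CallStateSketch();
  var notifications = 0;
  state.addListener(() => notifications++);

  state.updateScreenSharingState(true); // change: notifies
  state.updateScreenSharingState(true); // no change: stays silent
  state.updateScreenSharingState(false); // change: notifies

  if (notifications != 2 || state.isScreenSharing) {
    throw StateError('unexpected result: $notifications notifications');
  }
  print('screen sharing state checks passed');
}
```

The same pattern extends to the microphone, camera, and participant updates, and it ports directly to a flutter_test case against the real CallState class.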

Conclusion

Screen sharing now works in your Flutter video chat app. Users can share their screens during calls and switch back to camera view easily. The Dart code is shared between iOS and Android; the only platform-specific piece is the iOS broadcast extension that enables sharing the entire device screen.

This turns your video calling app into a tool for working together. Remote teams can review documents together, and teachers can share lesson content with students.

You built something that many Flutter developers find hard to do. Managing video sources and permissions across platforms is tricky, but your app handles this smoothly. Users get a professional experience that matches the best video calling apps.

FAQ

Q1: Can Flutter support screen sharing for both Android and iOS?

Yes. Android and iOS expose different native screen capture APIs, so a from-scratch implementation needs platform channels for each platform. An SDK such as ZEGOCLOUD’s wraps both behind a single Dart API.

Q2: Does iOS allow full screen sharing in Flutter apps?

Not directly. iOS screen sharing typically uses ReplayKit, and sharing the entire device screen (rather than just your app’s content) requires a separate broadcast extension.

Q3: What’s the best way to send screen data during a video call?

Use a video call SDK like ZEGOCLOUD’s, which supports custom video sources. This lets you capture the screen and send it as a video stream to other participants in the call.
