Every day, users post millions of comments and uploads across online platforms. Content moderation keeps this massive stream of user-generated content safe and within platform policy. It also lets users communicate with confidence and helps protect brand reputations. If you want to learn more about content moderation, this guide is for you.

What is Content Moderation?

Content moderation is the process of monitoring and reviewing user-generated content on online platforms. It helps ensure that this content complies with platform policies, local regulations, and legal standards. To do so, it identifies, manages, and removes harmful content to keep online spaces safe.

Most platforms combine human and machine moderation. Used together, these approaches protect users and brand reputation and help avoid legal disputes by blocking content such as nudity.

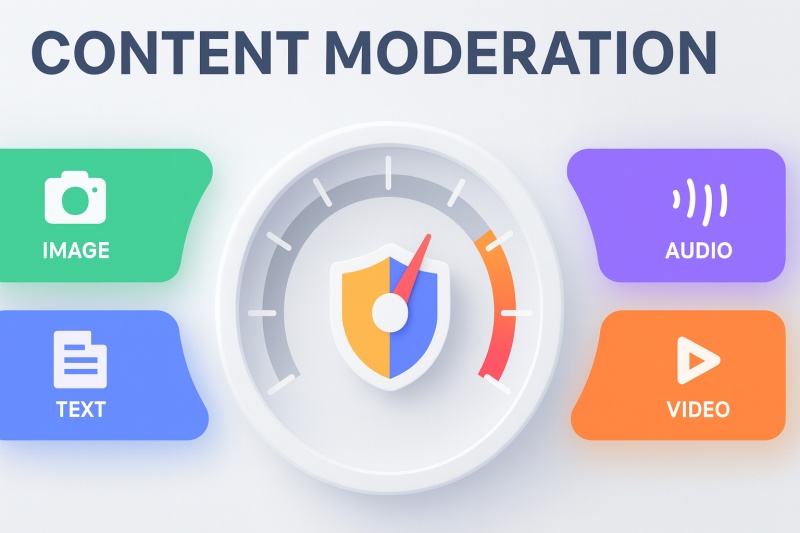

Types of Content Moderation

When people think about content moderation, they often imagine text-based messages. However, user-generated content today also includes images, videos, and audio, each requiring different moderation approaches. Let’s take a closer look at how each type works and the technologies behind them.

1. Text Moderation

Text remains one of the most common ways users communicate online, through forums, posts, comments, private messages, and group chats. AI and machine learning now make it possible to automatically analyze large volumes of text to detect policy violations, hate speech, or offensive language. These systems can scan text across different languages, styles, and contexts according to platform rules, making text moderation fast and scalable.
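Production text moderation relies mostly on trained machine-learning classifiers, but the basic flow (scan a message, match it against policy categories, decide whether to allow it) can be shown with a minimal rule-based sketch. The `BLOCKLIST` terms and the `moderate_text` function below are hypothetical placeholders, not a real policy list or any vendor's API.

```python
import re
from dataclasses import dataclass, field

# Hypothetical blocklist for illustration only. A real platform would load
# policy terms per language and category and lean mainly on ML classifiers.
BLOCKLIST = {
    "spam": ["buy followers", "free crypto"],
    "harassment": ["idiot", "loser"],
}


@dataclass
class TextResult:
    allowed: bool
    categories: list[str] = field(default_factory=list)


def moderate_text(message: str) -> TextResult:
    """Return the policy categories a message appears to violate."""
    lowered = message.lower()
    hits = []
    for category, terms in BLOCKLIST.items():
        for term in terms:
            # Word boundaries keep a term from matching inside a longer word.
            if re.search(rf"\b{re.escape(term)}\b", lowered):
                hits.append(category)
                break  # one hit per category is enough
    return TextResult(allowed=not hits, categories=hits)


print(moderate_text("Get free crypto now!"))
print(moderate_text("See you at the meetup"))
```

A keyword list like this is fast but blind to tone, sarcasm, and slang, which is why it is usually only a first layer in front of ML models and human reviewers.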

2. Image Moderation

Detecting inappropriate images may sound simple, but it involves many subtle challenges. For example, distinguishing between explicit photos and artistic works requires contextual understanding, and cultural differences can influence what is considered inappropriate. Effective image moderation combines AI-powered computer vision with human review and user reporting. Advanced models now integrate Optical Character Recognition (OCR) to identify harmful text or symbols embedded within images, such as hate speech or personal data.

3. Video Moderation

Video content is more complex to review because it can contain inappropriate visuals, audio, or text over long durations. A single video might contain only a few harmful frames, which makes manual review time-consuming. Modern AI-driven systems can automatically scan frames, extract on-screen text and subtitles, and analyze audio to identify policy-violating material. This approach helps protect users from harmful or disturbing user-generated videos while maintaining community trust and brand integrity.
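One common way to scan a long video is to sample frames at a fixed interval and score each sampled frame as an image. The sketch below assumes a hypothetical `classify_frame` callable that stands in for a computer-vision model and returns a risk score for the frame at a given timestamp; actually decoding frames from a video file is left out.

```python
from typing import Callable


def sample_timestamps(duration_s: float, interval_s: float) -> list[float]:
    """Timestamps (in seconds) at which a frame is grabbed for analysis."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    stamps = []
    i = 0
    # Multiply instead of accumulating to avoid floating-point drift.
    while i * interval_s < duration_s:
        stamps.append(i * interval_s)
        i += 1
    return stamps


def flag_video(
    duration_s: float,
    interval_s: float,
    classify_frame: Callable[[float], float],  # hypothetical vision model
    threshold: float = 0.8,
) -> list[float]:
    """Return the sampled timestamps whose frame risk meets the threshold."""
    return [
        t for t in sample_timestamps(duration_s, interval_s)
        if classify_frame(t) >= threshold
    ]


# Stand-in classifier: pretend frames between 4 s and 6 s are harmful.
def fake_classifier(t: float) -> float:
    return 0.95 if 4.0 <= t <= 6.0 else 0.1


print(flag_video(10.0, 2.0, fake_classifier))  # [4.0, 6.0]
```

The interval is the main trade-off: a shorter interval is more likely to catch a brief harmful segment but costs more model calls per video.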

4. Audio Moderation

Audio moderation focuses on monitoring voice messages, live streams, and podcasts to detect harassment, hate speech, or sensitive information. Technologies such as Automatic Speech Recognition (ASR) and Natural Language Processing (NLP) convert speech into text for analysis, while human reviewers handle cases that require deeper contextual understanding. For example, AI might miss sarcasm or mocking tones that human reviewers can interpret correctly. This combination helps maintain a safe and respectful audio communication environment.
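The ASR-then-NLP flow can be sketched as a small pipeline. The `transcribe` and `classify_text` callables are hypothetical stand-ins for a speech-recognition service and a text classifier, and the thresholds are illustrative assumptions. Low-confidence transcripts go straight to a human reviewer, since a misheard phrase or a sarcastic tone is exactly what automation tends to miss.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Transcript:
    text: str
    confidence: float  # ASR confidence in [0, 1]


def moderate_audio(
    clip: bytes,
    transcribe: Callable[[bytes], Transcript],  # hypothetical ASR service
    classify_text: Callable[[str], float],      # hypothetical NLP risk model
    block_at: float = 0.9,
    review_at: float = 0.5,
    min_asr_confidence: float = 0.6,
) -> str:
    """Return 'block', 'human_review', or 'allow' for one audio clip."""
    transcript = transcribe(clip)
    # An unreliable transcript can't be judged automatically; escalate it.
    if transcript.confidence < min_asr_confidence:
        return "human_review"
    risk = classify_text(transcript.text)
    if risk >= block_at:
        return "block"
    if risk >= review_at:
        return "human_review"
    return "allow"


# Stand-ins for demonstration: the "audio" here is just UTF-8 text.
def fake_asr(clip: bytes) -> Transcript:
    return Transcript(text=clip.decode("utf-8"), confidence=0.95)


def fake_text_risk(text: str) -> float:
    return 0.97 if "threat" in text else 0.05


print(moderate_audio(b"this is a threat", fake_asr, fake_text_risk))  # block
```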

5. Automated Moderation

Modern moderation systems integrate AI tools across all content types—text, image, video, and audio—to automatically flag, filter, and block inappropriate material. These automated systems enhance efficiency and scalability, reducing manual workload while maintaining high standards of trust and safety for users.

How Does Content Moderation Work in Real-Time Communication Platforms?

If you are evaluating content moderation solutions, it helps to understand how the process works. This section explains the typical filtering and review flow.

- Content Filter: AI analyzes messages, images, and audio as soon as they are sent, using natural language processing, computer vision, and contextual analysis to detect hate speech, harmful imagery, and other violations.

- Automated Actions: Content classified as high risk is blocked or flagged automatically, while borderline content is sent to human moderators. The system also keeps track of repeat offenders.

- Escalation to Human Review: When the AI is uncertain, moderators review the content manually and decide what action to take.
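The three steps above can be sketched as a simple router. It assumes an upstream, hypothetical classifier has already produced a risk score between 0 and 1; the `ModerationRouter` class, its thresholds, and the strike limit are illustrative assumptions rather than any product's actual logic.

```python
from collections import Counter
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    HUMAN_REVIEW = "human_review"


class ModerationRouter:
    """Route content by AI risk score and count violations per user."""

    def __init__(self, block_at: float = 0.9, review_at: float = 0.5,
                 strike_limit: int = 3) -> None:
        self.block_at = block_at
        self.review_at = review_at
        self.strike_limit = strike_limit
        self.strikes: Counter[str] = Counter()
        self.review_queue: list[tuple[str, str]] = []

    def route(self, user_id: str, content: str, risk: float) -> Action:
        if risk >= self.block_at:
            # High risk: block automatically and record a strike.
            self.strikes[user_id] += 1
            return Action.BLOCK
        if risk >= self.review_at:
            # Borderline: a human moderator makes the final decision.
            self.review_queue.append((user_id, content))
            return Action.HUMAN_REVIEW
        return Action.ALLOW

    def is_repeat_offender(self, user_id: str) -> bool:
        return self.strikes[user_id] >= self.strike_limit


router = ModerationRouter()
print(router.route("u1", "hateful message", 0.97))  # Action.BLOCK
print(router.route("u2", "ambiguous joke", 0.6))    # Action.HUMAN_REVIEW
```

Keeping the thresholds as parameters lets a platform tune how much borderline content reaches its moderators without changing the routing logic.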

Best Practices for Implementing Content Moderation

Effective social media content moderation depends on following a few proven practices. This section lists practices that help platforms manage user-generated content and keep users safe.

- Make Simple Community Rules: Spell out which material is acceptable and which is not. Display the guidelines prominently and require users to accept them, so everyone understands the consequences of non-compliance.

- Apply Multi-Layered Moderation: Combine AI-based automation with human moderation. AI filters content efficiently, while human judgment resolves context-sensitive cases. This hybrid approach handles large volumes of material with precision.

- Empower Users to Report Violations: Let users flag inappropriate content to extend moderation reach. Explain the reporting process clearly and review flagged items quickly to keep offensive content from spreading.

- Continuously Monitor, Review, and Update Methods: Your moderation system must evolve, so track its performance, update rules, and reduce bias. Ongoing optimization of AI filters and continued moderator training help sustain effective moderation.

- Support and Train Moderators: Moderators need thorough training on policies and cultural sensitivity, as well as mental health support. Keep them informed about new trends and build a healthy, collaborative work environment.
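"Track the performance" can be made concrete with two standard metrics computed from human-reviewed samples: precision (how many AI flags were real violations) and recall (how many real violations the AI caught). The sketch below assumes a hypothetical audit where humans check both flagged and unflagged items; without unflagged items in the sample, missed violations (false negatives) cannot be measured.

```python
from dataclasses import dataclass


@dataclass
class ReviewedItem:
    ai_flagged: bool       # did the automated filter flag this item?
    human_violation: bool  # did a human reviewer confirm a policy violation?


@dataclass
class FilterMetrics:
    precision: float  # share of AI flags that were real violations
    recall: float     # share of real violations the AI caught
    false_positives: int
    false_negatives: int


def filter_metrics(items: list[ReviewedItem]) -> FilterMetrics:
    tp = sum(i.ai_flagged and i.human_violation for i in items)
    fp = sum(i.ai_flagged and not i.human_violation for i in items)
    fn = sum(not i.ai_flagged and i.human_violation for i in items)
    return FilterMetrics(
        precision=tp / (tp + fp) if tp + fp else 0.0,
        recall=tp / (tp + fn) if tp + fn else 0.0,
        false_positives=fp,
        false_negatives=fn,
    )


# Hypothetical audit sample: flagged and unflagged items, each checked by a human.
sample = [
    ReviewedItem(ai_flagged=True, human_violation=True),
    ReviewedItem(ai_flagged=True, human_violation=False),   # false positive
    ReviewedItem(ai_flagged=False, human_violation=True),   # false negative
    ReviewedItem(ai_flagged=True, human_violation=True),
    ReviewedItem(ai_flagged=False, human_violation=False),
]
print(filter_metrics(sample))
```

In this sample, precision and recall both come out to 2/3. Watching these numbers over time shows whether rule and model updates are actually helping.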

Challenges in Content Moderation

Content moderation is essential for platforms and campaigns built on user-generated content, but it comes with its own challenges. The most common ones are listed below:

- Content Volume: When the volume of content becomes too heavy for human reviewers, organizations turn to AI moderation. However, even AI systems can be overwhelmed by sheer volume and may fail to identify some content.

- Context and Nuance: AI moderation tools do not always understand tone, sarcasm, or cultural slang. As a result, they can produce false positives (flagging acceptable content) or false negatives (missing harmful content).

- Evolving Evasion Tactics: People who post harmful content continually devise new methods to evade detection. That is why companies need to update their moderation systems continuously to identify and counter new strategies.

- Fairness: AI moderation systems are trained on human-labeled data, which can carry human biases into their results. This may occasionally lead to unfair treatment of some users.

- Psychological Impact: People who review flagged content are often exposed to disturbing images or videos. This can harm their mental health and cause stress.
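A common first countermeasure against simple evasion tricks (look-alike characters, spaced-out letters, stretched words) is to normalize text before matching it against filters. The substitution table and patterns below are illustrative assumptions, and the normalized copy is meant only for matching, never for display. Determined evaders adapt quickly, so rules like these need ongoing maintenance.

```python
import re
import unicodedata

# Hypothetical map of look-alike characters often used to dodge filters.
LOOKALIKES = str.maketrans({"@": "a", "4": "a", "3": "e", "1": "i",
                            "0": "o", "$": "s", "5": "s", "7": "t"})

# Single letters separated by spaces or punctuation, e.g. "s p a m" or "s.p.a.m".
SPACED_LETTERS = re.compile(r"\b(?:[a-z][\s.\-_*]){2,}[a-z]\b")
SEPARATORS = re.compile(r"[\s.\-_*]")
# Any character repeated three or more times in a row.
REPEATS = re.compile(r"(.)\1{2,}")


def normalize(text: str) -> str:
    """Return a canonical copy of text for filter matching (not for display)."""
    # NFKC folds full-width and other compatibility characters to plain forms.
    text = unicodedata.normalize("NFKC", text).lower()
    text = text.translate(LOOKALIKES)
    # Rejoin letters that were spaced out to break up a word.
    text = SPACED_LETTERS.sub(lambda m: SEPARATORS.sub("", m.group()), text)
    # Shrink stretched words such as "spaaaam" to "spam".
    return REPEATS.sub(r"\1", text)


print(normalize("Fr33 $P4M"))  # free spam
```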

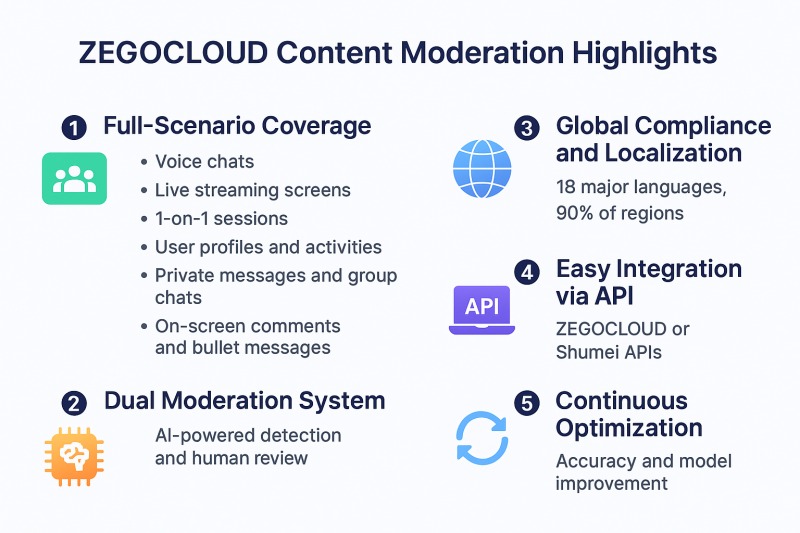

ZEGOCLOUD: AI-Powered Global Content Moderation for Real-Time Communication

ZEGOCLOUD provides a one-stop, full-spectrum content moderation solution designed for real-time communication scenarios such as live streaming, 1-on-1 chats, voice rooms, private messaging, user profiles, comments, and community feeds. The system combines advanced AI-driven moderation with human review, ensuring both scalability and contextual accuracy across all content types.

ZEGOCLOUD partners with global providers like Shumei Technology to deliver integrated API solutions that support seamless moderation of text, image, audio, and video content. The platform’s intelligent moderation engine automatically detects and filters violations such as explicit imagery, political sensitivity, hate speech, violence, and spam. It also includes business-level capabilities like face and logo recognition to assist with brand safety and compliance.

Built upon years of industry experience and a deep understanding of global content policies, ZEGOCLOUD has developed a comprehensive moderation label system covering more than 1,800 content risk categories across 18 major languages, with localized compliance standards for over 30 countries. This includes region-specific filters for religious sensitivity, political context, and racial representation, helping businesses remain compliant with local regulations while maintaining user trust.

To deliver higher accuracy, ZEGOCLOUD’s moderation solution combines machine intelligence with human expertise. The AI moderation platform efficiently handles massive data in real time, while professional reviewers ensure nuanced judgment in complex or ambiguous cases. This hybrid approach not only improves detection accuracy but also optimizes review efficiency through continuous feedback and model retraining.

ZEGOCLOUD’s full-stack solution enables developers to integrate moderation directly into their apps via simple APIs, providing automated detection, configurable review workflows, and analytics dashboards. With global coverage, multi-language support, and 24/7 reliability, ZEGOCLOUD helps platforms maintain a safe, compliant, and positive user experience across regions.

Conclusion

Content moderation keeps online platforms safe, respectful, and compliant with community rules and laws. From social media to live streaming, following best practices protects users and builds trust. For scalable, real-time moderation, ZEGOCLOUD provides a reliable and powerful option.

FAQ

Q1: What are the common types of content moderation?

Content moderation typically covers text, image, video, audio, and live stream content. Moderation can be automated, manual, or a hybrid of both. In most modern platforms, AI-powered tools handle large-scale detection, while human reviewers ensure accuracy and proper context.

Q2: Why is real-time content moderation crucial for RTC applications?

In real-time environments like live streams or chat rooms, inappropriate content can appear and spread instantly, potentially harming users and damaging brand reputation. AI-driven real-time moderation helps detect and filter harmful text, images, and audio before they reach a wider audience, ensuring user safety and platform credibility.

Q3: What does content moderation for streaming media include?

Streaming moderation covers both audio and video content.

- Audio moderation: Uses metadata and AI analysis to identify harmful language or sensitive topics, for both uploaded audio files and real-time streams.

- Video moderation: Involves capturing frames at intervals and analyzing them as images, with options for both uploaded videos and live streams.
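For audio, a stream or long file is typically cut into short segments before analysis. The sketch below computes overlapping analysis windows; the window and overlap sizes are illustrative assumptions. The overlap means a word shorter than the overlap that straddles one window boundary still appears whole in the next window. For a live stream, the same windows would be cut as audio arrives rather than from a known total length.

```python
def audio_windows(total_ms: int, window_ms: int,
                  overlap_ms: int) -> list[tuple[int, int]]:
    """Split total_ms of audio into overlapping [start, end) windows in ms."""
    if not 0 <= overlap_ms < window_ms:
        raise ValueError("overlap_ms must be in [0, window_ms)")
    step = window_ms - overlap_ms
    windows = []
    start = 0
    while start < total_ms:
        windows.append((start, min(start + window_ms, total_ms)))
        if start + window_ms >= total_ms:
            break  # this window already reaches the end of the audio
        start += step
    return windows


# 25 s of audio, 10 s windows, 2 s overlap.
print(audio_windows(25_000, 10_000, 2_000))
# [(0, 10000), (8000, 18000), (16000, 25000)]
```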

Q4: How does AI content moderation work in real-time chat environments?

When a user sends a message, it is first processed by the AI moderation service, which uses machine learning models to classify the content in real time. Based on the analysis, the system can automatically block, flag for review, or warn the user depending on the platform’s policy. This allows developers to maintain a safe communication experience without disrupting real-time interactions.
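The "block, flag for review, or warn" decision can be expressed as a per-category policy table. This sketch assumes a hypothetical classifier that returns a score per category; the category names, thresholds, and the `decide` function are illustrative, not ZEGOCLOUD's API or any specific platform's rules.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    FLAG = "flag_for_review"
    BLOCK = "block"


# Hypothetical policy: per category, (threshold, action) rules sorted from
# the highest threshold down. Real values come from the platform's own rules.
POLICY: dict[str, list[tuple[float, Action]]] = {
    "hate_speech": [(0.9, Action.BLOCK), (0.6, Action.FLAG)],
    "profanity": [(0.95, Action.FLAG), (0.7, Action.WARN)],
    "spam": [(0.8, Action.BLOCK)],
}

# Actions ordered from least to most severe.
SEVERITY = [Action.ALLOW, Action.WARN, Action.FLAG, Action.BLOCK]


def decide(scores: dict[str, float]) -> Action:
    """Pick the most severe action triggered by any category score."""
    result = Action.ALLOW
    for category, score in scores.items():
        for threshold, action in POLICY.get(category, []):
            if score >= threshold:
                if SEVERITY.index(action) > SEVERITY.index(result):
                    result = action
                break  # rules are sorted, so the first hit is the strongest
    return result


print(decide({"profanity": 0.75}))  # Action.WARN
```

Keeping the policy as data rather than code makes it easier to adjust thresholds per market or product without redeploying the moderation logic.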
