Building an AI voice agent can be harder than it looks. Many developers spend weeks combining speech recognition, natural language processing, and voice synthesis just to make basic voice features work. Each service comes with its own authentication steps, audio handling challenges, and hours of debugging, which quickly makes the process overwhelming.

Instead of dealing with all that complexity, imagine deploying a production-ready AI voice agent that understands speech, processes requests intelligently, and responds with natural, human-like voices in just minutes.

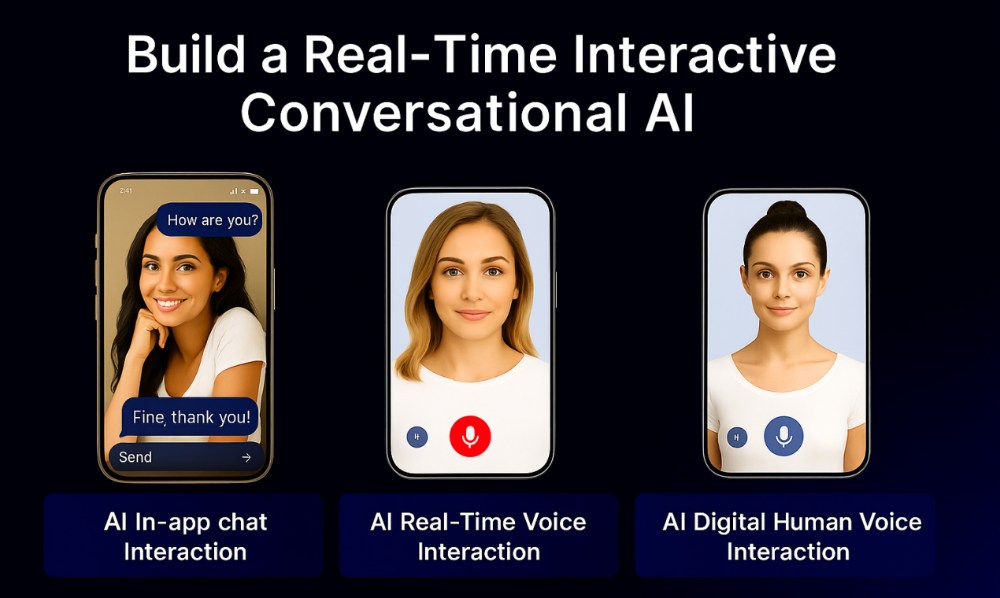

ZEGOCLOUD conversational AI provides a unified platform that manages the entire voice pipeline for you. There is no need for audio engineering, service orchestration, or infrastructure maintenance. With simple APIs, your application can turn voice input into intelligent, real-time responses.

This tutorial will guide you through the process. By the end, you will have built an AI voice agent that feels natural to interact with, delivers contextual replies instantly, and enhances user experiences across different scenarios.

How to Build an AI-Powered Voice Agent in 10 Minutes

ZEGOCLOUD built voice agents on top of their proven communication platform. The same technology that powers millions of voice calls now includes AI agents with speech recognition, language processing, and voice synthesis built right in.

This means you get years of audio optimization and network reliability that already work in production. Instead of experimental voice AI services, you're using enterprise-grade communication tools that now include AI integrations.

Your voice agent runs on established WebRTC protocols, tested audio codecs, and streaming technology that handles critical business communications worldwide. You get AI conversation capabilities through a foundation that already works reliably at scale.

Prerequisites

Before building your AI voice agent, ensure you have these components ready:

- ZEGOCLOUD developer account with a valid AppID and ServerSecret (sign up on the ZEGOCLOUD site if you do not have one yet).

- Any compatible LLM API key for intelligent conversation processing and contextual response generation.

- Node.js 18+ installed locally with npm for package management and development servers.

- Testing environment with microphone access since voice agents require real audio hardware capabilities.

- Basic familiarity with React hooks and Express.js patterns for following the implementation steps.
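If you want to confirm the Node.js requirement programmatically, a small check along these lines may help. It is only a sketch: `meetsNodeRequirement` is a hypothetical helper, not part of any SDK, and it looks at the major version number only.

```javascript
// Hypothetical helper: returns true when the given version string has a
// major version at or above the minimum (18 by default, per the list above).
function meetsNodeRequirement(version, minMajor = 18) {
  const major = Number.parseInt(String(version).replace(/^v/, '').split('.')[0], 10);
  return Number.isInteger(major) && major >= minMajor;
}

// process.versions.node holds the running Node.js version without the "v" prefix.
if (!meetsNodeRequirement(process.versions.node)) {
  console.warn('Node.js 18 or newer is expected for this tutorial');
}
```

Running `node --version` in a terminal gives the same information without any code.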

1. Project Setup and Configuration

Begin by establishing the project structure that separates voice processing logic from user interface concerns. This architecture enables independent development and deployment of voice agent components.

mkdir ai-voice-agent

cd ai-voice-agent

mkdir voice-server

Navigate to the server directory and install the core dependencies for voice agent functionality:

cd voice-server

npm init -y

npm install express cors dotenv axios

npm install --save-dev @types/express @types/cors @types/node typescript tsx nodemon

These packages provide HTTP server capabilities, cross-origin request handling, environment configuration, and external API communication. The cryptographic functions used for secure token generation come from Node's built-in crypto module, so no separate package is needed.

Configure your environment variables by creating voice-server/.env:

# ZEGOCLOUD Voice Configuration

ZEGO_APP_ID=your_app_id_here

ZEGO_SERVER_SECRET=your_server_secret_here

ZEGO_API_BASE_URL=https://aigc-aiagent-api.zegotech.cn

# AI Language Model Settings

LLM_URL=https://api.openai.com/v1/chat/completions

LLM_API_KEY=your_openai_api_key_here

LLM_MODEL=gpt-4o-mini

# Voice Agent Server

PORT=3001

NODE_ENV=development

2. Secure Voice Authentication System

Implement the authentication infrastructure that generates secure tokens for voice agent sessions.

This system ensures only authorized users can access voice processing capabilities.
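To make the token format in this section easier to follow, the sketch below packs and then unpacks an envelope with the same layout the generator uses: an 8-byte expiry, a length-prefixed nonce, a length-prefixed AES-GCM ciphertext with its auth tag appended, and a one-byte mode flag. The names `DEMO_SECRET`, `packEnvelope`, and `unpackEnvelope` are hypothetical and exist only for illustration; the sketch assumes a 32-character secret and is not a description of how ZEGOCLOUD validates tokens on its side.

```javascript
const crypto = require('crypto');

// Hypothetical 32-character secret; a real ServerSecret comes from your account.
const DEMO_SECRET = '0123456789abcdef0123456789abcdef';

// Layout: expire (8) | nonce length (2) | nonce | body length (2) | ciphertext + tag | mode (1)
function packEnvelope(payload, secret) {
  const nonce = crypto.randomBytes(12);
  const cipher = crypto.createCipheriv('aes-256-gcm', Buffer.from(secret, 'utf8'), nonce);
  const ciphertext = Buffer.concat([cipher.update(JSON.stringify(payload), 'utf8'), cipher.final()]);
  const body = Buffer.concat([ciphertext, cipher.getAuthTag()]); // tag is 16 bytes by default

  const expire = Buffer.alloc(8);
  expire.writeBigUInt64BE(BigInt(payload.expire), 0);
  const nonceLen = Buffer.alloc(2);
  nonceLen.writeUInt16BE(nonce.length, 0);
  const bodyLen = Buffer.alloc(2);
  bodyLen.writeUInt16BE(body.length, 0);

  const raw = Buffer.concat([expire, nonceLen, nonce, bodyLen, body, Buffer.from([1])]);
  return '04' + raw.toString('base64');
}

function unpackEnvelope(token, secret) {
  const raw = Buffer.from(token.slice(2), 'base64');
  const expire = Number(raw.readBigUInt64BE(0));
  const nonceLen = raw.readUInt16BE(8);
  const nonce = raw.subarray(10, 10 + nonceLen);
  const bodyLen = raw.readUInt16BE(10 + nonceLen);
  const bodyStart = 12 + nonceLen;
  const body = raw.subarray(bodyStart, bodyStart + bodyLen);

  // The last 16 bytes of the body are the GCM auth tag.
  const tag = body.subarray(body.length - 16);
  const ciphertext = body.subarray(0, body.length - 16);

  const decipher = crypto.createDecipheriv('aes-256-gcm', Buffer.from(secret, 'utf8'), nonce);
  decipher.setAuthTag(tag);
  const plaintext = Buffer.concat([decipher.update(ciphertext), decipher.final()]).toString('utf8');
  return { version: token.slice(0, 2), expire, payload: JSON.parse(plaintext) };
}

const demoToken = packEnvelope({ user_id: 'demo_user', expire: 2000000000 }, DEMO_SECRET);
const restored = unpackEnvelope(demoToken, DEMO_SECRET);
console.log(restored.version, restored.expire, restored.payload.user_id);
```

Because GCM authenticates the ciphertext, `decipher.final()` throws if the token was altered or the wrong secret is used, which is what makes a round trip like this useful as a local sanity check.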

Create the token generation utility at voice-server/auth/tokenGenerator.js:

const crypto = require('crypto');

class VoiceTokenGenerator {

constructor(appId, serverSecret) {

this.appId = parseInt(appId);

this.serverSecret = serverSecret;

}

generateSecureToken(userId, sessionDuration = 7200) {

if (!userId || typeof userId !== 'string') {

throw new Error('Valid user ID required for voice session');

}

const currentTimestamp = Math.floor(Date.now() / 1000);

const tokenPayload = {

app_id: this.appId,

user_id: userId,

nonce: this.generateRandomNonce(),

ctime: currentTimestamp,

expire: currentTimestamp + sessionDuration,

payload: JSON.stringify({ voiceAgent: true })

};

return this.encryptTokenPayload(tokenPayload);

}

generateRandomNonce() {

return crypto.randomInt(-(2 ** 31), 2 ** 31); // signed 32-bit range; the upper bound is exclusive

}

encryptTokenPayload(payload) {

const VERSION_FLAG = '04';

const plaintext = JSON.stringify(payload);

// AES-256-GCM encryption for voice session security

const key = Buffer.from(this.serverSecret, 'utf8');

const nonce = crypto.randomBytes(12);

const cipher = crypto.createCipheriv('aes-256-gcm', key, nonce);

cipher.setAutoPadding(true);

let encrypted = cipher.update(plaintext, 'utf8');

encrypted = Buffer.concat([encrypted, cipher.final()]);

const authTag = cipher.getAuthTag();

// Construct binary token structure

const expireBuffer = Buffer.alloc(8);

expireBuffer.writeBigUInt64BE(BigInt(payload.expire), 0);

const nonceLength = Buffer.alloc(2);

nonceLength.writeUInt16BE(nonce.length, 0);

const encryptedLength = Buffer.alloc(2);

encryptedLength.writeUInt16BE(encrypted.length + authTag.length, 0);

const encryptionMode = Buffer.alloc(1);

encryptionMode.writeUInt8(1, 0); // GCM mode

const tokenBuffer = Buffer.concat([

expireBuffer,

nonceLength,

nonce,

encryptedLength,

encrypted,

authTag,

encryptionMode

]);

return VERSION_FLAG + tokenBuffer.toString('base64');

}

}

module.exports = VoiceTokenGenerator;

3. Voice Agent Server Implementation

Build the core server that manages voice agent lifecycle, handles audio streaming connections, and coordinates AI response generation. Create the main server file at voice-server/src/server.js:

const express = require('express');

const cors = require('cors');

const axios = require('axios');

require('dotenv').config();

const VoiceTokenGenerator = require('../auth/tokenGenerator');

const app = express();

// Configure middleware for voice agent requests

app.use(cors({

origin: ['http://localhost:5173', 'http://localhost:3000'],

credentials: true,

methods: ['GET', 'POST', 'OPTIONS'],

allowedHeaders: ['Content-Type', 'Authorization']

}));

app.use(express.json({ limit: '10mb' }));

app.use(express.urlencoded({ extended: true }));

// Environment validation and configuration

const requiredEnvVars = [

'ZEGO_APP_ID',

'ZEGO_SERVER_SECRET',

'ZEGO_API_BASE_URL',

'LLM_API_KEY'

];

const missingVars = requiredEnvVars.filter(varName => !process.env[varName]);

if (missingVars.length > 0) {

console.error('❌ Missing critical environment variables:', missingVars);

process.exit(1);

}

const {

ZEGO_APP_ID,

ZEGO_SERVER_SECRET,

ZEGO_API_BASE_URL,

LLM_URL,

LLM_API_KEY,

LLM_MODEL,

PORT = 3001

} = process.env;

// Initialize voice token generator

const tokenGenerator = new VoiceTokenGenerator(ZEGO_APP_ID, ZEGO_SERVER_SECRET);

// Health monitoring for voice agent services

app.get('/health', (req, res) => {

res.json({

status: 'operational',

service: 'voice-agent',

timestamp: new Date().toISOString(),

capabilities: {

voiceProcessing: true,

aiIntegration: true,

realTimeAudio: true

}

});

});

// Voice session token generation

app.get('/api/voice/token', (req, res) => {

try {

const { userId } = req.query;

if (!userId) {

return res.status(400).json({

success: false,

error: 'User identifier required for voice session authentication'

});

}

const voiceToken = tokenGenerator.generateSecureToken(userId, 7200);

console.log(`🔐 Generated voice token for user: ${userId}`);

res.json({

success: true,

token: voiceToken,

expiresIn: 7200,

userId: userId

});

} catch (error) {

console.error('❌ Voice token generation error:', error.message);

res.status(500).json({

success: false,

error: 'Voice authentication token generation failed'

});

}

});

// Voice agent initialization

app.post('/api/voice/initialize', async (req, res) => {

try {

const { roomId, userId, voiceProfile = 'default' } = req.body;

if (!roomId || !userId) {

return res.status(400).json({

success: false,

error: 'Room ID and User ID required for voice agent initialization'

});

}

console.log(`🎤 Initializing voice agent for room: ${roomId}, user: ${userId}`);

const voiceAgentConfig = {

app_id: parseInt(ZEGO_APP_ID),

room_id: roomId,

user_id: userId,

user_stream_id: `${userId}_voice_stream`,

ai_agent_config: {

llm_config: {

url: LLM_URL,

api_key: LLM_API_KEY,

model: LLM_MODEL,

context: [

{

role: "system",

content: "You are an intelligent voice assistant. Respond naturally and conversationally. Keep responses concise and engaging for voice interaction. Be helpful, friendly, and adaptive to the user's speaking style."

}

],

temperature: 0.7,

max_tokens: 150

},

tts_config: {

provider: "elevenlabs",

voice_id: "pNInz6obpgDQGcFmaJgB",

model: "eleven_turbo_v2_5",

stability: 0.5,

similarity_boost: 0.75

},

asr_config: {

provider: "deepgram",

language: "en",

model: "nova-2",

smart_format: true,

punctuate: true

}

}

};

const response = await axios.post(

`${ZEGO_API_BASE_URL}/v1/ai_agent/start`,

voiceAgentConfig,

{

headers: { 'Content-Type': 'application/json' },

timeout: 30000

}

);

if (response.data?.data?.ai_agent_instance_id) {

const agentInstanceId = response.data.data.ai_agent_instance_id;

console.log(`✅ Voice agent initialized successfully: ${agentInstanceId}`);

res.json({

success: true,

agentId: agentInstanceId,

roomId: roomId,

userId: userId,

voiceProfile: voiceProfile

});

} else {

throw new Error('Invalid response from voice agent service');

}

} catch (error) {

console.error('❌ Voice agent initialization failed:', error.response?.data || error.message);

res.status(500).json({

success: false,

error: 'Voice agent initialization failed',

details: error.response?.data?.message || error.message

});

}

});

// Voice message processing

app.post('/api/voice/message', async (req, res) => {

try {

const { agentId, message, messageType = 'text' } = req.body;

if (!agentId || !message) {

return res.status(400).json({

success: false,

error: 'Agent ID and message content required'

});

}

console.log(`💬 Processing voice message for agent ${agentId}: ${message.substring(0, 100)}...`);

const messagePayload = {

ai_agent_instance_id: agentId,

messages: [

{

role: "user",

content: message,

type: messageType

}

]

};

await axios.post(

`${ZEGO_API_BASE_URL}/v1/ai_agent/chat`,

messagePayload,

{

headers: { 'Content-Type': 'application/json' },

timeout: 30000

}

);

res.json({

success: true,

message: 'Voice message processed successfully',

agentId: agentId

});

} catch (error) {

console.error('❌ Voice message processing failed:', error.response?.data || error.message);

res.status(500).json({

success: false,

error: 'Voice message processing failed'

});

}

});

// Voice agent session termination

app.post('/api/voice/terminate', async (req, res) => {

try {

const { agentId } = req.body;

if (!agentId) {

return res.status(400).json({

success: false,

error: 'Agent ID required for session termination'

});

}

console.log(`🛑 Terminating voice agent session: ${agentId}`);

await axios.post(

`${ZEGO_API_BASE_URL}/v1/ai_agent/stop`,

{ ai_agent_instance_id: agentId },

{

headers: { 'Content-Type': 'application/json' },

timeout: 15000

}

);

res.json({

success: true,

message: 'Voice agent session terminated successfully'

});

} catch (error) {

console.error('❌ Voice agent termination failed:', error.response?.data || error.message);

res.status(500).json({

success: false,

error: 'Voice agent session termination failed'

});

}

});

// Error handling middleware

app.use((error, req, res, next) => {

console.error('Voice agent server error:', error);

res.status(500).json({

success: false,

error: 'Internal voice agent server error'

});

});

app.listen(PORT, () => {

console.log(`🎤 Voice Agent server running on port ${PORT}`);

console.log(`🔗 API endpoint: http://localhost:${PORT}`);

console.log(`🏥 Health check: http://localhost:${PORT}/health`);

});

4. Frontend Voice Interface with Vite

Set up the modern React frontend using Vite for rapid development and optimal voice agent user experience.

Navigate back to the project root and create the voice client:

cd ..

npm create vite@latest voice-client -- --template react-ts

cd voice-client

Install the essential packages for voice agent functionality:

npm install axios framer-motion lucide-react zego-express-engine-webrtc

npm install --save-dev @types/node

Configure the development environment in voice-client/.env:

VITE_ZEGO_APP_ID=your_app_id_here

VITE_ZEGO_SERVER=wss://webliveroom-api.zegocloud.com/ws

VITE_VOICE_API_URL=http://localhost:3001
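A quick validation pass over these three values can catch placeholder or malformed settings before the SDK is initialized. The following is a minimal sketch: `validateClientConfig` is a hypothetical helper, and the rules it applies (a positive integer AppID, a `wss://` server address, a parseable API URL) are assumptions drawn from the sample values above rather than documented SDK requirements.

```javascript
// Hypothetical helper: checks an env-like object and reports any problems found.
function validateClientConfig(env) {
  const problems = [];

  // The AppID is used as a number later, so placeholders such as
  // "your_app_id_here" should be rejected early.
  const appId = Number(env.VITE_ZEGO_APP_ID);
  if (!Number.isInteger(appId) || appId <= 0) {
    problems.push('VITE_ZEGO_APP_ID must be a positive integer');
  }

  // Assumption: the signalling server is always reached over secure WebSocket.
  if (!/^wss:\/\//.test(env.VITE_ZEGO_SERVER ?? '')) {
    problems.push('VITE_ZEGO_SERVER must start with wss://');
  }

  // new URL() throws on values it cannot parse, including undefined.
  try {
    new URL(env.VITE_VOICE_API_URL);
  } catch {
    problems.push('VITE_VOICE_API_URL must be a valid URL');
  }

  return { ok: problems.length === 0, appId, problems };
}

const result = validateClientConfig({
  VITE_ZEGO_APP_ID: 'your_app_id_here',
  VITE_ZEGO_SERVER: 'wss://webliveroom-api.zegocloud.com/ws',
  VITE_VOICE_API_URL: 'http://localhost:3001'
});
console.log(result.ok, result.problems);
```

In the Vite client the same function could be called with `import.meta.env` as its argument.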

Update the Vite configuration at voice-client/vite.config.ts:

import { defineConfig } from 'vite'

import react from '@vitejs/plugin-react'

export default defineConfig({

plugins: [react()],

define: {

global: 'globalThis',

},

server: {

port: 5173,

host: true,

},

optimizeDeps: {

include: ['zego-express-engine-webrtc'],

},

build: {

rollupOptions: {

output: {

manualChunks: {

vendor: ['react', 'react-dom'],

zego: ['zego-express-engine-webrtc']

}

}

}

}

})

This configuration optimizes the ZEGOCLOUD SDK loading and ensures proper build output for voice agent deployment.

5. Voice Agent Data Types and Interfaces

Define the TypeScript interfaces that structure voice agent data flow and ensure type safety across components.

Create voice-client/src/types/voice.ts:

export interface VoiceMessage {

id: string

content: string

speaker: 'user' | 'agent'

timestamp: number

type: 'voice' | 'text'

transcript?: string

duration?: number

confidence?: number

}

export interface VoiceSession {

sessionId: string

roomId: string

userId: string

agentId?: string

isActive: boolean

startTime: number

lastActivity: number

}

export interface VoiceAgentStatus {

state: 'idle' | 'listening' | 'processing' | 'speaking' | 'error'

currentTranscript: string

isRecording: boolean

audioLevel: number

connectionQuality: 'excellent' | 'good' | 'fair' | 'poor'

}

export interface VoiceConfiguration {

language: string

voiceProfile: string

speechRate: number

volume: number

noiseReduction: boolean

autoGainControl: boolean

}

export interface AudioStreamInfo {

streamId: string

userId: string

isActive: boolean

audioCodec: string

bitrate: number

sampleRate: number

}

6. ZEGOCLOUD Voice Service Integration

Implement the comprehensive voice service that manages real-time audio streaming, message handling, and voice agent coordination.

Create voice-client/src/services/voiceService.ts with the following code:

import { ZegoExpressEngine } from 'zego-express-engine-webrtc'

import type { VoiceMessage, VoiceSession, VoiceAgentStatus, AudioStreamInfo } from '../types/voice'

export class VoiceAgentService {

private static instance: VoiceAgentService

private zegoEngine: ZegoExpressEngine | null = null

private currentSession: VoiceSession | null = null

private localAudioStream: MediaStream | null = null

private messageHandlers: Map<string, (message: any) => void> = new Map()

private statusListeners: Set<(status: VoiceAgentStatus) => void> = new Set()

private isEngineInitialized = false

static getInstance(): VoiceAgentService {

if (!VoiceAgentService.instance) {

VoiceAgentService.instance = new VoiceAgentService()

}

return VoiceAgentService.instance

}

async initializeVoiceEngine(appId: string, serverUrl: string): Promise<boolean> {

if (this.isEngineInitialized) {

console.log('Voice engine already initialized')

return true

}

try {

this.zegoEngine = new ZegoExpressEngine(parseInt(appId), serverUrl)

this.setupVoiceEventHandlers()

this.isEngineInitialized = true

console.log('✅ Voice engine initialized successfully')

return true

} catch (error) {

console.error('❌ Voice engine initialization failed:', error)

return false

}

}

private setupVoiceEventHandlers(): void {

if (!this.zegoEngine) return

// Handle voice messages from AI agent

this.zegoEngine.on('recvExperimentalAPI', (result: any) => {

if (result.method === 'onRecvRoomChannelMessage') {

try {

const messageData = JSON.parse(result.content.msgContent)

this.processVoiceMessage(messageData)

} catch (error) {

console.error('Failed to parse voice message:', error)

}

}

})

// Monitor audio stream changes

this.zegoEngine.on('roomStreamUpdate', async (roomId: string, updateType: string, streamList: any[]) => {

console.log(`Audio stream update in room ${roomId}:`, updateType, streamList.length)

if (updateType === 'ADD') {

for (const stream of streamList) {

if (this.isAgentAudioStream(stream.streamID)) {

await this.handleAgentAudioStream(stream)

}

}

} else if (updateType === 'DELETE') {

this.handleStreamDisconnection(streamList)

}

})

// Track connection quality for voice optimization

this.zegoEngine.on('networkQuality', (userId: string, upstreamQuality: number, downstreamQuality: number) => {

this.updateConnectionQuality(upstreamQuality, downstreamQuality)

})

// Handle room connection status

this.zegoEngine.on('roomStateChanged', (roomId: string, reason: string, errorCode: number) => {

console.log(`Voice room state changed: ${reason}, error: ${errorCode}`)

if (errorCode !== 0) {

this.notifyStatusChange({

state: 'error',

currentTranscript: '',

isRecording: false,

audioLevel: 0,

connectionQuality: 'poor'

})

}

})

}

private processVoiceMessage(messageData: any): void {

const { Cmd, Data } = messageData

if (Cmd === 3) { // User voice input processing

this.handleUserVoiceInput(Data)

} else if (Cmd === 4) { // AI agent response

this.handleAgentResponse(Data)

}

}

private handleUserVoiceInput(data: any): void {

const { Text: transcript, EndFlag, MessageId, Confidence } = data

if (transcript?.trim()) {

this.notifyStatusChange({

state: 'listening',

currentTranscript: transcript,

isRecording: true,

audioLevel: Confidence || 0.8,

connectionQuality: 'good'

})

if (EndFlag) {

const voiceMessage: VoiceMessage = {

id: MessageId || `voice_${Date.now()}`,

content: transcript.trim(),

speaker: 'user',

timestamp: Date.now(),

type: 'voice',

transcript: transcript.trim(),

confidence: Confidence

}

this.broadcastMessage('userVoiceInput', voiceMessage)

this.notifyStatusChange({

state: 'processing',

currentTranscript: '',

isRecording: false,

audioLevel: 0,

connectionQuality: 'good'

})

}

}

}

private handleAgentResponse(data: any): void {

const { Text: responseText, MessageId, EndFlag } = data

if (responseText && MessageId) {

if (EndFlag) {

const agentMessage: VoiceMessage = {

id: MessageId,

content: responseText,

speaker: 'agent',

timestamp: Date.now(),

type: 'voice'

}

this.broadcastMessage('agentResponse', agentMessage)

this.notifyStatusChange({

state: 'idle',

currentTranscript: '',

isRecording: false,

audioLevel: 0,

connectionQuality: 'good'

})

} else {

this.notifyStatusChange({

state: 'speaking',

currentTranscript: responseText,

isRecording: false,

audioLevel: 0.7,

connectionQuality: 'good'

})

}

}

}

private isAgentAudioStream(streamId: string): boolean {

return streamId.includes('agent') ||

(this.currentSession !== null && !streamId.includes(this.currentSession.userId))

}

private async handleAgentAudioStream(stream: any): Promise<void> {

try {

const mediaStream = await this.zegoEngine!.startPlayingStream(stream.streamID)

if (mediaStream) {

const audioElement = document.getElementById('voice-agent-audio') as HTMLAudioElement

if (audioElement) {

audioElement.srcObject = mediaStream

audioElement.volume = 0.8

await audioElement.play()

console.log('✅ Agent audio stream connected')

}

}

} catch (error) {

console.error('❌ Failed to play agent audio stream:', error)

}

}

private handleStreamDisconnection(streamList: any[]): void {

console.log('Audio streams disconnected:', streamList.length)

const audioElement = document.getElementById('voice-agent-audio') as HTMLAudioElement

if (audioElement) {

audioElement.srcObject = null

}

}

private updateConnectionQuality(upstream: number, downstream: number): void {

const avgQuality = (upstream + downstream) / 2

let quality: 'excellent' | 'good' | 'fair' | 'poor'

if (avgQuality <= 1) quality = 'excellent'

else if (avgQuality <= 2) quality = 'good'

else if (avgQuality <= 3) quality = 'fair'

else quality = 'poor'

// Notify about quality changes for UI updates

this.statusListeners.forEach(listener => {

listener({

state: 'idle',

currentTranscript: '',

isRecording: false,

audioLevel: 0,

connectionQuality: quality

})

})

}

async joinVoiceSession(roomId: string, userId: string, token: string): Promise<boolean> {

if (!this.zegoEngine) {

console.error('Voice engine not initialized')

return false

}

try {

// Join the voice room

await this.zegoEngine.loginRoom(roomId, token, {

userID: userId,

userName: `VoiceUser_${userId}`

})

// Enable voice message reception

this.zegoEngine.callExperimentalAPI({

method: 'onRecvRoomChannelMessage',

params: {}

})

// Create and publish audio stream

const audioStream = await this.zegoEngine.createZegoStream({

camera: { video: false, audio: true }

})

if (audioStream) {

this.localAudioStream = audioStream

await this.zegoEngine.startPublishingStream(`${userId}_voice_stream`, audioStream)

}

this.currentSession = {

sessionId: `session_${Date.now()}`,

roomId,

userId,

isActive: true,

startTime: Date.now(),

lastActivity: Date.now()

}

console.log('✅ Voice session joined successfully')

return true

} catch (error) {

console.error('❌ Failed to join voice session:', error)

return false

}

}

async enableMicrophone(enabled: boolean): Promise<boolean> {

if (!this.localAudioStream) {

console.warn('No audio stream available for microphone control')

return false

}

try {

const audioTrack = this.localAudioStream.getAudioTracks()[0]

if (audioTrack) {

audioTrack.enabled = enabled

console.log(`🎤 Microphone ${enabled ? 'enabled' : 'disabled'}`)

return true

}

return false

} catch (error) {

console.error('❌ Failed to control microphone:', error)

return false

}

}

async leaveVoiceSession(): Promise<void> {

if (!this.zegoEngine || !this.currentSession) {

console.log('No active voice session to leave')

return

}

try {

// Stop local audio stream

if (this.localAudioStream && this.currentSession.userId) {

await this.zegoEngine.stopPublishingStream(`${this.currentSession.userId}_voice_stream`)

this.zegoEngine.destroyStream(this.localAudioStream)

this.localAudioStream = null

}

// Leave the room

await this.zegoEngine.logoutRoom()

// Clear session data

this.currentSession = null

console.log('✅ Voice session ended successfully')

} catch (error) {

console.error('❌ Failed to leave voice session properly:', error)

} finally {

this.currentSession = null

this.localAudioStream = null

}

}

// Message handling system

onMessage(type: string, handler: (message: any) => void): void {

this.messageHandlers.set(type, handler)

}

private broadcastMessage(type: string, message: any): void {

const handler = this.messageHandlers.get(type)

if (handler) {

handler(message)

}

}

// Status monitoring system

onStatusChange(listener: (status: VoiceAgentStatus) => void): void {

this.statusListeners.add(listener)

}

private notifyStatusChange(status: VoiceAgentStatus): void {

this.statusListeners.forEach(listener => listener(status))

}

// Cleanup and resource management

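async destroy(): Promise<void> {

// Wait for the session teardown to finish before dropping the engine reference

await this.leaveVoiceSession()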

this.messageHandlers.clear()

this.statusListeners.clear()

if (this.zegoEngine) {

this.zegoEngine = null

this.isEngineInitialized = false

}

}

// Session information getters

getCurrentSession(): VoiceSession | null {

return this.currentSession

}

isSessionActive(): boolean {

return this.currentSession?.isActive ?? false

}

}

This service provides comprehensive voice agent functionality with real-time audio processing, message handling, and connection management.
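The message-handling part of the service can be easier to reason about, and to unit test, when the parsing is pulled out into a pure function. The sketch below is one possible shape for that. `classifyRoomMessage` is a hypothetical helper, and the `Cmd` codes (3 for user speech, 4 for agent replies) and the `Data.Text` / `Data.EndFlag` fields are taken from the service code above rather than from an independent specification.

```javascript
// Hypothetical helper: turns the raw msgContent string of a room message into
// a small, predictable object. Anything it cannot interpret is marked 'ignored'.
function classifyRoomMessage(rawContent) {
  const ignored = { kind: 'ignored', text: '', final: false };

  let parsed;
  try {
    parsed = JSON.parse(rawContent);
  } catch {
    return ignored; // not JSON at all
  }

  const data = parsed?.Data ?? {};
  const text = typeof data.Text === 'string' ? data.Text.trim() : '';

  // Cmd values as used in the service above: 3 = user transcript, 4 = agent reply.
  const kind = parsed?.Cmd === 3 ? 'user' : parsed?.Cmd === 4 ? 'agent' : 'ignored';
  if (kind === 'ignored' || !text) {
    return ignored;
  }

  // EndFlag marks the final chunk of an utterance; earlier chunks are partial.
  return { kind, text, final: Boolean(data.EndFlag) };
}

const sample = JSON.stringify({ Cmd: 4, Data: { Text: 'Hello there', EndFlag: false } });
console.log(classifyRoomMessage(sample));
```

With a helper like this, the event handler only needs to decide what to do with a `user` or `agent` result, and malformed messages never reach the UI layer.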

7. Voice Agent API Service

Create the API communication layer that connects the frontend voice interface with the backend voice processing server.

Build voice-client/src/services/apiService.ts:

import axios, { type AxiosInstance, type AxiosResponse } from 'axios'

interface ApiResponse<T = any> {

success: boolean

data?: T

error?: string

message?: string

}

interface VoiceTokenResponse {

token: string

expiresIn: number

userId: string

}

interface VoiceInitResponse {

agentId: string

roomId: string

userId: string

voiceProfile: string

}

export class VoiceApiService {

private static instance: VoiceApiService

private apiClient: AxiosInstance

constructor() {

const baseURL = import.meta.env.VITE_VOICE_API_URL || 'http://localhost:3001'

this.apiClient = axios.create({

baseURL,

timeout: 30000,

headers: {

'Content-Type': 'application/json'

}

})

this.setupInterceptors()

}

static getInstance(): VoiceApiService {

if (!VoiceApiService.instance) {

VoiceApiService.instance = new VoiceApiService()

}

return VoiceApiService.instance

}

private setupInterceptors(): void {

// Request interceptor for logging

this.apiClient.interceptors.request.use(

(config) => {

console.log(`🌐 Voice API Request: ${config.method?.toUpperCase()} ${config.url}`)

return config

},

(error) => {

console.error('❌ Voice API Request Error:', error)

return Promise.reject(error)

}

)

// Response interceptor for standardized handling

this.apiClient.interceptors.response.use(

(response: AxiosResponse) => {

console.log(`✅ Voice API Response: ${response.status} ${response.config.url}`)

return response

},

(error) => {

console.error('❌ Voice API Response Error:', {

status: error.response?.status,

message: error.response?.data?.error || error.message,

url: error.config?.url

})

return Promise.reject(error)

}

)

}

async checkHealth(): Promise<boolean> {

try {

const response = await this.apiClient.get('/health')

return response.data.status === 'operational'

} catch (error) {

console.error('Voice API health check failed:', error)

return false

}

}

async getVoiceToken(userId: string): Promise<VoiceTokenResponse> {

try {

if (!userId || typeof userId !== 'string') {

throw new Error('Valid user ID required for voice token')

}

const response = await this.apiClient.get(`/api/voice/token?userId=${encodeURIComponent(userId)}`)

if (!response.data.success || !response.data.token) {

throw new Error('Invalid token response from voice service')

}

return {

token: response.data.token,

expiresIn: response.data.expiresIn,

userId: response.data.userId

}

} catch (error: any) {

const errorMessage = error.response?.data?.error || error.message || 'Voice token generation failed'

throw new Error(`Voice authentication failed: ${errorMessage}`)

}

}

async initializeVoiceAgent(roomId: string, userId: string, voiceProfile = 'default'): Promise<VoiceInitResponse> {

try {

if (!roomId || !userId) {

throw new Error('Room ID and User ID required for voice agent initialization')

}

const requestData = {

roomId,

userId,

voiceProfile

}

console.log(`🎤 Initializing voice agent for room: ${roomId}`)

const response = await this.apiClient.post('/api/voice/initialize', requestData)

if (!response.data.success || !response.data.agentId) {

throw new Error('Voice agent initialization returned invalid response')

}

return {

agentId: response.data.agentId,

roomId: response.data.roomId,

userId: response.data.userId,

voiceProfile: response.data.voiceProfile

}

} catch (error: any) {

const errorMessage = error.response?.data?.error || error.message || 'Voice agent initialization failed'

throw new Error(`Voice agent setup failed: ${errorMessage}`)

}

}

async sendVoiceMessage(agentId: string, message: string, messageType = 'text'): Promise<void> {

try {

if (!agentId || !message?.trim()) {

throw new Error('Agent ID and message content required')

}

const requestData = {

agentId,

message: message.trim(),

messageType

}

console.log(`💬 Sending voice message to agent: ${agentId}`)

const response = await this.apiClient.post('/api/voice/message', requestData)

if (!response.data.success) {

throw new Error('Voice message sending failed')

}

} catch (error: any) {

const errorMessage = error.response?.data?.error || error.message || 'Voice message transmission failed'

throw new Error(`Voice message failed: ${errorMessage}`)

}

}

async terminateVoiceAgent(agentId: string): Promise<void> {

try {

if (!agentId) {

console.warn('No agent ID provided for termination')

return

}

console.log(`🛑 Terminating voice agent: ${agentId}`)

const response = await this.apiClient.post('/api/voice/terminate', { agentId })

if (!response.data.success) {

console.warn('Voice agent termination returned non-success status')

}

} catch (error: any) {

console.error('Voice agent termination error:', error.response?.data || error.message)

// Don't throw here as cleanup should continue even if termination fails

}

}

// Utility method for testing API connectivity

async testConnection(): Promise<{ connected: boolean; latency: number }> {

const startTime = Date.now()

try {

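// checkHealth() resolves to false instead of throwing, so use its result

const healthy = await this.checkHealth()

const latency = healthy ? Date.now() - startTime : -1

return { connected: healthy, latency }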

} catch (error) {

return { connected: false, latency: -1 }

}

}

}

export const voiceApiService = VoiceApiService.getInstance()

This API service provides clean interfaces for all voice agent operations with comprehensive error handling and connection monitoring.
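Each method in the service builds its error message from the same fallback chain: the server's `error` field first, then the client-side message, then a default. As a rough sketch, that chain could be factored into two small helpers. `extractErrorMessage` and `toVoiceError` are hypothetical names, and the error shape assumed here is the axios-style `error.response.data.error` used in the service.

```javascript
// Hypothetical helper: prefers the server-provided error text, then the
// error's own message, then the caller-supplied fallback.
function extractErrorMessage(error, fallback) {
  return error?.response?.data?.error || error?.message || fallback;
}

// Hypothetical helper: wraps the extracted message with an operation prefix,
// mirroring strings such as "Voice authentication failed: ...".
function toVoiceError(prefix, error, fallback) {
  return new Error(`${prefix}: ${extractErrorMessage(error, fallback)}`);
}

const serverSide = { response: { data: { error: 'Agent ID and message content required' } } };
console.log(toVoiceError('Voice message failed', serverSide, 'Voice message transmission failed').message);
```

Centralizing the chain keeps the wording consistent across methods and gives one place to change if the server's error format evolves.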

8. Main Voice Agent Component

Implement the primary React component that orchestrates voice agent functionality and provides the user interface.
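Stripped of UI concerns, the orchestration the component performs is a fixed sequence: fetch a token, join the room, then start the agent. The sketch below shows that order with the two services passed in as plain objects, which makes it runnable with simple stand-ins. `startSession` is a hypothetical function, and leaving the room when agent startup fails is an assumption about sensible cleanup, not something the tutorial's services require.

```javascript
// Hypothetical orchestration helper. `api` and `voice` are stand-ins for the
// API service and voice service built in the previous steps.
async function startSession({ api, voice }, roomId, userId) {
  // 1. Authenticate: the token is needed before the room can be joined.
  const { token } = await api.getVoiceToken(userId);

  // 2. Join the room and publish the local audio stream.
  const joined = await voice.joinVoiceSession(roomId, userId, token);
  if (!joined) {
    throw new Error('Failed to join voice session');
  }

  // 3. Ask the backend to start the AI agent in the same room.
  try {
    const { agentId } = await api.initializeVoiceAgent(roomId, userId);
    return { roomId, userId, agentId, isActive: true };
  } catch (error) {
    // Assumption: leave the room so a failed start does not strand the user
    // in a room with no agent.
    await voice.leaveVoiceSession();
    throw error;
  }
}

// Minimal stand-ins, for illustration only.
const fakeApi = {
  getVoiceToken: async () => ({ token: 'demo-token' }),
  initializeVoiceAgent: async () => ({ agentId: 'agent-1' })
};
const fakeVoice = {
  joinVoiceSession: async () => true,
  leaveVoiceSession: async () => {}
};

startSession({ api: fakeApi, voice: fakeVoice }, 'room-1', 'user-1')
  .then((session) => console.log(session.agentId, session.isActive));
```

Passing the services in as arguments also makes the sequence testable without a browser or a running backend.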

Create voice-client/src/components/VoiceAgent.tsx:

import { useState, useEffect, useRef, useCallback } from 'react'

import { motion, AnimatePresence } from 'framer-motion'

import {

Mic, MicOff, Volume2, VolumeX, Phone, PhoneOff,

MessageSquare, Settings, Wifi, WifiOff, Activity

} from 'lucide-react'

import { VoiceAgentService } from '../services/voiceService'

import { voiceApiService } from '../services/apiService'

import type { VoiceMessage, VoiceSession, VoiceAgentStatus } from '../types/voice'

export const VoiceAgent = () => {

// Core state management

const [isInitialized, setIsInitialized] = useState(false)

const [currentSession, setCurrentSession] = useState<VoiceSession | null>(null)

const [messages, setMessages] = useState<VoiceMessage[]>([])

const [agentStatus, setAgentStatus] = useState<VoiceAgentStatus>({

state: 'idle',

currentTranscript: '',

isRecording: false,

audioLevel: 0,

connectionQuality: 'good'

})

// UI state

const [isConnecting, setIsConnecting] = useState(false)

const [showSettings, setShowSettings] = useState(false)

const [volumeLevel, setVolumeLevel] = useState(0.8)

const [microphoneEnabled, setMicrophoneEnabled] = useState(true)

// Service references

const voiceService = useRef(VoiceAgentService.getInstance())

const messagesEndRef = useRef<HTMLDivElement>(null)

// Environment configuration

const ZEGO_APP_ID = import.meta.env.VITE_ZEGO_APP_ID

const ZEGO_SERVER = import.meta.env.VITE_ZEGO_SERVER

// Initialize voice agent on component mount

useEffect(() => {

initializeVoiceServices()

return () => {

cleanup()

}

}, [])

// Auto-scroll messages

useEffect(() => {

scrollToBottom()

}, [messages])

const initializeVoiceServices = async () => {

try {

if (!ZEGO_APP_ID || !ZEGO_SERVER) {

throw new Error('ZEGOCLOUD configuration missing')

}

const engineInitialized = await voiceService.current.initializeVoiceEngine(ZEGO_APP_ID, ZEGO_SERVER)

if (!engineInitialized) {

throw new Error('Voice engine initialization failed')

}

setupVoiceEventHandlers()

setIsInitialized(true)

console.log('✅ Voice agent services initialized')

} catch (error) {

console.error('❌ Voice initialization failed:', error)

addSystemMessage('Voice initialization failed. Please refresh and try again.')

}

}

const setupVoiceEventHandlers = () => {

// Handle user voice input

voiceService.current.onMessage('userVoiceInput', (message: VoiceMessage) => {

addMessage(message)

})

// Handle agent responses

voiceService.current.onMessage('agentResponse', (message: VoiceMessage) => {

addMessage(message)

})

// Monitor voice agent status changes

voiceService.current.onStatusChange((status: VoiceAgentStatus) => {

setAgentStatus(status)

})

}

const addMessage = (message: VoiceMessage) => {

setMessages(prev => [...prev, message])

}

const addSystemMessage = (content: string) => {

const systemMessage: VoiceMessage = {

id: `system_${Date.now()}`,

content,

speaker: 'agent',

timestamp: Date.now(),

type: 'text'

}

addMessage(systemMessage)

}

const scrollToBottom = () => {

messagesEndRef.current?.scrollIntoView({ behavior: 'smooth' })

}

const startVoiceSession = async () => {

if (isConnecting || currentSession?.isActive) return

setIsConnecting(true)

try {

const roomId = `voice_room_${Date.now()}`

const userId = `voice_user_${Date.now().toString().slice(-6)}`

// Get authentication token

const { token } = await voiceApiService.getVoiceToken(userId)

// Join voice room

const joinSuccess = await voiceService.current.joinVoiceSession(roomId, userId, token)

if (!joinSuccess) {

throw new Error('Failed to join voice session')

}

// Initialize AI voice agent

const { agentId } = await voiceApiService.initializeVoiceAgent(roomId, userId)

const newSession: VoiceSession = {

sessionId: `session_${Date.now()}`,

roomId,

userId,

agentId,

isActive: true,

startTime: Date.now(),

lastActivity: Date.now()

}

setCurrentSession(newSession)

addSystemMessage('Voice agent activated! Start speaking to begin your conversation.')

console.log('✅ Voice session started successfully')

} catch (error: any) {

console.error('❌ Voice session start failed:', error)

addSystemMessage(`Failed to start voice session: ${error.message}`)

} finally {

setIsConnecting(false)

}

}

const endVoiceSession = async () => {

if (!currentSession?.isActive) return

try {

// Terminate AI agent

if (currentSession.agentId) {

await voiceApiService.terminateVoiceAgent(currentSession.agentId)

}

// Leave voice room

await voiceService.current.leaveVoiceSession()

setCurrentSession(null)

setAgentStatus({

state: 'idle',

currentTranscript: '',

isRecording: false,

audioLevel: 0,

connectionQuality: 'good'

})

addSystemMessage('Voice session ended.')

console.log('✅ Voice session ended successfully')

} catch (error) {

console.error('❌ Voice session end failed:', error)

addSystemMessage('Session ended with some cleanup issues.')

}

}

const toggleMicrophone = async () => {

if (!currentSession?.isActive) return

try {

const newState = !microphoneEnabled

const success = await voiceService.current.enableMicrophone(newState)

if (success) {

setMicrophoneEnabled(newState)

addSystemMessage(`Microphone ${newState ? 'enabled' : 'disabled'}`)

}

} catch (error) {

console.error('❌ Microphone toggle failed:', error)

}

}

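// The mount effect captures cleanup() from the first render, where currentSession is still null,

// so the latest session is tracked in a ref and read at unmount time instead

const sessionRef = useRef<VoiceSession | null>(null)

sessionRef.current = currentSession

const cleanup = () => {

const session = sessionRef.current

void (async () => {

try {

if (session?.isActive) {

if (session.agentId) {

await voiceApiService.terminateVoiceAgent(session.agentId)

}

await voiceService.current.leaveVoiceSession()

}

} catch (error) {

console.error('❌ Voice cleanup failed:', error)

} finally {

// Release the engine only after the session teardown has been attempted

voiceService.current.destroy()

}

})()

}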

const getStatusDisplay = () => {

switch (agentStatus.state) {

case 'listening':

return { text: 'Listening...', color: 'text-green-500', icon: <Mic className="w-4 h-4" /> }

case 'processing':

return { text: 'Processing...', color: 'text-blue-500', icon: <Activity className="w-4 h-4 animate-spin" /> }

case 'speaking':

return { text: 'AI Speaking...', color: 'text-purple-500', icon: <Volume2 className="w-4 h-4" /> }

case 'error':

return { text: 'Connection Error', color: 'text-red-500', icon: <WifiOff className="w-4 h-4" /> }

default:

return {

text: currentSession?.isActive ? 'Ready' : 'Not Connected',

color: currentSession?.isActive ? 'text-green-500' : 'text-gray-500',

icon: currentSession?.isActive ? <Wifi className="w-4 h-4" /> : <WifiOff className="w-4 h-4" />

}

}

}

const getConnectionQualityColor = () => {

switch (agentStatus.connectionQuality) {

case 'excellent': return 'text-green-500'

case 'good': return 'text-blue-500'

case 'fair': return 'text-yellow-500'

case 'poor': return 'text-red-500'

default: return 'text-gray-500'

}

}

const status = getStatusDisplay()

if (!isInitialized) {

return (

<div className="min-h-screen bg-gradient-to-br from-slate-50 to-blue-50 flex items-center justify-center">

<div className="text-center">

<div className="w-16 h-16 border-4 border-blue-500 border-t-transparent rounded-full animate-spin mx-auto mb-4"></div>

<p className="text-gray-600">Initializing voice agent...</p>

</div>

</div>

)

}

return (

<div className="min-h-screen bg-gradient-to-br from-slate-50 to-blue-50">

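{/* Hidden audio element for agent voice. React has no volume prop on <audio>, so the volume is set on the DOM node through a ref callback. */}

<audio

id="voice-agent-audio"

autoPlay

controls={false}

style={{ display: 'none' }}

ref={(el) => { if (el) el.volume = volumeLevel }}

/>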

<div className="max-w-2xl mx-auto p-6">

{/* Header Section */}

<motion.div

initial={{ y: -20, opacity: 0 }}

animate={{ y: 0, opacity: 1 }}

className="bg-white rounded-2xl shadow-lg p-6 mb-6"

>

<div className="flex items-center justify-between">

<div className="flex items-center space-x-4">

<motion.div

animate={{

scale: agentStatus.state === 'listening' ? [1, 1.1, 1] :

agentStatus.state === 'speaking' ? [1, 1.05, 1] : 1,

backgroundColor: agentStatus.state === 'listening' ? '#10b981' :

agentStatus.state === 'speaking' ? '#8b5cf6' :

agentStatus.state === 'processing' ? '#3b82f6' : '#6b7280'

}}

transition={{ duration: 1, repeat: agentStatus.state !== 'idle' ? Infinity : 0 }}

className="w-12 h-12 rounded-full flex items-center justify-center text-white"

>

{status.icon}

</motion.div>

<div>

<h1 className="text-2xl font-bold text-gray-900">AI Voice Agent</h1>

<div className="flex items-center space-x-2">

<p className={`text-sm font-medium ${status.color}`}>

{status.text}

</p>

<span className={`text-xs ${getConnectionQualityColor()}`}>

• {agentStatus.connectionQuality}

</span>

</div>

</div>

</div>

<div className="flex items-center space-x-2">

{/* Microphone control */}

<motion.button

whileHover={{ scale: 1.05 }}

whileTap={{ scale: 0.95 }}

onClick={toggleMicrophone}

disabled={!currentSession?.isActive}

className={`p-3 rounded-full transition-colors ${

microphoneEnabled ? 'bg-green-100 text-green-600' : 'bg-red-100 text-red-600'

} disabled:opacity-50 disabled:cursor-not-allowed`}

>

{microphoneEnabled ? <Mic className="w-5 h-5" /> : <MicOff className="w-5 h-5" />}

</motion.button>

{/* Main session control */}

<motion.button

whileHover={{ scale: 1.05 }}

whileTap={{ scale: 0.95 }}

onClick={currentSession?.isActive ? endVoiceSession : startVoiceSession}

disabled={isConnecting}

className={`px-6 py-3 rounded-full font-semibold text-white transition-colors ${

currentSession?.isActive

? 'bg-red-500 hover:bg-red-600'

: 'bg-blue-500 hover:bg-blue-600'

} disabled:opacity-50 disabled:cursor-not-allowed`}

>

{isConnecting ? (

<div className="flex items-center space-x-2">

<div className="w-4 h-4 border-2 border-white border-t-transparent rounded-full animate-spin"></div>

<span>Connecting...</span>

</div>

) : (

<div className="flex items-center space-x-2">

{currentSession?.isActive ? <PhoneOff className="w-4 h-4" /> : <Phone className="w-4 h-4" />}

<span>{currentSession?.isActive ? 'End Session' : 'Start Voice Chat'}</span>

</div>

)}

</motion.button>

</div>

</div>

{/* Current transcript display */}

<AnimatePresence>

{agentStatus.currentTranscript && (

<motion.div

initial={{ height: 0, opacity: 0 }}

animate={{ height: 'auto', opacity: 1 }}

exit={{ height: 0, opacity: 0 }}

className="mt-4 p-4 bg-gradient-to-r from-blue-50 to-purple-50 rounded-lg border border-blue-200"

>

<div className="flex items-center space-x-2">

<motion.div

animate={{ scale: [1, 1.2, 1] }}

transition={{ repeat: Infinity, duration: 1.5 }}

className="w-3 h-3 bg-blue-500 rounded-full"

/>

<p className="text-blue-700 font-medium">

{agentStatus.state === 'listening' ? 'You: ' : 'AI: '}

"{agentStatus.currentTranscript}"

</p>

</div>

</motion.div>

)}

</AnimatePresence>

</motion.div>

{/* Messages Section */}

<motion.div

initial={{ y: 20, opacity: 0 }}

animate={{ y: 0, opacity: 1 }}

className="bg-white rounded-2xl shadow-lg p-6 max-h-96 overflow-y-auto"

>

{messages.length === 0 ? (

<div className="text-center py-12">

<div className="w-16 h-16 bg-gradient-to-br from-blue-500 to-purple-600 rounded-full flex items-center justify-center mx-auto mb-4">

<MessageSquare className="w-8 h-8 text-white" />

</div>

<h3 className="text-lg font-semibold text-gray-900 mb-2">

Welcome to AI Voice Agent

</h3>

<p className="text-gray-600 mb-6">

Start a voice session to have natural conversations with AI

</p>

<div className="space-y-2 text-sm text-gray-500">

<p>🎤 Natural speech recognition</p>

<p>🧠 Intelligent AI responses</p>

<p>🔊 High-quality voice output</p>

</div>

</div>

) : (

<div className="space-y-4">

{messages.map((message) => (

<motion.div

key={message.id}

initial={{ opacity: 0, y: 10 }}

animate={{ opacity: 1, y: 0 }}

className={`flex ${message.speaker === 'user' ? 'justify-end' : 'justify-start'}`}

>

<div className={`max-w-[80%] p-3 rounded-2xl ${

message.speaker === 'user'

? 'bg-blue-500 text-white rounded-br-md'

: 'bg-gray-100 text-gray-900 rounded-bl-md'

}`}>

<p className="text-sm">{message.content}</p>

<div className="flex items-center justify-between mt-1">

<span className="text-xs opacity-70">

{new Date(message.timestamp).toLocaleTimeString([], {

hour: '2-digit',

minute: '2-digit'

})}

</span>

{message.type === 'voice' && (

<Volume2 className="w-3 h-3 opacity-70" />

)}

</div>

</div>

</motion.div>

))}

<div ref={messagesEndRef} />

</div>

)}

</motion.div>

{/* Session Info */}

{currentSession?.isActive && (

<motion.div

initial={{ y: 20, opacity: 0 }}

animate={{ y: 0, opacity: 1 }}

className="mt-4 bg-white rounded-lg shadow p-4"

>

<div className="flex items-center justify-between text-sm text-gray-600">

<span>Session: {Math.floor((Date.now() - currentSession.startTime) / 1000)}s</span>

<span>Messages: {messages.length}</span>

<span className={getConnectionQualityColor()}>

Quality: {agentStatus.connectionQuality}

</span>

</div>

</motion.div>

)}

</div>

</div>

)

}

9. Application Integration and Styling

Integrate all components and apply styling to create the complete voice agent application.

Update voice-client/src/App.tsx:

import { VoiceAgent } from './components/VoiceAgent'

import './index.css'

function App() {

return (

<div className="app">

<VoiceAgent />

</div>

)

}

export default App

Configure Tailwind CSS in voice-client/src/index.css:

@tailwind base;

@tailwind components;

@tailwind utilities;

@layer base {

body {

margin: 0;

font-family: -apple-system, BlinkMacSystemFont, 'Segoe UI', 'Roboto', 'Oxygen',

'Ubuntu', 'Cantarell', sans-serif;

line-height: 1.5;

color: #1f2937;

background-color: #f8fafc;

}

* {

box-sizing: border-box;

}

#root {

width: 100%;

min-height: 100vh;

}

}

@layer components {

.app {

@apply w-full min-h-screen;

}

/* Custom scrollbar styles */

.overflow-y-auto::-webkit-scrollbar {

width: 6px;

}

.overflow-y-auto::-webkit-scrollbar-track {

@apply bg-gray-100 rounded-full;

}

.overflow-y-auto::-webkit-scrollbar-thumb {

@apply bg-gray-300 rounded-full hover:bg-gray-400;

}

}

@layer utilities {

.animate-pulse-slow {

animation: pulse 3s cubic-bezier(0.4, 0, 0.6, 1) infinite;

}

.line-clamp-2 {

overflow: hidden;

display: -webkit-box;

-webkit-box-orient: vertical;

-webkit-line-clamp: 2;

}

}

/* Custom animations for voice visualizations */

@keyframes voice-wave {

0%, 100% { transform: scaleY(1); }

50% { transform: scaleY(1.5); }

}

.voice-wave {

animation: voice-wave 1s ease-in-out infinite;

}

.voice-wave:nth-child(2) { animation-delay: 0.1s; }

.voice-wave:nth-child(3) { animation-delay: 0.2s; }

.voice-wave:nth-child(4) { animation-delay: 0.3s; }

Create the Tailwind configuration file voice-client/tailwind.config.js:

/** @type {import('tailwindcss').Config} */

export default {

content: [

"./index.html",

"./src/**/*.{js,ts,jsx,tsx}",

],

theme: {

extend: {

animation: {

'bounce-slow': 'bounce 2s infinite',

'pulse-slow': 'pulse 3s cubic-bezier(0.4, 0, 0.6, 1) infinite',

}

},

},

plugins: [],

}

Update the HTML template at voice-client/index.html:

<!doctype html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<link rel="icon" type="image/svg+xml" href="/vite.svg" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<meta name="description" content="AI Voice Agent - Natural voice conversations with artificial intelligence" />

<title>AI Voice Agent</title>

</head>

<body>

<div id="root"></div>

<script type="module" src="/src/main.tsx"></script>

</body>

</html>

10. Testing

Launch both servers and verify your voice agent functionality across different scenarios.
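
Before launching, it can help to confirm that the client configuration is complete, since the VoiceAgent component refuses to initialize when VITE_ZEGO_APP_ID or VITE_ZEGO_SERVER is missing. The sketch below is a hypothetical helper, not part of the tutorial's code or the ZEGOCLOUD SDK. It takes an environment object and reports which of those two variables are absent or blank.

```typescript
// Hypothetical pre-flight check; checkVoiceConfig is an illustrative name,
// not a function from the tutorial or the ZEGOCLOUD SDK.
type VoiceEnv = Record<string, string | undefined>

interface VoiceConfigCheck {
  ok: boolean
  missing: string[]
}

// These two variable names are the ones the VoiceAgent component reads.
const REQUIRED_KEYS: string[] = ['VITE_ZEGO_APP_ID', 'VITE_ZEGO_SERVER']

function checkVoiceConfig(env: VoiceEnv): VoiceConfigCheck {
  // A key counts as missing when it is undefined or only whitespace
  const missing = REQUIRED_KEYS.filter((key) => {
    const value = env[key]
    return value === undefined || value.trim() === ''
  })
  return { ok: missing.length === 0, missing }
}

// Example: the app ID is set but the server URL was left blank
const sampleCheck = checkVoiceConfig({ VITE_ZEGO_APP_ID: '123456789', VITE_ZEGO_SERVER: '' })
console.log(
  sampleCheck.ok
    ? 'Configuration looks complete'
    : `Missing configuration: ${sampleCheck.missing.join(', ')}`
)
// prints "Missing configuration: VITE_ZEGO_SERVER"
```

In the Vite client, the same function could presumably be called with import.meta.env so that the error message names the missing variable rather than only reporting that the configuration is incomplete.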

Start the voice agent server:

cd voice-server

npm run dev

In a separate terminal, launch the voice client:

cd voice-client

npm run dev

Run a Demo

Conclusion

This AI voice agent delivers smooth, natural conversations without the need for complicated setup. Users can simply talk and receive intelligent voice responses in real time.

With ZEGOCLOUD handling the complex parts (audio processing, streaming, and speech recognition), you’re free to focus on building engaging experiences instead of technical infrastructure.

The foundation is flexible and scalable: you can adjust the AI’s personality, connect it with business systems, or extend its features while keeping a reliable core for voice interactions.

FAQ

Q1: How to create an AI voice agent?

You can create an AI voice agent by combining speech recognition, natural language processing, and text-to-speech. Using platforms like ZEGOCLOUD, you can simplify this process with one SDK that manages the full voice pipeline.

Q2: Can I create my own AI agent?

Yes. With modern APIs and SDKs, anyone can build a custom AI agent. You do not need to train models from scratch since you can integrate existing AI services to handle speech and conversation.

Q3: How do I build my own AI voice?

To build your own AI voice, you need text-to-speech technology that converts responses into natural speech. ZEGOCLOUD integrates this with speech recognition and AI processing so you can create a complete voice interaction system.

Q4: What is the architecture of a voice AI agent?

A voice AI agent typically has four layers: speech recognition to capture input, natural language processing to understand meaning, decision-making powered by a language model, and voice synthesis to respond naturally. ZEGOCLOUD connects these components through a unified architecture.
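
The four layers can be pictured as a chain of asynchronous stages, each feeding the next. The sketch below is illustrative only: every stage is a stub (the "audio" is just a string, and the language model is a one-line rule), and none of the function names come from the ZEGOCLOUD SDK. It shows the shape of a single conversational turn rather than a real implementation.

```typescript
// Illustrative stubs only; none of these names belong to a real SDK.
type Stage<In, Out> = (input: In) => Promise<Out>

interface Intent {
  text: string
  topic: 'weather' | 'general'
}

// Tiny keyword rule standing in for real language understanding
function classifyTopic(text: string): Intent['topic'] {
  return /weather/i.test(text) ? 'weather' : 'general'
}

// Layer 1: speech recognition (stub: the "audio" is already text)
const recognizeSpeech: Stage<string, string> = async (audio) => audio.trim()

// Layer 2: natural language processing
const understand: Stage<string, Intent> = async (text) => ({
  text,
  topic: classifyTopic(text),
})

// Layer 3: decision-making (stub in place of a language model)
const decide: Stage<Intent, string> = async (intent) =>
  intent.topic === 'weather'
    ? 'I cannot check live weather in this sketch.'
    : `You said: ${intent.text}`

// Layer 4: voice synthesis (stub: tags the reply instead of producing audio)
const synthesize: Stage<string, string> = async (reply) => `[synthesized audio] ${reply}`

// One conversational turn passes through all four layers in order
async function runVoiceTurn(audio: string): Promise<string> {
  const text = await recognizeSpeech(audio)
  const intent = await understand(text)
  const reply = await decide(intent)
  return synthesize(reply)
}

runVoiceTurn('  hello there  ').then((output) => console.log(output))
// prints "[synthesized audio] You said: hello there"
```

Keeping each layer behind the same Stage signature means a stub can be swapped for a real service without changing the code that runs the turn.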
