Preface

In previous articles, we have learned the audio basics. Today, we are going to move on to the video part.

Sounds play an irreplaceable role in our daily life, work, and entertainment, but we often say that “seeing is believing”. In fact, we have never relied solely on sound to learn about the world and communicate with others. In an era of “judging by appearance”, we often want to see things with our own eyes or meet face-to-face, and that’s what video is for.

An audio and video file can convey information in a more intuitive and diversified way than an audio file alone. Video is often likened to the flesh and blood of an audio file as it offers us more choices. All audio scenarios can be upgraded by adding video elements. For instance, a voice chat can be upgraded to a video chat, radio live streaming to social live streaming, and voice lessons to video lessons. Of course, video elements are not limited to “cameras”. Screen capture and film/TV resources can also be video data sources to meet the requirements of game live streaming, watching films together, and other scenarios.

Since “video” is so valuable, we, as audio and video app developers, have to get to the bottom of it.

Essence of video — Images

In previous lessons, we learned that sound is a wave produced by a vibrating object. We can hear a sound because our eardrums perceive the vibration of the sound wave. Thus, our study of sound started from the capture and digitization of sound waves. Similarly, we will begin our study of video with the essence of video and the way we perceive it.

How is a video produced?

You have probably come across flip comic books, which contain a static image on each page, usually looking plain and ordinary. However, when we flip the pages rapidly so that the images are shown in quick succession, the static images appear to be “moving”.

The mechanism behind this is the “persistence of vision” of the human eye: When we view an object, its image is first cast on the retina and then sent to the brain via the optic nerves. As a result, the brain perceives the image of the object. When the object is removed from view, the impression of the object does not disappear immediately but lingers for a short time (a few hundred milliseconds). When one image disappears and a new one appears fast enough, this creates the illusion of continuity and produces “moving images”, which is what we call “video”.

As flip comic books show, a video is in essence a series of images displayed in sequence, and we perceive the video by capturing the “colors” of these images with our eyes. Whether it is a black-and-white silent film or a color one, an image needs colors as its “flesh and bone”.

That’s why we begin with “colors”.

Flesh and bone — Colors

We know that we can see an object because the light reflected from the object reaches our eyes. Color is a “feeling” of light perceived by the brain. Sounds are “only to be sensed”, whereas colors are “only to be seen”. To describe colors consistently and enable digital circuits to identify and process color data, we need to define colors numerically.

The most common definition of color is the “three primary colors of light”. When light reaches our eyes, visual cells produce multiple signals, including three monochromatic light signals: Red, Green, and Blue. These three colors can reproduce a broad array of colors when added together in different proportions. We call this the RGB model. Based on the RGB model, we take the three color components and quantize each of them to complete the digital representation of colors.

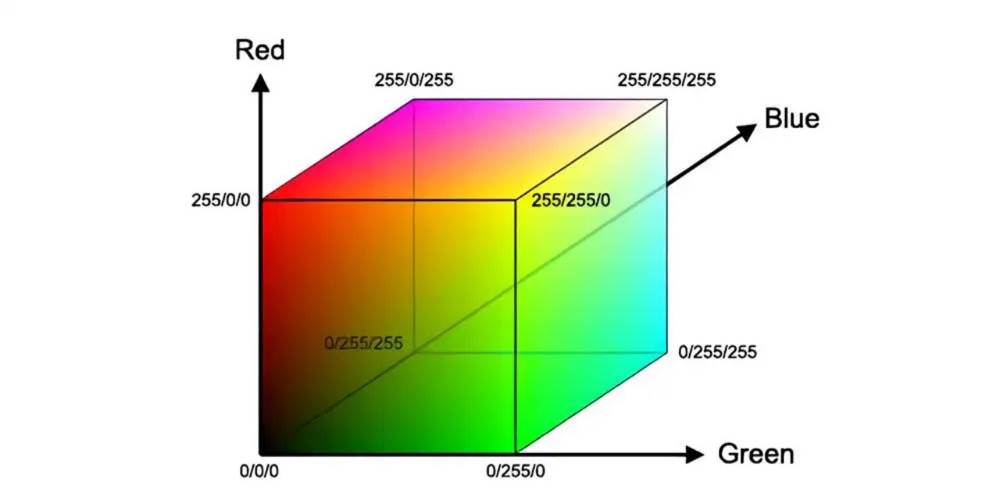

The concept of a “color model” is easy to picture as a multi-dimensional coordinate system. For example, the three components of the RGB model can be treated as the X, Y, and Z axes of a 3D space, so that each combination of component values is a point in that space and each point represents a color. Once we fix the range of each component (the coordinate range), all the value combinations within that range form a color space, which contains all the colors that can be represented by the color model.

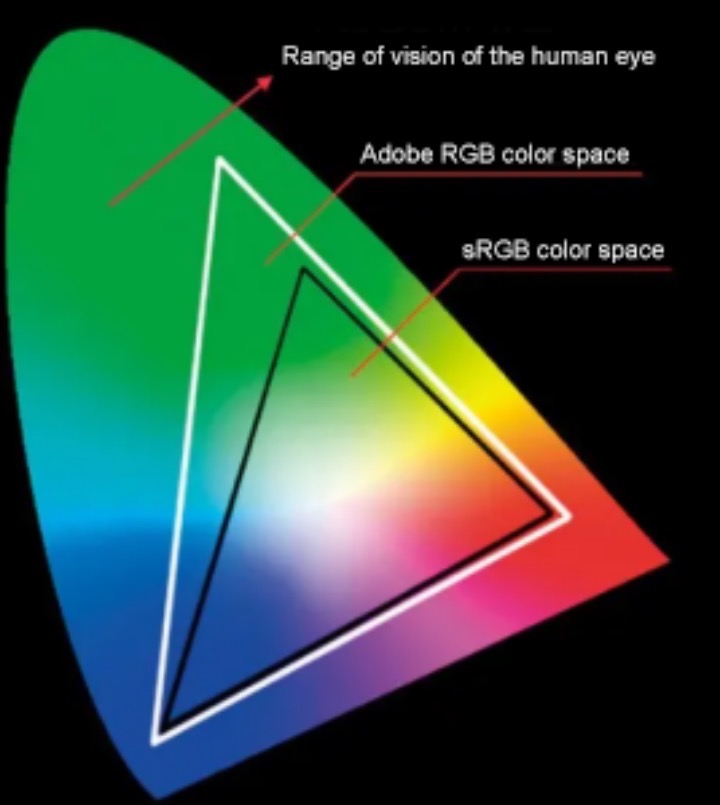

Figure 1

The human eye can distinguish only a limited number of colors. Given technical constraints, the number of colors that are used and displayed in real-life applications is limited, too. We usually do not need all the colors of a color model. In different scenarios, we generally use a “sub-space” of a color model as the standard color space (also referred to as the “color gamut”). Different software and hardware platforms can be compatible and interconnected as long as they agree to use colors within the same color space. Otherwise, they may display the same color differently.

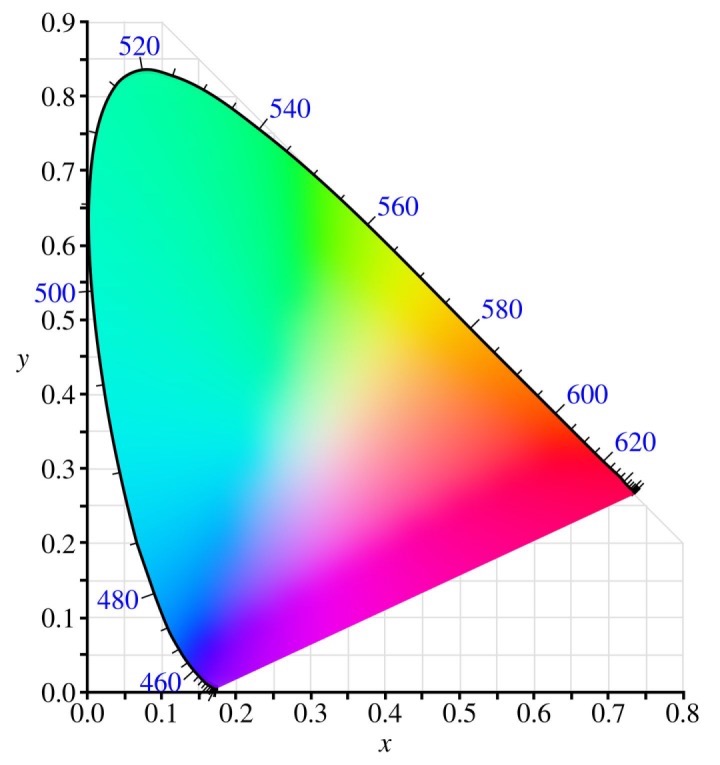

Among the color spaces developed for different areas, there is a special one: the CIE color space, which includes all colors perceived by the human eye. It is often used as a reference system for comparing different color spaces. When we map all the colors of the CIE color space onto a two-dimensional plane through a mathematical model, we get a horseshoe-shaped gamut diagram, with the closed area indicating all colors that can be represented by the CIE color space.

CIE color space: Range of colors perceived by the human eye

Of course, besides the RGB model, there are many other color models and color spaces, such as YUV, CMYK, HSV, and HSI. RGB and YUV are the ones mainly used in RTC applications. We will focus on these two color spaces later.

Before we go deep into a color space, we have to address one problem first: How do colors create an image?

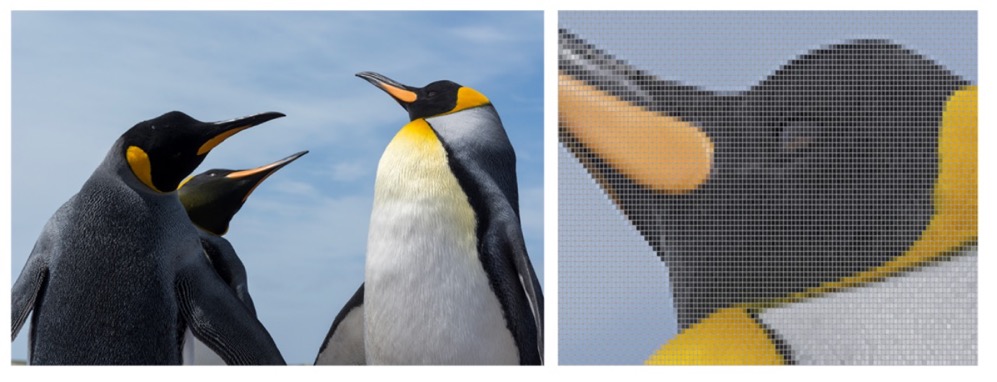

A video is essentially a series of images, but an image is not indivisible: its smallest unit is the pixel. The following two images show the relation between an image and its pixels:

Figure 3

The figure on the left is a full view of the image in normal size, and the figure on the right is a local view (the head of a penguin) after being enlarged several times.

We can see that the image of “penguin” is actually formed by a series of small squares of different or similar colors arranged in a certain pattern. These small squares are known as “pixels”.

A pixel is the most basic unit and a color dot of an image. We also call it a pixel dot. Each pixel dot records the value of each component (e.g. R, G, B) of a color space. A combination of the values decides the color represented by each pixel dot. Pixel dots representing specific colors are arranged in a certain pattern to create a complete image. In other words, “pixels” constitute the colors of an image.
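To make the pixel idea concrete, here is a minimal sketch of a tiny 2x2 image held as rows of pixel dots. It assumes each pixel stores 8-bit R, G, and B values (0 to 255); the names `image`, `pixel_at`, and `dimensions` are hypothetical and chosen only for illustration.

```python
# A tiny 2x2 image: each pixel dot stores its (R, G, B) component values.
# Assumption: 8-bit components, so each value lies in 0..255.
RED, GREEN, BLUE, WHITE = (255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 255)

# Pixels are arranged row by row; image[y][x] is the pixel at column x, row y.
image = [
    [RED, GREEN],   # row 0
    [BLUE, WHITE],  # row 1
]


def pixel_at(img, x, y):
    """Return the (R, G, B) tuple stored at column x, row y."""
    return img[y][x]


def dimensions(img):
    """Return (width, height) of the image in pixels."""
    return len(img[0]), len(img)


print(dimensions(image))      # width and height in pixels
print(pixel_at(image, 1, 0))  # the green pixel in the top-right corner
```

Real images are usually stored in flat byte buffers rather than nested lists, but the idea is the same: a fixed arrangement of pixel dots, each carrying its component values.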

That’s all about the concept of the pixel and its relation with the colors of an image. We will come back to it later. Now let’s return to the topic of color space and look more closely at RGB and YUV, the color spaces most frequently used in RTC applications.

1. RGB

First, let’s get started with the RGB color space.

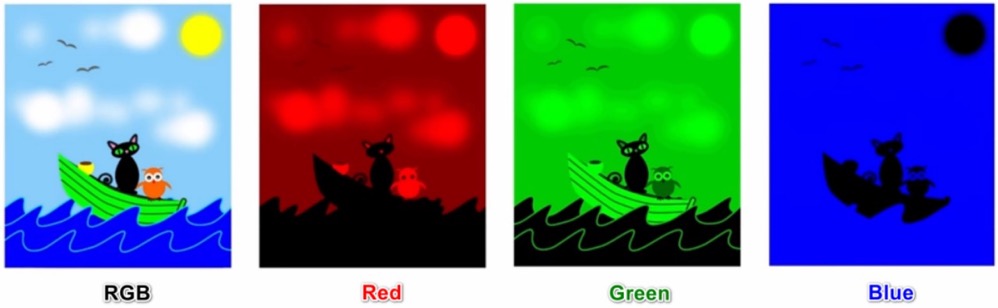

As we learned above, the RGB color model contains three components: Red, Green, and Blue. In this model, each pixel dot of an image is stored as three components (as shown in the figure below). With 8 bits per component, each component takes a value from 0 to 255, and different combinations of values make the pixel dot reproduce different colors. Based on this, the values (255, 0, 0), (0, 255, 0), and (0, 0, 255) represent the purest red, green, and blue, respectively. As special cases, when all three components are 0, the result is black, and when all three components are 255, the result is white.

Figure 4

The number of colors that can be represented by RGB with 8 bits per component is about 16.77 million, more than the number of colors the human eye is commonly estimated to distinguish (about 10 million). That’s why RGB is widely used in different display areas. However, in different areas and application scenarios, different RGB-based sub color spaces are defined according to the required range of colors. The most common ones are sRGB and Adobe RGB.
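The 16.77 million figure follows directly from the value range of the components. A quick check, assuming 8 bits for each of R, G, and B (the variable names are illustrative):

```python
# Assumption: 8 bits are used for each of the R, G, and B components.
BITS_PER_COMPONENT = 8

levels = 2 ** BITS_PER_COMPONENT   # 256 possible values per component (0..255)
total_colors = levels ** 3         # every combination of R, G, and B

print(total_colors)  # 16777216
```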

- sRGB and Adobe RGB

Developed by Microsoft and Hewlett-Packard in 1996, sRGB has been widely used on monitors, digital cameras, scanners, projectors, and other devices. You have probably seen indicators such as 99% sRGB and 100% sRGB in the product features when purchasing a monitor. These indicators refer to how much of the sRGB gamut the monitor covers: the higher the value, the more colors the monitor can display. Adobe RGB was developed by Adobe in 1998, two years after sRGB. Compared with sRGB, Adobe RGB was designed to also cover most of the colors achievable in CMYK printing (CMYK is a color model designed for the printing industry, with four components: Cyan, Magenta, Yellow, and Black), and it has been widely used in the graphic design industry together with Adobe's design software.

- Comparison of sRGB and Adobe RGB

To compare sRGB and Adobe RGB, we can use the gamut horseshoe diagram of the CIE color space as a reference.

As shown in the figure below, we can map the color ranges of CIE, sRGB, and Adobe RGB onto the same plane. The CIE color space forms the outermost area, and sRGB and Adobe RGB are the triangles inside it. We can see that sRGB and Adobe RGB have a smaller color range than CIE, but the coverage of Adobe RGB is wider than that of sRGB, especially in the green area (sRGB covers about 35% of CIE, whereas Adobe RGB covers about 50%), making Adobe RGB better in terms of video shooting, image processing, and fidelity.

However, even though Adobe RGB covers more colors than sRGB, sRGB is more widely applied. As the earlier standard and riding on the coattails of Windows and its large user base, sRGB has carried the day. Today, most Internet content, such as films and TV shows on video websites and the figures in this article, is displayed in sRGB. When displayed on a website, an Adobe RGB image may look faded (compared to its original colors) because some of its colors fall outside the color standard of the website, resulting in a loss of color information. Even so, for most users, sRGB is enough for daily scenarios. Adobe RGB is necessary only if you need a wider color gamut to achieve higher-quality color effects (e.g. professional graphic design or video shooting).

As the application scenarios of sRGB and Adobe RGB show, RGB has no doubt become part of our daily life. Despite its dominance in capture and display, RGB is somewhat constrained in video processing applications.

RGB has one notable feature: its three components are correlated and all indispensable. That means each pixel dot must store the values of R, G, and B at the same time to display a color properly, which makes the data hard to compress during encoding. As a result, RGB image data usually occupies more bandwidth in transmission and more memory in storage (we discussed the importance of space and bandwidth in the article “Know-what and Know-how of Audio — Audio Encoding and Decoding”). For a single image, the space and bandwidth costs are barely acceptable, but for a video they become a stumbling block: one second of video usually contains dozens of frames, so the space and bandwidth required are multiplied by the number of frames.
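A rough back-of-the-envelope calculation shows the scale of the problem. The sketch below assumes uncompressed 8-bit RGB (3 bytes per pixel) and uses 1920x1080 at 30 frames per second purely as an example; the function name `raw_rgb_bytes_per_second` is hypothetical.

```python
def raw_rgb_bytes_per_second(width, height, fps, bytes_per_pixel=3):
    """Bytes needed for one second of uncompressed RGB video.

    Assumption: 8 bits per component, so 3 bytes per pixel by default.
    """
    frame_bytes = width * height * bytes_per_pixel  # one full frame
    return frame_bytes * fps                        # grows with the frame count


# Example resolution and frame rate (illustrative values, not from the article).
one_frame = raw_rgb_bytes_per_second(1920, 1080, fps=1)
one_second = raw_rgb_bytes_per_second(1920, 1080, fps=30)
megabits_per_second = one_second * 8 / 1_000_000

print(one_frame)            # 6220800 bytes, about 6.2 MB per frame
print(one_second)           # 186624000 bytes, about 187 MB per second
print(megabits_per_second)  # 1492.992 Mbit/s before any compression
```

Under these assumptions a single second of raw video needs roughly 1.5 Gbit/s, which is why reducing the data volume matters so much for video.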

Therefore, we need another color space to replace RGB for video processing: the YUV color space. That’s what we’re going to discuss next.

2. YUV

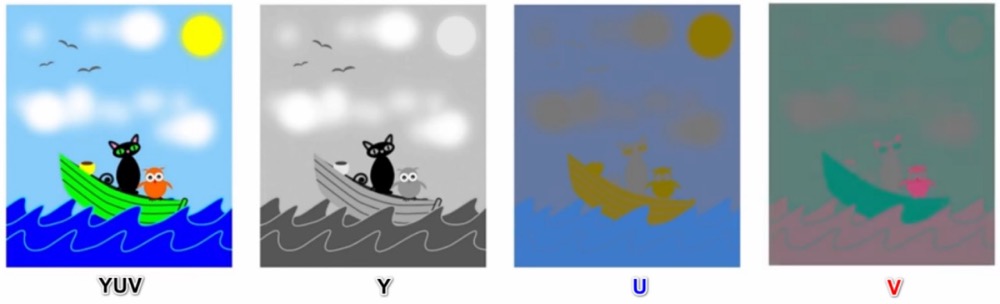

Given that the correlation among the three components of RGB restricts its application in video, YUV becomes the top alternative. The YUV color space also has three components: Y, U, and V. Unlike RGB, it does not need all three components at once to represent an image.

Y represents luminance and decides whether a pixel is bright or dark (or black or white) and to what extent. Recording only the Y values of varying brightness is enough to represent the outline of an image (as shown by Y in the following figure). U and V represent chrominance and define the hue and saturation (as shown by U and V in the following figure). A pixel with Y, U, and V adds colors to the “black and white, bright and dark” outline of the image.

It can be seen that even without U and V, we can “recognize” the basic content of an image with Y only, albeit in black and white. U and V enrich the black-and-white image with colors. This sounds somewhat familiar. Indeed, it reminds us of the relation between black-and-white and color TVs.

Emerging during the transition from black-and-white TV to color TV, YUV can not only record the color signals completely, but also represent a black-and-white image by recording the signals of the Y channel alone, thus making black-and-white and color TVs compatible and interconnected. Moreover, the human eye is more sensitive to brightness (Y) than to hue and saturation (U and V); thus we are far better at recognizing the brightness than the hue and saturation of an image. This means we can sample fewer U and V components while retaining the full Y component, reducing the data volume while keeping visual distortion to a minimum. This is of great help to the storage and transmission of video data, and it makes YUV a better fit for video processing than RGB.
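To see how much data this saves, the sketch below counts samples per frame when every pixel keeps its own Y but a block of neighboring pixels shares one U and one V. The 2x2 block size and the function name `yuv_sample_count` are assumptions made for illustration; the actual sampling methods are a separate topic.

```python
def yuv_sample_count(width, height, chroma_block=(1, 1)):
    """Count Y, U, and V samples in one frame.

    Every pixel keeps its own Y sample. One U and one V sample are shared
    by each block of chroma_block = (block_width, block_height) pixels.
    """
    block_w, block_h = chroma_block
    y_samples = width * height
    # Ceiling division so partial blocks at the edges still get a sample.
    chroma_w = -(-width // block_w)
    chroma_h = -(-height // block_h)
    return y_samples + 2 * chroma_w * chroma_h


full = yuv_sample_count(1920, 1080)                  # U and V for every pixel
shared = yuv_sample_count(1920, 1080, (2, 2))        # U and V per 2x2 block

print(full)           # 6220800 samples
print(shared)         # 3110400 samples
print(shared / full)  # 0.5
```

With one U and one V shared across each 2x2 block, the frame needs half the samples while every pixel still keeps its own brightness value.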

3. Conversion between YUV and RGB

Now that you know the basics and application scenarios of RGB and YUV, you may have a question. RGB is mainly used for image capture and display, whereas YUV is mainly used for image storage, processing, and transmission. However, in a complete application scenario, video capture, storage, processing, transmission, and display are all interrelated and indispensable. Is there any conflict if we use RGB and YUV at different stages?

The answer is yes. The conflict arises from the use of two color spaces. The solution is “color space conversion”.

Conversion between RGB and YUV takes place wherever the video pipeline switches from one color space to the other.

A video capture device generally outputs RGB data, which needs to be converted into YUV data for processing, encoding, and transmission. Similarly, a display device first obtains YUV data from the transmission and decoding stages and then converts it into RGB data for display.

We don’t need to dig into every detail of how RGB and YUV are converted into each other. We just need to understand that color space conversion follows a well-defined standard and consists of a few mathematical operations. However, there are many such standards, for example BT.601 and BT.709, and the conversion formula varies from one standard to another. If you are interested in this part, you can check the related documents to learn more.
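For readers who want to see what such a conversion looks like, here is a minimal sketch. It uses the luma coefficients published in BT.601 and BT.709 and assumes 8-bit full-range values with U and V centered at 128; real pipelines often use a limited range and integer arithmetic, which are not shown. The function and table names are hypothetical.

```python
# Luma coefficients (Kr, Kb) defined by each standard; Kg = 1 - Kr - Kb.
COEFFS = {
    "BT.601": (0.299, 0.114),
    "BT.709": (0.2126, 0.0722),
}


def rgb_to_yuv(r, g, b, standard="BT.601"):
    """Convert 8-bit full-range RGB to (Y, U, V) floats, U and V centered at 128."""
    kr, kb = COEFFS[standard]
    kg = 1.0 - kr - kb
    y = kr * r + kg * g + kb * b              # luminance: weighted sum of R, G, B
    u = (b - y) / (2.0 * (1.0 - kb)) + 128.0  # blue-difference chrominance
    v = (r - y) / (2.0 * (1.0 - kr)) + 128.0  # red-difference chrominance
    return y, u, v


def yuv_to_rgb(y, u, v, standard="BT.601"):
    """Inverse of rgb_to_yuv for the same standard (no rounding or clamping)."""
    kr, kb = COEFFS[standard]
    kg = 1.0 - kr - kb
    r = y + 2.0 * (1.0 - kr) * (v - 128.0)
    b = y + 2.0 * (1.0 - kb) * (u - 128.0)
    g = (y - kr * r - kb * b) / kg
    return r, g, b


# The same pure green pixel yields a different Y under each standard.
print(rgb_to_yuv(0, 255, 0, "BT.601"))
print(rgb_to_yuv(0, 255, 0, "BT.709"))

# Converting back with the matching standard recovers the original color.
print(yuv_to_rgb(*rgb_to_yuv(200, 100, 50)))
```

The example also hints at why both ends of a pipeline must agree on the standard: decoding BT.709 data with BT.601 coefficients would shift the colors.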

In addition to the basic principles, we also have to look at the “sampling method” and “storage format” of YUV and RGB, which are both important and complicated. We will go into detail in the next article.

Summary

In this article, we covered the first part of the basics of image and color. You should now understand the relations between video, image, pixel, and color, as well as the basic principles of RGB and YUV, their differences, and how they relate to each other.
