Object segmentation technology makes background processing effects smoother.

In this article, we dive into this concept and present the solution provided by ZEGOCLOUD.

Background

With the growing demand for online communication, real-time communication has spread to a wide range of applications in recent years, and the requirements for communication quality keep rising. Beyond basic technical capabilities such as sound quality, video quality, and stability, users now expect more personalized and refined features in real-time interaction. One example is processing the background behind each participant, for reasons such as the following:

1. To make the background look neat: when their surroundings are messy, meeting participants want the conferencing software to blur or replace the background so that it looks tidier.

2. To make live streaming more immersive: to protect their privacy and create a fitting atmosphere for the live-streaming room, hosts want the streaming software to replace the background with themes relevant to the stream, such as e-sports or social themes.

3. To focus on showcasing products: during live-streaming sales, merchants want to put product information front and center by replacing the background with product images or videos.

The wave of online communication has clearly created a growing need for personalized background processing, and businesses urgently need to provide it.

What is object segmentation technology?

Object segmentation is an add-on capability of the ZEGO Express SDK. It uses AI algorithms to identify the object (the main subject) in a video frame and assigns transparency information to each pixel: pixels inside the object area are “opaque,” while pixels outside it are “transparent,” which visually separates the object from the original video. ZEGOCLOUD provides two segmentation capabilities, “green-screen background segmentation” and “arbitrary background segmentation.” Processing the transparency information of the segmented pixels in different ways produces different effects.
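To make the per-pixel idea concrete, here is a minimal Python sketch. It is not the ZEGO Express SDK API: `attach_alpha` is a hypothetical helper, and the `probabilities` grid stands in for the per-pixel output of an assumed segmentation model (1.0 means certainly object, 0.0 means certainly background).

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]           # (R, G, B), each 0-255
RGBAPixel = Tuple[int, int, int, int]  # (R, G, B, A); A = 255 opaque, 0 transparent


def attach_alpha(frame: List[List[Pixel]],
                 probabilities: List[List[float]],
                 soft: bool = False,
                 threshold: float = 0.5) -> List[List[RGBAPixel]]:
    """Turn a per-pixel object probability map into an alpha channel.

    Hard mode: pixels at or above `threshold` become fully opaque, the rest
    fully transparent. Soft mode: the probability is scaled to 0-255 directly,
    which keeps partially transparent edges such as hair.
    """
    rgba: List[List[RGBAPixel]] = []
    for row_px, row_p in zip(frame, probabilities):
        out_row: List[RGBAPixel] = []
        for (r, g, b), p in zip(row_px, row_p):
            if soft:
                alpha = round(max(0.0, min(1.0, p)) * 255)
            else:
                alpha = 255 if p >= threshold else 0
            out_row.append((r, g, b, alpha))
        rgba.append(out_row)
    return rgba


# Usage: one row with an object pixel and a background pixel.
frame = [[(200, 150, 120), (30, 30, 30)]]
probs = [[0.93, 0.08]]
print(attach_alpha(frame, probs))             # [[(200, 150, 120, 255), (30, 30, 30, 0)]]
print(attach_alpha(frame, probs, soft=True))  # [[(200, 150, 120, 237), (30, 30, 30, 20)]]
```

The hard threshold gives crisp cutouts; keeping the soft values is usually preferable at object boundaries, where a pixel is partly object and partly background.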

1. Arbitrary background segmentation

Arbitrary background segmentation separates the object from whatever real scene it is in. It does not require any special environment, so users can use it anytime. ZEGOCLOUD selected 40 scenes, such as airports, living rooms, offices, bedrooms, train stations, and theaters, and used thousands of indoor and outdoor images from them as training data, so users get reliable segmentation results in a wide variety of complex scenarios.

Because of power consumption and performance limits on the platforms it runs on, this kind of segmentation usually needs a lightweight image cutout (matting) algorithm. Lightweight algorithms, however, have very few parameters, so their overall performance tends to drop. On continuous video frames, they are also affected by lighting changes and encoding compression, which shows up as flickering. To counter this, ZEGOCLOUD built a large-scale video segmentation dataset of over 100,000 real video samples with varying lighting, bitrates, and resolutions, which has effectively reduced flickering.
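The source does not say how ZEGOCLOUD measures flicker. As an illustrative assumption, one simple proxy is the average per-pixel change of the alpha mask between consecutive frames of a clip in which the subject holds still: a stable cutout scores near 0, and a flickering one scores higher. The helper names below are hypothetical.

```python
from typing import List, Sequence

AlphaMask = List[List[float]]  # per-pixel alpha in [0.0, 1.0]


def mean_abs_diff(a: AlphaMask, b: AlphaMask) -> float:
    """Average absolute alpha difference between two masks of the same size."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for x, y in zip(row_a, row_b):
            total += abs(x - y)
            count += 1
    return total / count if count else 0.0


def flicker_score(masks: Sequence[AlphaMask]) -> float:
    """Mean frame-to-frame alpha change over a clip (0.0 = perfectly stable)."""
    if len(masks) < 2:
        return 0.0
    diffs = [mean_abs_diff(prev, cur) for prev, cur in zip(masks, masks[1:])]
    return sum(diffs) / len(diffs)


# Usage: the same static scene segmented consistently vs. inconsistently.
stable = [[[1.0, 0.0]], [[1.0, 0.0]], [[1.0, 0.0]]]
flickery = [[[1.0, 0.0]], [[1.0, 1.0]], [[1.0, 0.0]]]
print(flicker_score(stable))    # 0.0
print(flicker_score(flickery))  # 0.5
```

The metric only makes sense when the subject is actually still; real motion also changes the mask, so in practice such a score would be computed on static clips or combined with motion compensation.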

How ZEGOCLOUD improves object segmentation

To improve the accuracy of video segmentation, ZEGOCLOUD used a large amount of video data as background data. During training, foreground objects were randomly superimposed onto these backgrounds, and the dataset was further augmented with random color offsets, random grayscale conversion, random affine transformations, random Gaussian blur, and random noise. These techniques effectively minimize the impact of dynamic objects in real scenes on cutout quality.
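The compositing-plus-augmentation recipe can be sketched as follows. This is an illustration under assumptions, not ZEGOCLOUD's training pipeline: images are tiny lists of RGB tuples, the offset range, grayscale probability, and noise level are arbitrary example values, the helper names are hypothetical, and the affine-transform and Gaussian-blur steps are omitted for brevity.

```python
import random
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Mask = List[List[float]]  # 1.0 = foreground object, 0.0 = background


def clamp(v: float) -> int:
    """Round and clip a channel value to the valid 0-255 range."""
    return max(0, min(255, int(round(v))))


def composite(fg: Image, mask: Mask, bg: Image) -> Image:
    """Superimpose the foreground onto a new background: a*fg + (1-a)*bg."""
    return [
        [(clamp(a * fp[0] + (1 - a) * bp[0]),
          clamp(a * fp[1] + (1 - a) * bp[1]),
          clamp(a * fp[2] + (1 - a) * bp[2]))
         for fp, bp, a in zip(frow, brow, mrow)]
        for frow, brow, mrow in zip(fg, bg, mask)
    ]


def augment(img: Image, rng: random.Random) -> Image:
    """Random per-channel color offset, optional grayscale, then Gaussian noise."""
    offset = [rng.uniform(-20, 20) for _ in range(3)]
    to_gray = rng.random() < 0.2  # example probability: 20% of samples
    out: Image = []
    for row in img:
        new_row: List[Pixel] = []
        for r, g, b in row:
            px = [r + offset[0], g + offset[1], b + offset[2]]
            if to_gray:
                y = 0.299 * px[0] + 0.587 * px[1] + 0.114 * px[2]  # BT.601 luma
                px = [y, y, y]
            px = [c + rng.gauss(0, 5) for c in px]
            new_row.append((clamp(px[0]), clamp(px[1]), clamp(px[2])))
        out.append(new_row)
    return out


def make_training_sample(fg: Image, mask: Mask, backgrounds: Sequence[Image],
                         rng: random.Random) -> Tuple[Image, Mask]:
    """Pick a random background, composite, augment; the label mask is unchanged."""
    bg = rng.choice(backgrounds)
    return augment(composite(fg, mask, bg), rng), mask


# Usage: a red "person" pixel next to a background pixel, two candidate backgrounds.
rng = random.Random(0)
fg = [[(255, 0, 0), (255, 0, 0)]]
mask = [[1.0, 0.0]]
bgs = [[[(0, 0, 255), (0, 0, 255)]], [[(0, 255, 0), (0, 255, 0)]]]
image, label = make_training_sample(fg, mask, bgs, rng)
print(label == mask)  # True
```

Color augmentations do not move pixels, so the original mask stays a valid label; a geometric step such as an affine transform would have to be applied identically to both the image and the mask.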

ZEGOCLOUD also built inter-frame relationships into the algorithm, embedding temporal information that links each frame to the frames before and after it. Implicit information from the previous frame is used to constrain the prediction for the next frame, so objects fade in and fade out smoothly as they appear and disappear. This greatly improves the overall visual experience.
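ZEGOCLOUD embeds these temporal relationships implicitly inside its model. The sketch below swaps in a much simpler, explicit technique, an exponential moving average over the alpha mask, purely to show how letting the previous frame constrain the next turns an abrupt appearance into a fade-in. The class and its `momentum` parameter are hypothetical.

```python
from typing import List, Optional

AlphaMask = List[List[float]]  # per-pixel alpha in [0.0, 1.0]


class TemporalAlphaSmoother:
    """Exponential moving average of alpha masks across frames.

    `momentum` sets how strongly the previous output constrains the next one:
    0.0 disables smoothing, values close to 1.0 give slow, gentle fades.
    """

    def __init__(self, momentum: float = 0.5) -> None:
        if not 0.0 <= momentum < 1.0:
            raise ValueError("momentum must be in [0, 1)")
        self.momentum = momentum
        self.prev: Optional[AlphaMask] = None

    def update(self, raw: AlphaMask) -> AlphaMask:
        """Blend the model's raw mask for this frame with the previous output."""
        if self.prev is None:
            self.prev = [row[:] for row in raw]
        else:
            m = self.momentum
            self.prev = [
                [m * p + (1 - m) * r for p, r in zip(prow, rrow)]
                for prow, rrow in zip(self.prev, raw)
            ]
        return [row[:] for row in self.prev]


# Usage: the object appears abruptly in the second frame.
# Prints [[0.0]], then [[0.5]], [[0.75]], [[0.875]]: a fade-in instead of a pop.
smoother = TemporalAlphaSmoother(momentum=0.5)
for raw in ([[0.0]], [[1.0]], [[1.0]], [[1.0]]):
    print(smoother.update(raw))
```

The trade-off of a fixed average is lag: a high momentum suppresses flicker but makes fast motion trail behind, which is one reason a learned temporal model can do better than this simple filter.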

2. Green-screen segmentation

Green-screen segmentation separates the object from a green-screen backdrop. It requires users to set up a green screen, and in return it delivers better edge quality than arbitrary background segmentation.
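For contrast with ZEGOCLOUD's AI approach, the traditional rule-based chroma key derives alpha from how strongly green dominates a pixel. This is a classical technique shown for illustration, not ZEGOCLOUD's algorithm; `chroma_key_alpha` and its `tolerance` value are hypothetical.

```python
from typing import Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def chroma_key_alpha(pixel: Pixel, tolerance: float = 100.0) -> float:
    """Classic green-screen rule: the more G exceeds both R and B, the more transparent.

    Returns alpha in [0, 1]: 1.0 = fully object, 0.0 = fully green screen.
    `tolerance` is the green excess at which a pixel becomes fully transparent.
    """
    r, g, b = pixel
    green_excess = g - max(r, b)
    if green_excess <= 0:
        return 1.0
    return max(0.0, 1.0 - green_excess / tolerance)


print(chroma_key_alpha((0, 255, 0)))      # pure green screen -> 0.0
print(chroma_key_alpha((200, 150, 120)))  # skin tone -> 1.0
print(chroma_key_alpha((100, 150, 100)))  # edge pixel mixed with green -> 0.5
```

The mixed edge pixel gets partial alpha but keeps its greenish color, which is exactly the kind of residue that appears as green spill.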

A common problem in green-screen segmentation is green spill: green residue left around the object because its edges cannot be predicted accurately. Green spill can result from poorly arranged lighting and from the difficulty of processing the object's boundaries cleanly. In addition, fast movement causes motion blur, which mixes the green-screen color into the object's color.

To solve these problems, ZEGOCLOUD developed a highly lightweight AI-based green-screen cutout algorithm. Its model size is only 1 KB, and on the Snapdragon 855 platform the CPU time is 2 ms and the GPU time is 1 ms.
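To put these timings in perspective, assume (the source does not say) that they are per-frame costs and that the video runs at 30 fps. The per-frame time budget and the share the cutout consumes are then:

```latex
t_{\text{frame}} = \frac{1000\ \text{ms}}{30} \approx 33.3\ \text{ms},\qquad
\frac{2\ \text{ms}}{33.3\ \text{ms}} \approx 6\%\ \text{(CPU)},\qquad
\frac{1\ \text{ms}}{33.3\ \text{ms}} \approx 3\%\ \text{(GPU)}
```

Under those assumptions, most of each frame's budget remains available for capture, encoding, and other effects.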

ZEGOCLOUD object segmentation

To eliminate green spill, ZEGOCLOUD added a dedicated green-suppression module to the algorithm. It dynamically learns the mapping between green-spill colors and normal colors. Yellow and sky blue are particularly difficult to handle because both contain a strong green component, so during training ZEGOCLOUD randomly pasted color blocks of these two colors, in varying shades, onto the images, forcing the algorithm to preserve them.

With these strategies, ZEGOCLOUD’s green-screen cutout algorithm completely solves the green spill problem. It is also highly tolerant of imperfect lighting and green screens that are not perfectly smooth, which significantly lowers the barrier to entry and the cost for users.
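The learned green-suppression module is not public, but a common hand-written despill rule shows why yellow and sky blue need special care. The rule below (a classical technique, not ZEGOCLOUD's method; `despill_average` is a hypothetical name) caps the green channel at the mean of red and blue. It cleans a green-tinted skin tone, but it also pulls green out of legitimately yellow and sky-blue pixels.

```python
from typing import Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def despill_average(pixel: Pixel) -> Pixel:
    """Classic green spill suppression: G may not exceed the average of R and B."""
    r, g, b = pixel
    limit = (r + b) // 2
    return (r, min(g, limit), b)


print(despill_average((180, 170, 120)))  # green-tinted skin -> (180, 150, 120)
print(despill_average((230, 220, 40)))   # yellow -> (230, 135, 40), now orange
print(despill_average((135, 206, 235)))  # sky blue -> (135, 185, 235), shifted toward blue
print(despill_average((128, 128, 128)))  # neutral gray -> unchanged
```

A fixed rule cannot tell spill from a genuinely yellow or sky-blue object; training with pasted color blocks of those colors is aimed at exactly this trade-off.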

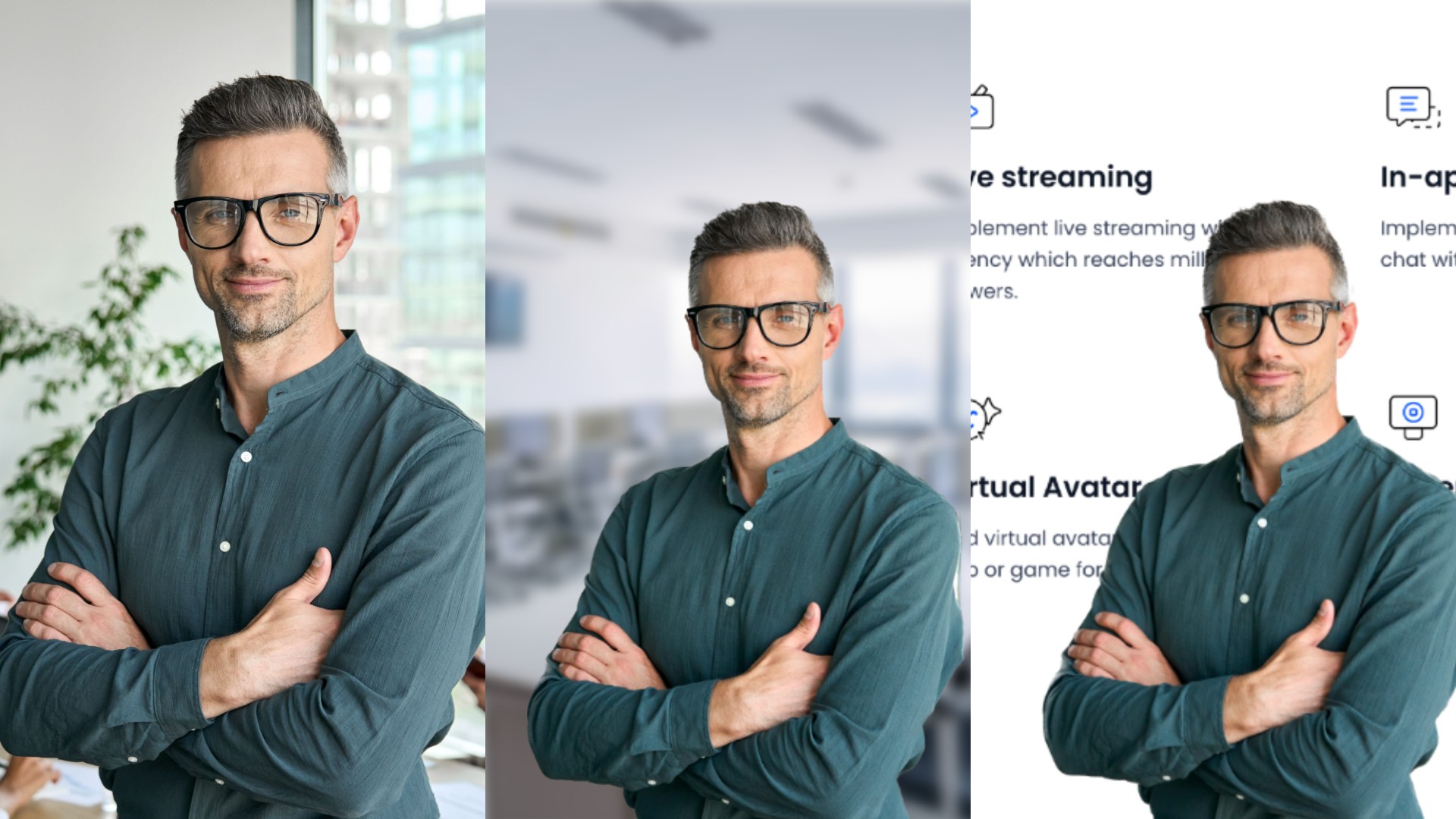

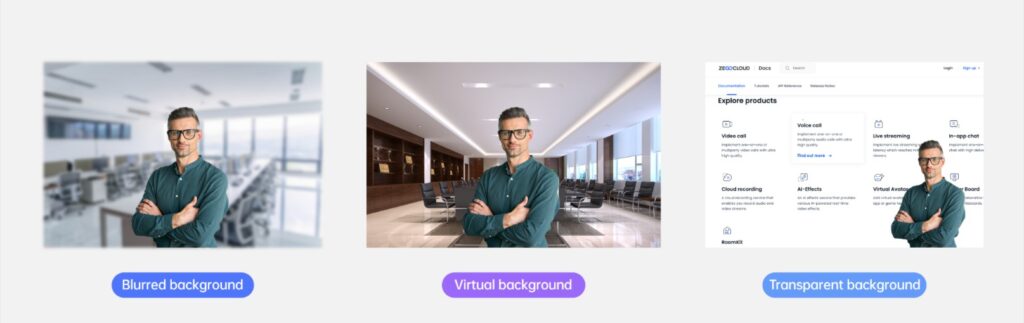

3. Multiple effect options, one-click background replacement

After the algorithm separates the main object from the background, users can apply different background processing methods. ZEGOCLOUD groups these effects into three categories: blurred background, virtual background, and transparent background.

Blurred background: Blurs the real background outside the object area in the frame.

Virtual background: Replaces the area outside the object with custom images or colors.

Transparent background: Makes the frame’s background transparent and then mixes the frame with other local video content into one stream. This suits, for example, presentation mode or live-streamed product demonstrations.

A blurred background protects user privacy and, in meetings, keeps attention on the participants themselves. Virtual backgrounds are more playful than blurred ones and fit almost every applicable scenario, which makes them more versatile.
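All three effects come down to what is done with the pixels that the alpha mask marks as background. The sketch below illustrates this with tiny images stored as lists of RGB tuples. `apply_background` and its mode strings are hypothetical and do not correspond to ZEGO Express SDK enums, and a simple box blur stands in for whatever blur the SDK actually uses.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Alpha = List[List[float]]  # 1.0 = object, 0.0 = background


def box_blur(img: Image, radius: int = 1) -> Image:
    """Average each pixel with its neighbors inside a (2r+1) x (2r+1) window."""
    h, w = len(img), len(img[0])
    out: Image = []
    for y in range(h):
        row: List[Pixel] = []
        for x in range(w):
            acc = [0, 0, 0]
            n = 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    for c in range(3):
                        acc[c] += img[yy][xx][c]
                    n += 1
            row.append((round(acc[0] / n), round(acc[1] / n), round(acc[2] / n)))
        out.append(row)
    return out


def blend(fg: Image, alpha: Alpha, bg: Image) -> Image:
    """Standard alpha compositing: out = a * fg + (1 - a) * bg."""
    return [
        [(round(a * fp[0] + (1 - a) * bp[0]),
          round(a * fp[1] + (1 - a) * bp[1]),
          round(a * fp[2] + (1 - a) * bp[2]))
         for fp, bp, a in zip(frow, brow, arow)]
        for frow, brow, arow in zip(fg, bg, alpha)
    ]


def apply_background(frame: Image, alpha: Alpha, mode: str,
                     replacement: Optional[Image] = None) -> list:
    if mode == "blur":  # keep the object sharp, blur everything behind it
        return blend(frame, alpha, box_blur(frame, radius=2))
    if mode == "virtual":  # swap the background for a custom image or color
        if replacement is None:
            raise ValueError("virtual mode needs a replacement image")
        return blend(frame, alpha, replacement)
    if mode == "transparent":  # emit alpha as a 4th channel for later mixing
        return [[(r, g, b, round(a * 255)) for (r, g, b), a in zip(frow, arow)]
                for frow, arow in zip(frame, alpha)]
    raise ValueError(f"unknown mode: {mode}")


# Usage: the left pixel is the person, the right pixel is background.
frame = [[(100, 100, 100), (200, 200, 200)]]
alpha = [[1.0, 0.0]]
print(apply_background(frame, alpha, "blur"))         # [[(100, 100, 100), (150, 150, 150)]]
print(apply_background(frame, alpha, "virtual", [[(0, 255, 0), (0, 255, 0)]]))
print(apply_background(frame, alpha, "transparent"))  # [[(100, 100, 100, 255), (200, 200, 200, 0)]]
```

Blur and virtual modes produce an ordinary opaque frame, while transparent mode defers the final blend to whoever mixes the stream later.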

How is object segmentation achieved?

Alpha data transmission makes more immersive interaction possible.

After the object segmentation module produces the Alpha (transparency) information for an image, ZEGOCLOUD combines Alpha data transmission with Alpha data rendering. The Alpha information travels with the image to the receiving user, who can then render it correctly when playing the stream.

Alpha is an image channel that describes the transparency of pixels. In image processing software, the alpha channel controls the transparency of each pixel in an image. Alpha data transmission means sending this additional alpha channel alongside the color data so that transparency information survives digital signal transmission. The method is commonly used for video signals and computer graphics and enables more refined image effects.

Transmitting the Alpha channel addresses a limitation of real-time communication stream-publishing solutions: they cannot handle transparent backgrounds. Because the transparency information reaches the receiving end, the receiver can render one or more images with transparent backgrounds, which makes it possible to transmit video streams with transparent backgrounds.
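The source does not describe ZEGOCLOUD's wire format. One widely used workaround when a video codec has no alpha plane is to store the alpha mask as an extra grayscale image next to the color frame (here, below it), encode the taller frame normally, and split it again at the receiver. A minimal sketch of that packing, under that assumption and with hypothetical function names:

```python
from typing import List, Tuple

RGBA = Tuple[int, int, int, int]
RGB = Tuple[int, int, int]


def pack_alpha_below(frame: List[List[RGBA]]) -> List[List[RGB]]:
    """Sender side: color rows on top, alpha stored as gray rows underneath."""
    color = [[(r, g, b) for r, g, b, _ in row] for row in frame]
    alpha = [[(a, a, a) for _, _, _, a in row] for row in frame]
    return color + alpha


def unpack_alpha_below(packed: List[List[RGB]]) -> List[List[RGBA]]:
    """Receiver side: split the double-height frame back into RGBA."""
    h = len(packed) // 2
    color, alpha = packed[:h], packed[h:]
    return [
        [(r, g, b, a) for (r, g, b), (a, _, _) in zip(crow, arow)]
        for crow, arow in zip(color, alpha)
    ]


# Usage: one opaque pixel and one transparent pixel survive the round trip.
frame = [[(10, 20, 30, 255), (40, 50, 60, 0)]]
packed = pack_alpha_below(frame)  # plain RGB, so any ordinary codec can carry it
print(packed)                              # [[(10, 20, 30), (40, 50, 60)], [(255, 255, 255), (0, 0, 0)]]
print(unpack_alpha_below(packed) == frame)  # True
```

With a lossy codec the alpha rows come back slightly perturbed, so a receiver would typically clamp or threshold them before rendering.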

Combining Alpha data transmission with object segmentation extends these capabilities to more multi-person interactive scenarios. There are two common scenarios:

Scenario 1: Object segmentation in real-time multi-person interaction

When multiple people are in different locations, object segmentation and Alpha channel transmission make it possible for them to appear in the same frame and interact in real time. For real-time multi-person interaction in the same frame, ZEGOCLOUD offers two solutions for companies to choose from:

- The first solution performs Alpha rendering while playing streams. Each viewer renders the objects from every stream's frames onto their local view. This suits scenarios with high real-time requirements, such as video calls and video conferences.

- The second solution performs Alpha rendering on the server side. The stream-mixing service renders the frames of multiple hosts onto the same background image and outputs the result to the CDN. The frames the audience actually plays therefore have no Alpha channel, and the audience's stream-playing SDK needs no modifications.
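Whether it runs in each viewer's client (first solution) or in the mixing service (second solution), the rendering step boils down to alpha-compositing every host's segmented frame onto a shared background. The sketch below shows that step under simplifying assumptions: frames are small lists of pixels, each layer's position is an (x, y) offset, and `mix_layers` is a hypothetical helper, not a ZEGOCLOUD mixing API.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def mix_layers(background: List[List[RGB]],
               layers: List[Tuple[List[List[RGBA]], int, int]]) -> List[List[RGB]]:
    """Draw each RGBA layer at offset (x, y) over the background, in list order.

    The result is plain RGB: the alpha information is consumed here, so the
    output can be encoded and played like any ordinary stream.
    """
    out = [list(row) for row in background]  # copy so the input is not modified
    h, w = len(out), len(out[0])
    for layer, ox, oy in layers:
        for ly, row in enumerate(layer):
            for lx, (r, g, b, a) in enumerate(row):
                x, y = ox + lx, oy + ly
                if not (0 <= x < w and 0 <= y < h):
                    continue  # parts of a host that fall outside the canvas are clipped
                t = a / 255.0
                br, bgc, bb = out[y][x]
                out[y][x] = (round(t * r + (1 - t) * br),
                             round(t * g + (1 - t) * bgc),
                             round(t * b + (1 - t) * bb))
    return out


# Usage: two hosts placed side by side on a black background.
background = [[(0, 0, 0), (0, 0, 0), (0, 0, 0)]]
host_a = [[(255, 0, 0, 255), (255, 0, 0, 0)]]  # opaque pixel, then transparent pixel
host_b = [[(0, 0, 255, 255)]]
print(mix_layers(background, [(host_a, 0, 0), (host_b, 2, 0)]))
# [[(255, 0, 0), (0, 0, 0), (0, 0, 255)]]
```

Layer order matters: later layers are drawn on top, so a host listed last appears in front where hosts overlap.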

Scenario 2: More flexible presentation mode

When the presenter’s image covers the slides or demonstration content, object segmentation and Alpha channel transmission let other participants freely drag the presenter’s video image out of the way, so the presentation content stays fully visible.

Diverse background modes to meet scenario requirements

Whether users choose virtual or blurred backgrounds, their demand for background replacement shows that real-time interaction vendors must keep refining their offerings. Combining object segmentation with Alpha channel transmission separates the object from the frame and then fuses it with virtual scenes. This breaks down the traditional rectangular boundaries between users in real-time interactive video and makes the experience more fun.
