AI Proactive Speech: Proactively Invoke LLM or TTS

Large Language Models (LLMs) do not produce text or voice output on their own initiative. Developers therefore need to trigger the AI agent to speak according to rules they define, which makes real-time interactions more engaging. For example, if the user has not spoken for 5 seconds, the AI agent can speak a sentence through Text-to-Speech (TTS).
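The 5-second rule above could be tracked on the business side with a small helper like the one below. This is only a sketch: the class name `SilenceDetector` and its methods are hypothetical and not part of any ZEGOCLOUD SDK, and how your application learns that the user has spoken is left to you. Timestamps are passed in explicitly so that the logic stays easy to test.

```java
/** Tracks the last time the user spoke and reports when a proactive prompt is due. */
class SilenceDetector {
    private final long thresholdMs; // e.g. 5000 for the 5-second rule
    private long lastSpeechMs;      // timestamp of the most recent user speech
    private boolean fired;          // ensures a single trigger per silent period

    SilenceDetector(long thresholdMs, long startMs) {
        this.thresholdMs = thresholdMs;
        this.lastSpeechMs = startMs;
    }

    /** Call whenever the user speaks; this restarts the silence window. */
    void onUserSpeech(long nowMs) {
        lastSpeechMs = nowMs;
        fired = false;
    }

    /** Returns true once per silent period, as soon as the threshold has elapsed. */
    boolean shouldTrigger(long nowMs) {
        if (fired || nowMs - lastSpeechMs < thresholdMs) {
            return false;
        }
        fired = true;
        return true;
    }
}
```

When `shouldTrigger` returns true, your server would then call one of the two interfaces described below.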

An AI agent can speak proactively in two ways:

- Trigger LLM: You send a message on behalf of the user, which prompts the AI agent to output text and voice based on the context.

- Trigger TTS: You make the AI agent speak a given piece of text, usually a fixed script such as "Hello, welcome to use ZEGOCLOUD AI Agent service."

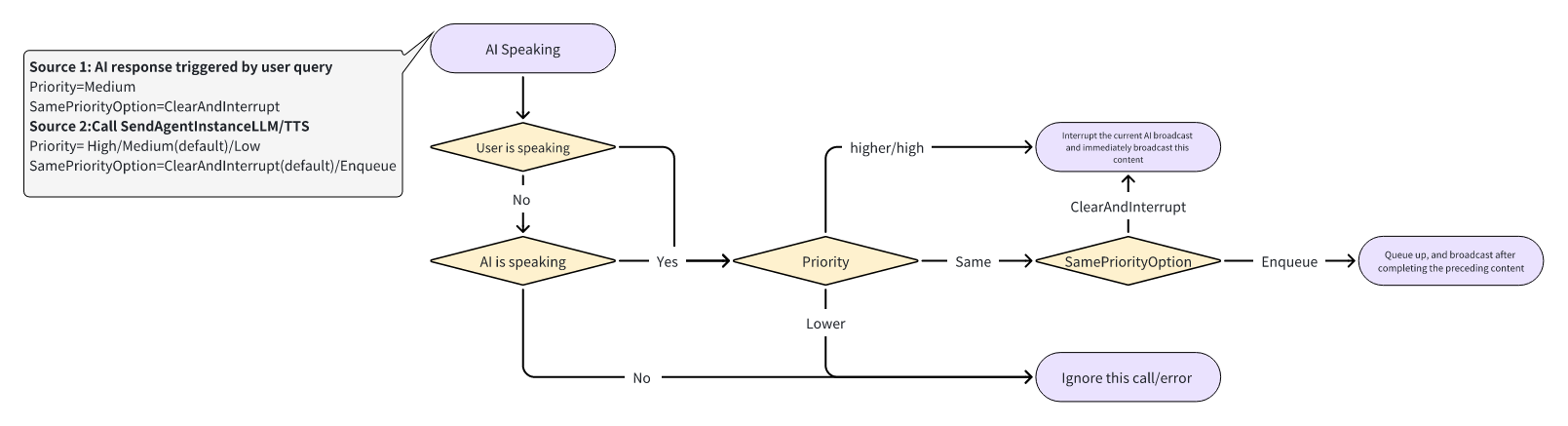

You can achieve different effects by setting two optional priority parameters:

- Priority (High, Medium, Low).

- SamePriorityOption (ClearAndInterrupt, Enqueue).

Prerequisites

Complete the basic process described in Quick Start.

Usage

The Trigger LLM and Trigger TTS sections list all related parameters, but the example requests only demonstrate the required ones.

Trigger LLM

Call the SendAgentInstanceLLM API to trigger the LLM to output text and voice.

When calling SendAgentInstanceLLM, the AI Agent server will concatenate a context, which consists of three parts:

- Placed at the front is the SystemPrompt, the temporary system prompt for this conversation.

- In the middle are the previous conversation records, the number of which is determined by WindowSize.

- At the end is the Text set in this interface.
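The three-part layout above can be pictured with the following sketch. It only illustrates the ordering; it is not the server's actual implementation. The class `ContextSketch`, the use of plain strings for messages, and the assumption that WindowSize selects the most recent records are all illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;

class ContextSketch {
    /**
     * Assembles the context in the documented order:
     * SystemPrompt, then recent history, then the Text of this call.
     * Assumption: WindowSize keeps the most recent records.
     */
    static List<String> buildContext(String systemPrompt, List<String> history,
                                     int windowSize, String text) {
        List<String> context = new ArrayList<>();
        context.add(systemPrompt);
        // Keep only the last windowSize records (or all of them if fewer exist).
        int from = Math.max(0, history.size() - Math.max(0, windowSize));
        context.addAll(history.subList(from, history.size()));
        context.add(text);
        return context;
    }
}
```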

The text information passed to this method will not be recorded in the conversation message history, nor will it be delivered through RTC room messages. However, the responses generated by the LLM will be recorded in the conversation message history and will be delivered through RTC room messages.

The interface parameters are as follows:

| Parameter | Type | Required | Description |

|---|---|---|---|

| AgentInstanceId | String | Yes | The unique identifier of the agent instance, obtained through the response parameter of the Create An Agent Instance interface. |

| Text | String | Yes | The text content sent to the LLM service. |

| SystemPrompt | String | No | The temporary system prompt for this conversation. If not provided, it will use the SystemPrompt in the LLM parameters from Register An Agent or Create An Agent Instance. |

| AddQuestionToHistory | Boolean | No | Whether to add the question to the context. The default value is false. |

| AddAnswerToHistory | Boolean | No | Whether to add the answer to the context. The default value is false. |

| Priority | String | No | Task priority. The default value is Medium. Optional values: Low (low priority), Medium (medium priority), High (high priority). |

| SamePriorityOption | String | No | The interruption strategy when a task of the same priority already exists. The default value is ClearAndInterrupt. Optional values: ClearAndInterrupt (clear and interrupt), Enqueue (wait in the queue; at most 5 tasks can be queued). |
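Because the example requests in this document only show the required parameters, the following sketch shows one way a request body that also carries optional parameters from the table above might be assembled. The class `LlmRequestBody` is hypothetical, the escaping is deliberately minimal (backslashes and double quotes only), and a real project would normally use a JSON library. Sending the request, including authentication, is out of scope here.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class LlmRequestBody {
    /** Builds a JSON body from the two required fields plus any optional ones. */
    static String build(String agentInstanceId, String text, Map<String, Object> optional) {
        Map<String, Object> fields = new LinkedHashMap<>();
        fields.put("AgentInstanceId", agentInstanceId);
        fields.put("Text", text);
        if (optional != null) {
            fields.putAll(optional); // e.g. Priority, SamePriorityOption, AddAnswerToHistory
        }
        StringBuilder json = new StringBuilder("{");
        for (Map.Entry<String, Object> e : fields.entrySet()) {
            if (json.length() > 1) {
                json.append(',');
            }
            json.append('"').append(e.getKey()).append("\":");
            Object value = e.getValue();
            if (value instanceof Boolean) {
                json.append(value); // booleans are written without quotes
            } else {
                json.append('"').append(escape(String.valueOf(value))).append('"');
            }
        }
        return json.append('}').toString();
    }

    /** Minimal escaping: backslashes and double quotes only. */
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }
}
```

Passing `null` as the third argument yields a body with only the required parameters, matching the example request that follows.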

Example request:

{
    "AgentInstanceId": "1907755175297171456",
    "Text": "How's the weather today?"
}

Trigger TTS

Call the SendAgentInstanceTTS API to make the agent speak a segment of text content.

The text message passed to this interface will be recorded in the conversation message history based on the AddHistory parameter as context input for the LLM, and this message will also be delivered through RTC room messages.

The interface parameters are as follows:

| Parameter | Type | Required | Description |

|---|---|---|---|

| AgentInstanceId | String | Yes | The unique identifier of the agent instance, obtained through the response parameter of the Create An Agent Instance interface. |

| Text | String | Yes | The text content for TTS, with a maximum length of no more than 300 characters. |

| AddHistory | Boolean | No | Whether to record the text message in the conversation message history as context input for the LLM. The default value is true. |

| Priority | String | No | Task priority. The default value is Medium. Optional values: Low (low priority), Medium (medium priority), High (high priority). |

| SamePriorityOption | String | No | The interruption strategy when a task of the same priority already exists. The default value is ClearAndInterrupt. Optional values: ClearAndInterrupt (clear and interrupt), Enqueue (wait in the queue; at most 5 tasks can be queued). |
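The constraints in the table above can be checked on your side before the request is sent. The sketch below is one possible pre-flight check; `TtsRequestValidator` is a hypothetical helper, and counting the 300-character limit in Unicode code points is an assumption, since the table does not say how the server counts characters.

```java
import java.util.Arrays;
import java.util.List;

class TtsRequestValidator {
    static final int MAX_TEXT_LENGTH = 300;
    static final List<String> PRIORITIES = Arrays.asList("Low", "Medium", "High");
    static final List<String> SAME_PRIORITY_OPTIONS = Arrays.asList("ClearAndInterrupt", "Enqueue");

    /** Returns null when the parameters look valid, otherwise a description of the first problem. */
    static String validate(String text, String priority, String samePriorityOption) {
        if (text == null || text.isEmpty()) {
            return "Text is required";
        }
        // Assumption: the limit is counted in code points.
        if (text.codePointCount(0, text.length()) > MAX_TEXT_LENGTH) {
            return "Text exceeds " + MAX_TEXT_LENGTH + " characters";
        }
        // Optional parameters may be omitted (null); the server then applies its defaults.
        if (priority != null && !PRIORITIES.contains(priority)) {
            return "Unknown Priority: " + priority;
        }
        if (samePriorityOption != null && !SAME_PRIORITY_OPTIONS.contains(samePriorityOption)) {
            return "Unknown SamePriorityOption: " + samePriorityOption;
        }
        return null;
    }
}
```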

Example request:

{
    "AgentInstanceId": "1907780504753553408",
    "Text": "Hello, welcome to use ZEGOCLOUD AI Agent service."
}

Usage Example

Scenario 1: AI plays a welcome message

You want the AI to play a welcome message when the user starts a voice or digital human conversation with it.

- Ensure the user has pulled the RTC stream of the Agent instance

// Set the event handler after creating the engine
engine.setEventHandler(new IZegoEventHandler() {
    @Override
    public void onPlayerStateUpdate(String streamID, ZegoPlayerState state,
                                    int errorCode, JSONObject extendedData) {
        super.onPlayerStateUpdate(streamID, state, errorCode, extendedData);
        if (errorCode != 0) {
            Log.d("Zego", "Pull stream error: " + streamID);
            return;
        }
        // Listen for the pull stream status change
        switch (state) {
            case PLAYING:
                Log.d("Zego", "Pull stream successfully, can call the interface to play the welcome message");
                break;
            case PLAY_REQUESTING:
                Log.d("Zego", "Pulling stream: " + streamID);
                break;
            case NO_PLAY:
                Log.d("Zego", "Pull stream stopped: " + streamID);
                break;
        }
    }
});

- Call the interface to start playing the welcome message. There are two ways to implement this:

- SendAgentInstanceLLM: Let the LLM generate the welcome message based on the context.

{
    "AgentInstanceId": "1907755175297171456",
    "Text": "Say one welcome message"
}

- SendAgentInstanceTTS: Use a fixed script to make the AI play the welcome message.

{
    "AgentInstanceId": "1907755175297171456",
    "Text": "hello"
}

If the user starts speaking while the welcome message is playing, the AI is interrupted immediately, so the welcome message may not be played in full. To ensure that the user hears the welcome message, set:

Priority=High

For example, to send the welcome message through SendAgentInstanceTTS, use:

{
    "AgentInstanceId": "1907755175297171456",
    "Text": "hello",
    "Priority": "High"
}

Scenario 2: On a cold start, the AI speaks proactively and can be interrupted by the user's speech

When neither the user nor the AI is speaking, you want the AI to start a topic on its own. While the AI is speaking, the user's speech should take precedence, that is, the user can interrupt the AI. You can achieve this by calling SendAgentInstanceLLM or SendAgentInstanceTTS directly.

There are two ways to initiate the topic:

- Let the LLM generate a proactive message based on the context.

Request example: call the SendAgentInstanceLLM interface with Text set to "Now the user has not spoken for a while, please say something?"

{
    "AgentInstanceId": "1907755175297171456",
    "Text": "Now the user has not spoken for a while, please say something?",
    "Priority": "Medium"
}

- Use a fixed script to make the AI speak.

Request example: call the SendAgentInstanceTTS interface with Text set to "Why don't you speak?"

{
    "AgentInstanceId": "1907755175297171456",
    "Text": "Why don't you speak?",
    "Priority": "Medium"
}

Scenario 3: At a critical point, the AI must speak immediately and finish the entire message

For example, when a time limit is reached, the AI must remind the user immediately, regardless of whether the user or the AI is currently speaking.

In addition to setting the LLM prompt text or the TTS content, you also need to configure:

- Priority=High

- SamePriorityOption=ClearAndInterrupt

Request example: call the SendAgentInstanceTTS interface with Text set to "Time's up, please check immediately."

{
    "AgentInstanceId": "1907755175297171456",
    "Text": "Time's up, please check immediately.",
    "Priority": "High",
    "SamePriorityOption": "ClearAndInterrupt"
}

Scenario 4: While the AI is speaking, additional content must be played after the current content finishes

For example, while the AI is replying to the user's question, you want it to ask whether the user has any other ideas as soon as it finishes the current answer.

In addition to setting the LLM prompt text or the TTS content, you also need to configure:

- Priority=Medium

- SamePriorityOption=Enqueue

Request example: call the SendAgentInstanceTTS interface with Text set to "Do you have any other ideas?"

{
    "AgentInstanceId": "1907755175297171456",
    "Text": "Do you have any other ideas?",
    "Priority": "Medium",
    "SamePriorityOption": "Enqueue"
}
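The four scenarios differ only in which Priority and SamePriorityOption values they send, so business code could keep them as named presets. The enum below is a hypothetical sketch that mirrors the request examples above; a `null` value means the parameter is omitted and the server default applies.

```java
/** Presets mirroring the Priority / SamePriorityOption values used in Scenarios 1 to 4. */
enum SpeechScenario {
    WELCOME_MESSAGE("High", null),                  // Scenario 1, when the user must hear the welcome
    COLD_START_TOPIC("Medium", null),               // Scenario 2, user speech may interrupt
    CRITICAL_REMINDER("High", "ClearAndInterrupt"), // Scenario 3, speak immediately
    QUEUED_FOLLOW_UP("Medium", "Enqueue");          // Scenario 4, speak after the current content

    final String priority;
    final String samePriorityOption; // null means: omit the parameter

    SpeechScenario(String priority, String samePriorityOption) {
        this.priority = priority;
        this.samePriorityOption = samePriorityOption;
    }
}
```

For instance, `SpeechScenario.QUEUED_FOLLOW_UP.samePriorityOption` gives the value to place in the request body for Scenario 4.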