Use AI Agent with RAG

Background

In practical applications of large language models (LLMs), relying on a single LLM for input and output often fails to achieve the desired results. Common issues include AI hallucinations (fabricated answers), irrelevant or inaccurate answers, and even risks to business operations.

Connecting the LLM to an external knowledge base can effectively address these challenges. Retrieval-Augmented Generation (RAG) is one such technique: relevant content is retrieved from the knowledge base and provided to the LLM together with the user's question.

This article explains how to combine the AI Agent with RAG so that the AI Agent can answer questions based on an external knowledge base.

Solution

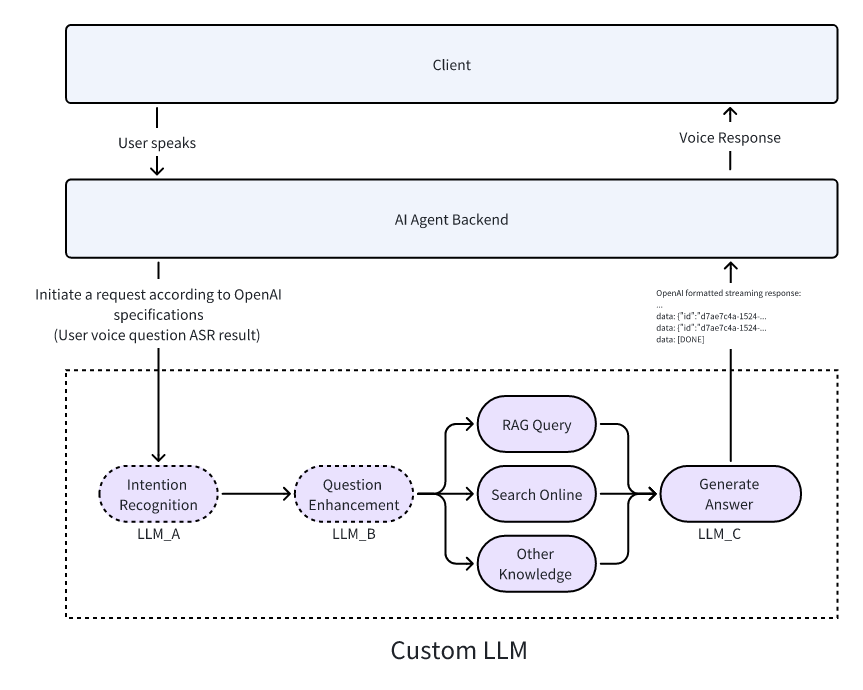

The implementation is illustrated in the following diagram:

- The user speaks, and the audio stream is published to ZEGOCLOUD Real-Time Audio and Video Cloud via the ZEGO Express SDK.

- The AI Agent backend receives the audio stream, converts it to text, and then sends a ChatCompletion request to your custom LLM service following the OpenAI protocol.

- Your custom LLM service performs RAG retrieval upon receiving the request, combines the retrieved fragments with the user's latest question, and calls the LLM to generate a streaming response.

- The AI Agent backend converts the LLM's streaming response into an audio stream, pushes it to the client via the real-time audio and video cloud, and the user hears the AI Agent's answer.

- The "Intent Recognition" and "Question Enhancement" steps in the diagram are optional, but we recommend implementing them to improve the accuracy of the AI Agent's answers.

- The diagram also shows "Search online" and other steps that run in parallel with "RAG Query." These steps are optional; implement them as your business requires, in the same way as the RAG query.

- LLM_A, LLM_B, and LLM_C in the diagram indicate that each node can use a model from a different LLM provider, chosen for performance and cost. You can also use the same model throughout.
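The flow inside the custom LLM service can be sketched as a small pipeline. This is a minimal sketch, not ZEGOCLOUD's implementation: the `RagPipelineDeps` interface and the `recognizeIntent`, `enhanceQuestion`, `retrieve`, and `generate` functions are hypothetical placeholders for your own intent classifier, question rewriter, RAG query, and answering LLM call. Each of them could use a different model, as LLM_A, LLM_B, and LLM_C suggest.

```typescript
// Hypothetical dependencies: plug in your own models and retrieval backend.
export interface RagPipelineDeps {
  // Optional: decide whether the question needs knowledge base retrieval at all
  recognizeIntent?: (question: string, history: string[]) => Promise<boolean>;
  // Optional: rewrite the question into a standalone, retrieval-friendly query
  enhanceQuestion?: (question: string, history: string[]) => Promise<string>;
  // Required: return the retrieved knowledge base fragments as text
  retrieve: (query: string) => Promise<string>;
  // Required: call the answering LLM with the assembled prompt
  generate: (prompt: string) => Promise<string>;
}

export async function answerWithRag(
  question: string,
  history: string[],
  deps: RagPipelineDeps,
): Promise<string> {
  // Intent recognition (optional): e.g. skip retrieval for small talk
  const needsKnowledge = deps.recognizeIntent
    ? await deps.recognizeIntent(question, history)
    : true;
  if (!needsKnowledge) {
    return deps.generate(question);
  }

  // Question enhancement (optional): resolve references using the conversation history
  const query = deps.enhanceQuestion
    ? await deps.enhanceQuestion(question, history)
    : question;

  // RAG query; other sources such as online search could run in parallel here
  const kbContent = await deps.retrieve(query);

  // Combine the retrieved fragments with the user's latest question
  const prompt = kbContent
    ? `Knowledge base content:\n${kbContent}\nUser question: ${question}`
    : question;
  return deps.generate(prompt);
}
```

Passing the steps in as functions keeps the optional stages truly optional and lets you swap the model behind each node without touching the orchestration.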

Example Code

The following is example code for a business backend that integrates the real-time interactive AI Agent API. You can use it as a reference to implement your own business logic.

It includes basic capabilities such as obtaining a ZEGO Token, registering an agent, creating an agent instance, and deleting an agent instance.

The following is example code for a client that integrates the real-time interactive AI Agent API. You can use it as a reference to implement your own business logic.

It includes basic capabilities such as logging in to a room, publishing a stream, playing a stream, and exiting the room.

The following video demonstrates how to run the server and client (iOS) example code and interact with the agent by real-time voice.

- The server must be deployed to a publicly accessible network environment. Do not use localhost or LAN addresses.

- When deploying, use the environment variables of the rag branch.
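Because the AI Agent backend calls your server over the internet, a localhost or LAN URL will not be reachable from it. As an optional safeguard, a startup check such as the hypothetical `isPubliclyReachableUrl` helper below can reject obviously private addresses before you configure them. It is a minimal sketch: it only inspects the literal hostname (localhost, IPv6 loopback, and private or loopback IPv4 ranges) and does not resolve DNS names.

```typescript
// Hypothetical helper: returns false for URLs whose host is localhost or a
// loopback/private/link-local IPv4 literal. Domain names are not resolved.
export function isPubliclyReachableUrl(rawUrl: string): boolean {
  let url: URL;
  try {
    url = new URL(rawUrl);
  } catch {
    return false; // not a valid absolute URL
  }
  const host = url.hostname.toLowerCase();
  if (host === 'localhost' || host.endsWith('.localhost') || host === '[::1]') {
    return false;
  }

  const m = host.match(/^(\d{1,3})\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})$/);
  if (!m) return true; // a domain name: accepted without a DNS check
  const a = Number(m[1]);
  const b = Number(m[2]);
  if (a === 127 || a === 10 || a === 0) return false; // loopback, private, "this network"
  if (a === 192 && b === 168) return false;           // private
  if (a === 172 && b >= 16 && b <= 31) return false;  // private
  if (a === 169 && b === 254) return false;           // link-local
  return true;
}
```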

Implement Server Functionality

Implement RAG Retrieval

There are several ways to implement RAG retrieval. The following are some common solutions:

This article uses RAGFlow as an example to introduce the implementation.

Deploy RAGFlow

Please refer to the RAGFlow Deployment Documentation to deploy RAGFlow.

Create Knowledge Base

Please refer to the RAGFlow Create Knowledge Base Documentation to create a knowledge base.

Implement RAG Retrieval Interface

Please refer to the RAGFlow Retrieve chunks interface documentation to implement the RAG retrieval interface.

Environment variables used in the example code:

- RAGFLOW_KB_DATASET_ID: The knowledge base ID. Open the knowledge base in RAGFlow; the value of the ID request parameter in the page URL is the knowledge base ID.

- RAGFLOW_API_ENDPOINT: The RAGFlow API server address. Click the upper-right corner to open the system settings page, then go to API > API server.

- RAGFLOW_API_KEY: Click the upper-right corner to open the system settings page, go to API, click the "API KEY" button, and create a new key.

- KB_CHUNK_COUNT: The maximum number of retrieved chunks to include in the knowledge base content passed to the LLM.

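```typescript
// Request parameters of the RAGFlow retrieval API
export interface RetrieveParams {
  question: string;
  dataset_ids?: string[];
  document_ids?: string[];
  page?: number;
  page_size?: number;
  similarity_threshold?: number;
  vector_similarity_weight?: number;
  top_k?: number;
  rerank_id?: string;
  keyword?: boolean;
  highlight?: boolean;
}

// Response fields used below (the API returns more)
interface RagFlowChunk {
  content: string;
  document_keyword: string; // name of the source document
}

interface RagFlowRetrievalResponse {
  code: number; // 0 on success
  message?: string;
  data?: { chunks: RagFlowChunk[] };
}

export interface RagFlowResponse {
  kbContent: string;
  rawResponse: RagFlowRetrievalResponse;
}

export async function retrieveFromRagflow({
  question,
  // Avoid sending [undefined] when the environment variable is not set
  dataset_ids = process.env.RAGFLOW_KB_DATASET_ID ? [process.env.RAGFLOW_KB_DATASET_ID] : [],
  document_ids = [],
  page = 1,
  page_size = 100,
  similarity_threshold = 0.2,
  vector_similarity_weight = 0.3,
  top_k = 1024,
  rerank_id,
  keyword = true,
  highlight = false,
}: RetrieveParams): Promise<RagFlowResponse> {
  // Check the required environment variables
  if (!process.env.RAGFLOW_API_KEY || !process.env.RAGFLOW_API_ENDPOINT) {
    throw new Error('Missing required RAGFlow configuration');
  }

  // At least one of dataset_ids and document_ids must be provided
  if (!dataset_ids.length && !document_ids.length) {
    throw new Error('Provide at least one of dataset_ids or document_ids');
  }

  // Build the request body
  const requestBody = {
    question,
    dataset_ids,
    document_ids,
    page,
    page_size,
    similarity_threshold,
    vector_similarity_weight,
    top_k,
    rerank_id, // omitted by JSON.stringify when undefined
    keyword,
    highlight,
  };

  try {
    // Endpoint format from the official RAGFlow documentation
    const response = await fetch(`${process.env.RAGFLOW_API_ENDPOINT}/api/v1/retrieval`, {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Authorization': `Bearer ${process.env.RAGFLOW_API_KEY}`,
      },
      body: JSON.stringify(requestBody),
    });

    if (!response.ok) {
      const errorData = await response.text();
      throw new Error(`RAGFlow API error: ${response.status} ${response.statusText}, details: ${errorData}`);
    }

    const data: RagFlowRetrievalResponse = await response.json();
    // RAGFlow reports API errors through a non-zero code in the response body
    if (data.code !== 0 || !data.data) {
      throw new Error(`RAGFlow retrieval error: code ${data.code}, ${data.message ?? 'no message'}`);
    }

    // Many chunks may be returned, so keep at most KB_CHUNK_COUNT of them
    // (the fallback of 5 when the variable is unset or invalid is an arbitrary choice)
    const maxChunks = Number(process.env.KB_CHUNK_COUNT) || 5;

    // Concatenate the retrieved chunks into a single text block
    const kbContent = data.data.chunks
      .slice(0, maxChunks)
      .map((chunk) => `doc_name: ${chunk.document_keyword}\ncontent: ${chunk.content}\n\n`)
      .join('');

    return { kbContent, rawResponse: data };
  } catch (error) {
    console.error('RAGFlow retrieval failed:', error);
    throw error;
  }
}
```

Implement Custom LLM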

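Create an HTTP interface that conforms to the OpenAI Chat Completions API protocol. When the AI Agent backend sends a ChatCompletion request, the interface retrieves knowledge base content for the user's latest question (for example, with retrieveFromRagflow), combines it with the question, calls the LLM, and returns the answer as a streaming response.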
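The core of such a service can be sketched as a few pure functions: one that extracts the user's latest question from the incoming OpenAI-style `messages` array, one that injects the retrieved knowledge base text into that message, and one that formats generated text as OpenAI-compatible `chat.completion.chunk` server-sent events. This is a minimal sketch: `ChatMessage`, `latestUserQuestion`, `injectKnowledge`, `toSseChunk`, and `SSE_DONE` are hypothetical names, and wiring them to an HTTP server and to your upstream LLM is left to your framework of choice.

```typescript
// OpenAI-style chat message (only the fields used here)
export interface ChatMessage {
  role: 'system' | 'user' | 'assistant';
  content: string;
}

// Extracts the user's latest question, which is used as the RAG query.
export function latestUserQuestion(messages: ChatMessage[]): string | undefined {
  return [...messages].reverse().find((m) => m.role === 'user')?.content;
}

// Returns a copy of `messages` in which the latest user message is prefixed with
// the retrieved knowledge base content (e.g. kbContent from retrieveFromRagflow).
export function injectKnowledge(messages: ChatMessage[], kbContent: string): ChatMessage[] {
  const result = messages.map((m) => ({ ...m }));
  for (let i = result.length - 1; i >= 0; i--) {
    if (result[i].role === 'user') {
      result[i].content = `Knowledge base content:\n${kbContent}\nUser question: ${result[i].content}`;
      break;
    }
  }
  return result;
}

// Formats one piece of generated text as an OpenAI-compatible streaming chunk (SSE line).
export function toSseChunk(id: string, model: string, content: string, done = false): string {
  const chunk = {
    id,
    object: 'chat.completion.chunk',
    created: Math.floor(Date.now() / 1000),
    model,
    choices: [{ index: 0, delta: done ? {} : { content }, finish_reason: done ? 'stop' : null }],
  };
  return `data: ${JSON.stringify(chunk)}\n\n`;
}

// The stream ends with this sentinel, as in the OpenAI streaming protocol.
export const SSE_DONE = 'data: [DONE]\n\n';
```

In a request handler, you would pass `latestUserQuestion(body.messages)` to the retrieval function, call `injectKnowledge` with the returned text, forward the messages to your LLM with streaming enabled, and write each generated piece with `toSseChunk` (then `SSE_DONE`) using the `text/event-stream` content type. Copying the messages instead of mutating them keeps the original request intact for logging.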

Register Agent and Use Custom LLM

When registering the agent (RegisterAgent), set LLM.Url to the URL of your custom LLM service, and use the SystemPrompt to instruct the LLM to answer the user's question based on the knowledge base content.

```typescript
// Please replace the LLM and TTS authentication parameters such as ApiKey, appid, token, etc. with your actual authentication parameters.
async registerAgent(agentId: string, agentName: string) {
  // Request interface: https://aigc-aiagent-api.zegotech.cn?Action=RegisterAgent
  const action = 'RegisterAgent';
  const body = {
    AgentId: agentId,
    Name: agentName,
    LLM: {
      Url: "https://your-custom-llm-service/chat/completions",
      ApiKey: "your_api_key",
      Model: "your_model",
      SystemPrompt: "Please answer the user's question in a friendly manner based on the knowledge base content provided by the user. If the user's question is not in the knowledge base, please politely tell the user that we do not have related knowledge base content."
    },
    TTS: {
      Vendor: "ByteDance",
      Params: {
        "app": {
          "appid": "zego_test",
          "token": "zego_test",
          "cluster": "volcano_tts"
        },
        "audio": {
          "voice_type": "zh_female_wanwanxiaohe_moon_bigtts"
        }
      }
    }
  };
  // The sendRequest method encapsulates the request URL and public parameters. For details, please refer to: https://doc-zh.zego.im/aiagent-server/api-reference/accessing-server-apis
  return this.sendRequest<any>(action, body);
}
```

Create Agent Instance

Use the registered agent as a template to create multiple agent instances that join different rooms and interact with different users in real time. Once created, an agent instance automatically logs in to the room and publishes its stream; at the same time, it plays the real user's stream.

After the agent instance is created, the real user can interact with it in real time: the client listens for stream change events and plays the agent's stream.

The following example calls the CreateAgentInstance interface:

```typescript
async createAgentInstance(agentId: string, userId: string, rtcInfo: RtcInfo, messages?: any[]) {
  // Request interface: https://aigc-aiagent-api.zegotech.cn?Action=CreateAgentInstance
  const action = 'CreateAgentInstance';
  const body = {
    AgentId: agentId,
    UserId: userId,
    RTC: rtcInfo,
    MessageHistory: {
      SyncMode: 1, // Change to 0 to use history messages from ZIM
      Messages: messages && messages.length > 0 ? messages : [],
      WindowSize: 10
    }
  };
  // The sendRequest method encapsulates the request URL and public parameters. For details, please refer to: https://doc-zh.zego.im/aiagent-server/api-reference/accessing-server-apis
  const result = await this.sendRequest<any>(action, body);
  console.log("create agent instance result", result);
  // The client needs to save the returned AgentInstanceId, which is used later to delete the agent instance.
  return result.AgentInstanceId;
}
```

After completing this step, you have successfully created an agent instance. Once the client is integrated, you can interact with the agent instance in real time.
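When the conversation ends, the saved AgentInstanceId is used to delete the agent instance, which the example backend also supports. The following sketch mirrors the createAgentInstance example above. It assumes the action is named `DeleteAgentInstance` and that its body carries an `AgentInstanceId` field; verify both against the ZEGOCLOUD server API reference. To keep it self-contained, `sendRequest` is passed in with a hypothetical `SendRequest` signature instead of being a class method.

```typescript
// Hypothetical signature of the example's sendRequest helper, which wraps the
// request URL and common parameters of the AI Agent server API.
export type SendRequest = <T>(action: string, body: Record<string, unknown>) => Promise<T>;

// Deletes an agent instance created by CreateAgentInstance.
// Assumption: action 'DeleteAgentInstance' with an AgentInstanceId body field.
export async function deleteAgentInstance(
  sendRequest: SendRequest,
  agentInstanceId: string,
): Promise<void> {
  if (!agentInstanceId) {
    throw new Error('agentInstanceId is required');
  }
  const action = 'DeleteAgentInstance';
  const body = { AgentInstanceId: agentInstanceId };
  await sendRequest<unknown>(action, body);
}
```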

Implement Client Functionality

Refer to the following documents to integrate the client:

Quick Start

Congratulations🎉! After completing this step, you have successfully integrated the client SDK and can interact with the agent instance in real time. You can ask the agent any question, and the agent will answer your question after querying the knowledge base!